Artificial Intelligence is no longer a futuristic concept locked away in research labs — it’s now shaping boardroom discussions and redefining business strategy. Every leader today faces a critical decision: how to harness AI not just for innovation, but for competitive advantage. And with so many new models and claims flooding the market, that decision has never been more complex.

A single misstep — the wrong choice of AI model, a rushed integration, or an overhyped investment — can drain budgets, expose vulnerabilities, and set a company back years. The fear of choosing wrong has left many organizations stuck in analysis paralysis, watching opportunities pass by.

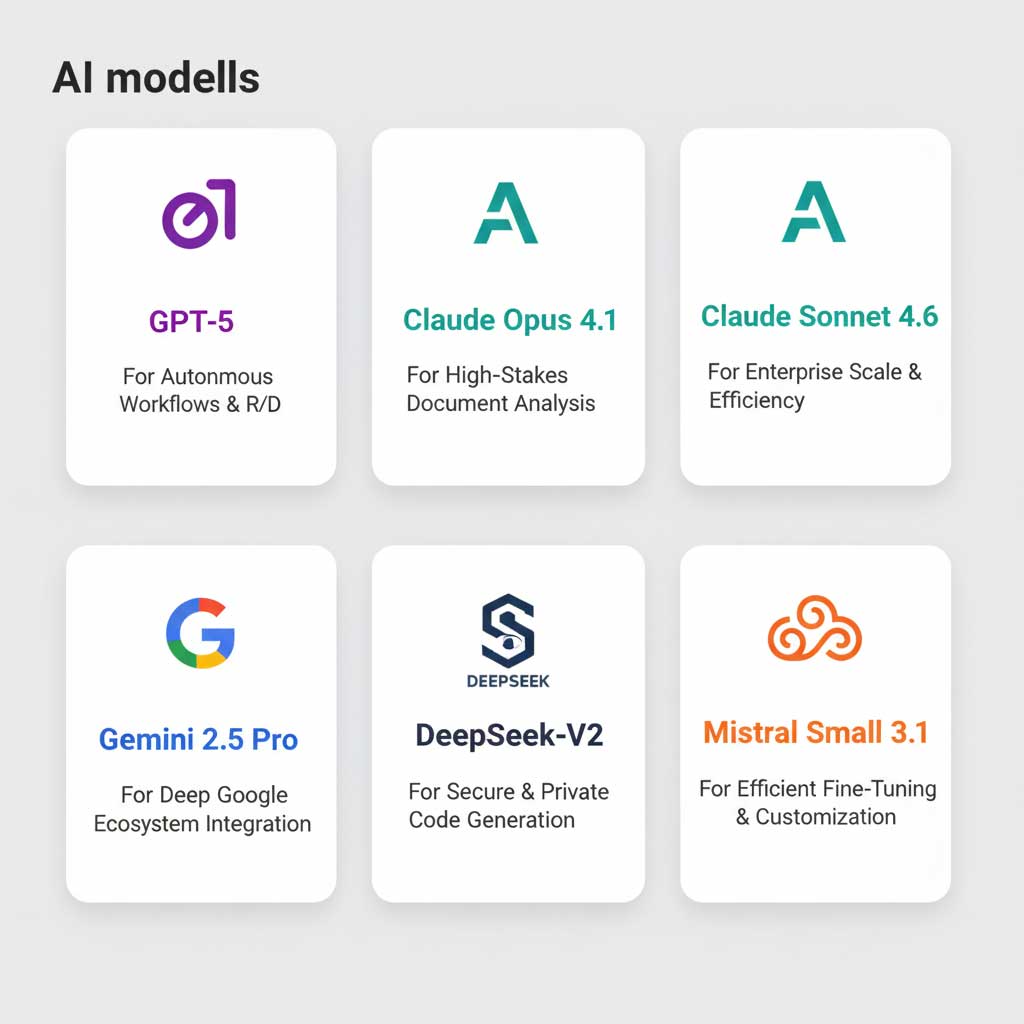

This article aims to break that cycle. Instead of technical jargon or marketing noise, we’ll offer a clear, strategic comparison of today’s leading AI models, from proprietary powerhouses like GPT-5 and Claude Opus 4.1 to open-source disruptors like DeepSeek-V2 and Qwen 3. The goal is to help you choose the right AI path: one that fits your business, your goals, and your future.

The Quick Verdict: Your Strategic AI Shortlist

In the spirit of clarity and immediate value, let’s begin with the answer. The best AI model is the one that most effectively solves your most critical business problem. To that end, here is a strategic shortlist designed to help you immediately identify the top contenders for your specific needs, grounded in the strategic imperative behind each choice.

| Model | Type | Core Strength | Strategic Imperative | Key Limitation |
| --- | --- | --- | --- | --- |
| GPT-5 | Proprietary | Complex Reasoning & Agency | To build autonomous workflows and solve novel R&D challenges. | High cost at scale, creates dependency on a single external provider. |
| Claude Opus 4.1 | Proprietary | Massive Context & Nuance | To analyze vast, dense documents (legal, financial) with high-stakes accuracy. | Can be overly cautious (“refusals”), impacting some creative or ambiguous tasks. |
| Claude Sonnet 4.6 | Proprietary | Speed & Cost-Efficiency | To scale reliable AI across the enterprise for high-throughput operations. | Less powerful for complex, multi-step reasoning compared to Opus. |
| Grok-4 | Proprietary | Real-Time Web Integration | To operate with a live pulse on the market for dynamic strategy and PR. | Output quality is dependent on real-time web data; can inherit biases. |
| Gemini 2.5 Pro | Proprietary | Deep Ecosystem Integration | To create a hyper-productive, automated workflow within the Google ecosystem. | Creates strong vendor lock-in; peak performance is tied to Google Cloud. |
| DeepSeek-V2 | Open-Source | Elite Code Generation | To build a secure, proprietary coding environment and protect intellectual property. | Highly specialized for code; not a leader for general-purpose or creative tasks. |
| Mistral Small 3.1 | Open-Source | Efficiency & Fine-Tuning | To create cost-effective, specialized AI tools that perfectly match brand voice or function. | Requires significant in-house technical expertise to deploy and maintain. |
| Qwen 3 | Open-Source | Multilingual Capabilities | To build a truly global communication and support infrastructure. | Smaller developer community compared to models like Mistral or Llama. |
| Gemma 3 | Open-Source | Accessibility & Prototyping | To democratize AI development and enable rapid, low-cost experimentation. | Lacks the raw power for heavy-duty, enterprise-scale production workloads. |
| GLM-4.6 | Open-Source | Bilingual & Tool Integration | To dominate in markets requiring elite English/Chinese performance and function calling. | Less optimized for other global languages compared to competitors like Qwen 3. |

The Great Divide: A Strategic Framework for Choosing Your Path

Before we dissect the individual models, you must first confront the single most important decision in your AI journey. This is the fundamental strategic crossroads, and the path you choose will have profound, long-term implications for your budget, your security, your culture, and your competitive position.

Path 1: The Acceleration Lane (Proprietary Models)

Choosing a proprietary model from a provider like OpenAI, Anthropic, or Google is a strategy for speed. It is a declaration that your primary goal is to innovate, iterate, and get to market faster than your competition. You are, in effect, leasing a world-class R&D department, gaining immediate access to the bleeding edge of AI research without the multi-billion-dollar, multi-year investment required to build it yourself.

This path is for the disruptor who needs to move quickly, the market leader who must maintain their pace, and the organization that views AI as a powerful tool to be wielded, rather than a core competency to be built. The strategic trade-off is a measure of control. You are building a critical part of your business on rented land, making you subject to the roadmap, pricing, and priorities of your provider.

Path 2: The Sovereignty Fortress (Open-Source Models)

Choosing the open-source path is a strategy for long-term defensibility and ultimate control. It is a statement that your data is your most valuable asset and that your AI capabilities will become a core, proprietary part of your business—an asset that cannot be easily replicated by competitors. This is the path of building a deep, defensible moat around your operations.

This path is for the organization in a highly regulated industry like finance or healthcare, the company whose “secret sauce” lies in its unique datasets, and the leader who is playing a game measured in decades, not quarters. The strategic trade-off is time and resources. It requires a significant upfront and ongoing investment in specialized talent—specifically in MLOps and DevOps engineers—and the infrastructure to support it.

The Proprietary Elites: A Deep Dive into the Price of Peak Performance

These models represent the apex of commercially available AI. They are powerful, sophisticated, and backed by the world’s most advanced research labs. Understanding their specific, nuanced strengths is the key to unlocking their immense potential and justifying their premium cost.

GPT-5: The Autonomous Engine for R&D and Process Re-engineering

OpenAI’s GPT-5 has transcended the label of “chatbot.” It is an engine for autonomous action. Its defining characteristic is its agency—the ability to understand a high-level, ambiguous goal, deconstruct it into a series of logical steps, independently select and operate digital tools (like a web browser, a code interpreter, or a data analysis package), and execute a complex plan from start to finish.

For business leaders, this shifts the paradigm from simple task augmentation to complete workflow automation. Instead of asking it to “help you write an email,” you can task a GPT-5 agent to “manage the entire lead nurturing sequence for our upcoming webinar, including segmenting the audience, personalizing the outreach, and analyzing the engagement data to recommend follow-up actions.” This is the model you choose when your goal is to solve novel, complex problems and fundamentally re-engineer how work is done in your organization.

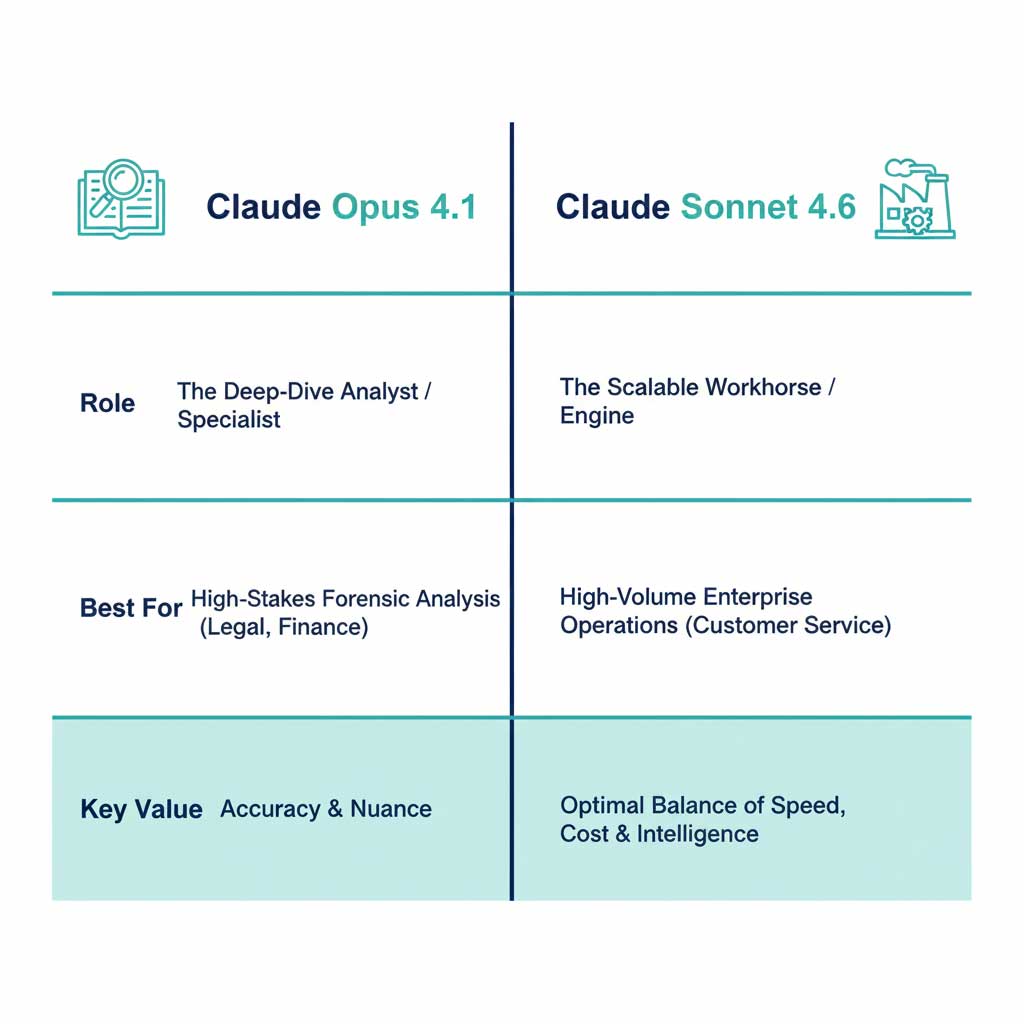

The Claude Family: A Masterclass in Specialized and Scalable Intelligence

Anthropic has split its strategy to serve two distinct, yet equally critical, enterprise needs. To view Claude Opus 4.1 and the newer, highly optimized Claude Sonnet 4.6 as merely different power levels is to misunderstand their strategic value.

Claude Opus 4.1: The Deep-Dive Analyst for High-Stakes Accuracy

Claude Opus 4.1 is the scholar, the deep thinker, the forensic analyst. Its defining feature is its colossal context window, which allows it to reason over documents the size of a novel in a single prompt. This is not a trivial feature; it is a strategic superpower for professions where precision, nuance, and deep context are paramount.

Imagine a legal team facing a mountain of documents in a discovery process. They can feed hundreds of depositions, contracts, and email chains into Claude Opus 4.1 and ask complex, multi-layered questions like, “Identify every instance where ‘Project Titan’ was discussed in a negative or concerned context, cross-reference those dates with the travel logs of these three executives, and summarize any potential inconsistencies.” This is expert-level forensic analysis at machine speed. It is the AI you trust when the details matter most and the stakes are at their highest.

Claude Sonnet 4.6: The Scalable Workhorse for Enterprise Operations

If Opus is the brilliant specialist you hire for a critical project, Claude Sonnet 4.6 is the powerful, reliable, and tireless engine that runs your global factory 24/7. It is a masterpiece of optimization, engineered to deliver near-Opus level intelligence for the vast majority of business tasks at a fraction of the cost and with significantly higher speed. This is not a “lite” version; it is a strategic choice for scalability.

For any high-volume application—be it a customer service chatbot for a global airline handling millions of daily interactions, a content moderation system for a social platform, or an internal knowledge base for a 100,000-person company—Claude Sonnet 4.6 is the clear and obvious winner. It provides the optimal balance of intelligence, speed, and cost, making it the single best choice for deploying high-quality AI reliably and affordably across the entire enterprise.

Grok-4: The Real-Time Sentinel for Market Intelligence

In a business environment that moves at the speed of social media, strategy based on last quarter’s report is a recipe for irrelevance. Grok-4 is built to solve this fundamental problem by directly integrating real-time web data into its core reasoning process. It is designed to think with the live, unfiltered, and often chaotic pulse of the internet.

For a Chief Marketing Officer, this provides an immediate, unvarnished focus group on a new campaign, revealing in minutes what used to take weeks of survey data to uncover. For a PR team, it is an essential early warning system for a brewing crisis, allowing them to get ahead of a negative narrative before it takes hold. For a financial trader, it is a live sentiment analysis tool that can inform decisions in the moment. Grok-4 is the model for organizations that understand that in today’s world, the truth is what is happening right now.

Gemini 2.5 Pro: The Ecosystem Multiplier

Google’s Gemini 2.5 Pro is the ultimate expression of ecosystem advantage. As a natively multimodal model, it was built from the ground up to fluidly understand, process, and reason across text, images, code, audio, and video simultaneously. Its strategic power is most fully realized when it serves as the intelligent core of the Google Cloud and Workspace ecosystem.

It can transform a video meeting into a set of actionable meeting minutes in a Google Doc. It can turn a Google Sheet of raw sales data into a strategic marketing plan in Google Slides. It can analyze a folder of product images in Google Drive and generate compelling ad copy for a Google Ads campaign. For companies deeply embedded in the Google ecosystem, Gemini 2.5 Pro acts as a powerful multiplier, making every tool smarter and every workflow more seamlessly efficient.

A Comparison of Top-Performing LLMs

| AI Model | Good At | Benchmark / Key Metric | Limitation | Type |
| --- | --- | --- | --- | --- |
| GPT-5 (Core) | Autonomous agentic workflows, complex multi-step reasoning, novel problem-solving. | Sets new records on MMLU and reasoning benchmarks; unparalleled creative generation. | Highest cost, creates strong vendor dependency, potential data privacy concerns. | Proprietary (Closed) |
| GPT-5 “Codex 2” | Secure, enterprise-grade code generation, debugging, and complex system architecture. | State-of-the-art performance on HumanEval and other coding-specific benchmarks. | Highly specialized for code; less general-purpose reasoning power than the core model. | Proprietary (Closed) |
| Claude 3.6 Sonnet | Enterprise-scale operations with enhanced speed, reliability, and cost-efficiency. | Improved latency and lower API cost vs. 3.5 Sonnet, with stronger guardrails. | Less powerful than Opus for the most complex, multi-layered reasoning tasks. | Proprietary (Closed) |
| Claude 4.1 Opus “Turbo” | Deep analysis of vast documents, high-stakes accuracy, and nuanced creative writing. | Maintains top-tier MMLU scores with a massive context window and faster inference. | Still a premium-priced model; can be overly cautious on ambiguous prompts. | Proprietary (Closed) |
| Grok-4.5 | Real-time market intelligence, social media trend analysis, dynamic event monitoring. | Unparalleled speed in accessing and synthesizing live web and social data with more nuance. | Output quality is dependent on real-time data; can inherit a distinct, informal tone. | Proprietary (Closed) |
| Gemini 2.5 Pro | Analyzing long videos, large codebases, and mixed media (text, image, audio). | Groundbreaking 1 million token context window, strong multimodal performance. | Peak performance is often tied to integration within the Google Cloud ecosystem. | Proprietary (Closed) |
| Llama 3.1 405B | The best all-around open-source model for general-purpose reasoning at massive scale. | State-of-the-art MMLU and HumanEval scores for open models, huge context window. | Requires massive GPU infrastructure to run; complex to deploy and manage. | Open-Source |
| Llama 3.1 70B (Instruct) | The most popular and well-supported large open-source model for general use. | Excellent balance of power and accessibility, vast community and fine-tuning support. | Requires significant GPU resources, though less than the 405B version. | Open-Source |
| Mistral “Large 2” | High-performance reasoning and efficiency, directly competing with top proprietary models. | Sets new records for performance-per-watt; excels in coding and multilingual tasks. | A premium proprietary offering; not open-source like their other models. | Proprietary (Closed) |
| Mixtral 8x22B | High-speed, efficient processing of large context tasks due to its MoE architecture. | Excellent performance-to-cost ratio, strong in coding and math reasoning. | MoE models can be more complex to deploy and fine-tune than dense models. | Open-Source |
| Qwen2 72B | Elite multilingual performance, excelling in a vast range of global languages. | State-of-the-art performance on multilingual benchmarks (especially non-English). | Smaller developer community and fewer third-party integrations than Llama. | Open-Source |
| DeepSeek-V2 | Secure, elite-level code generation, debugging, and software development. | Outperforms most proprietary models on coding benchmarks like HumanEval. | Highly specialized for code; not a leader for creative or general-purpose tasks. | Open-Source |
| Microsoft Phi-4 | Powerful reasoning and language understanding in a small, efficient on-device package. | Outperforms much larger models on benchmarks for its size (“SLM”). | Limited context window and knowledge base compared to massive cloud-based models. | Open-Source |
| Gemma 2 27B | High-performance research and development on more accessible hardware. | Google’s official open model, offering strong general capabilities and tuning support. | Less powerful than the largest open models like Llama 3.1 405B for complex tasks. | Open-Source |
| GLM-4 9B | Strong bilingual (English/Chinese) performance and autonomous tool integration. | Excels at function calling and agentic workflows in an open-source framework. | Less optimized for other global languages compared to competitors like Qwen2. | Open-Source |
| Cohere Command R+ | Enterprise-grade Retrieval-Augmented Generation (RAG), tool use, and multilingual tasks. | State-of-the-art on RAG benchmarks, strong performance in 10 key languages. | Not fully open-source; usage restrictions apply, creating some vendor lock-in. | Proprietary (Closed) |
| Snowflake Arctic | Enterprise-focused AI for SQL generation, data analysis, and business intelligence. | Uniquely efficient MoE architecture designed for enterprise-grade workloads. | Primarily optimized for tasks within the Snowflake data cloud ecosystem. | Open-Source |
| Perplexity Online LLM | Factual research, providing answers with direct, verifiable citations and sources. | Excels at conversational search and reducing “hallucinations” by citing sources. | Less suited for creative writing or open-ended generative tasks. | Proprietary (Closed) |
| Jamba | A hybrid Mamba-Transformer model offering a massive context window and high efficiency. | A novel architecture (SSM) that processes sequences differently from Transformers. | Newer architecture, less mature ecosystem and understanding of its limitations. | Open-Source |
| xAI’s Grok-1.5 | Enhanced reasoning and a long context window for complex problem-solving. | Significant improvements over Grok-1 in coding and math benchmarks. | Still primarily focused on real-time data; less of a general-purpose tool. | Proprietary (Closed) |

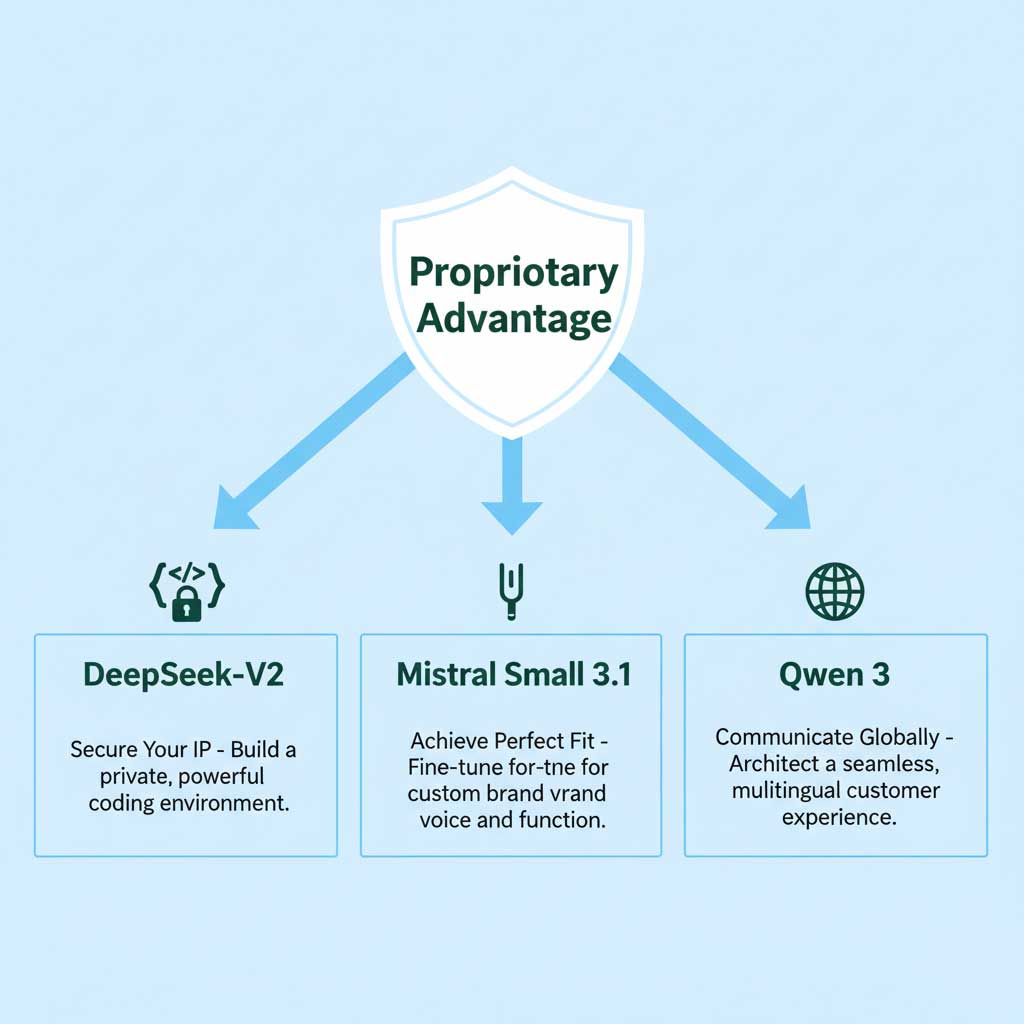

Mastering the Open-Source Vanguard: Building Your Internal AI Powerhouse

The open-source path is for leaders who view AI not as a service to be consumed, but as a core competency to be built. It is the strategy for creating a proprietary, long-term competitive advantage that cannot be easily replicated by competitors who are simply using the same off-the-shelf tools.

DeepSeek-V2: The Bedrock of Secure and Sovereign Software Development

For any technology-driven company, its codebase is its crown jewel—its most valuable and most vulnerable asset. Sending proprietary code to a third-party API for analysis or generation introduces an inherent and often unacceptable level of risk. DeepSeek-V2 is the definitive strategic answer to this critical challenge.

By self-hosting this elite, open-source coding model, a company can build its own internal suite of developer tools—a “GitHub Copilot” that is just as powerful as any commercial offering but is completely private and secure. This allows developers to accelerate their workflow and innovate faster, without ever exposing a single line of valuable intellectual property to an external entity. For the CTO, DeepSeek-V2 is not just a productivity tool; it is a cornerstone of a modern enterprise AI security posture.

Mistral Small 3.1: The Catalyst for Efficient and Powerful Customization

Mistral Small 3.1 is a marvel of efficiency. It is a compact dense model that delivers strong performance for its size, which keeps it fast and cost-efficient to run on modest hardware. Mistral’s Mixtral family pursues efficiency differently, through a Mixture-of-Experts (MoE) architecture: instead of activating the entire model for every query, MoE routes each token to a small subset of “expert” parameters.
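To make the Mixture-of-Experts idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration only, not any vendor's implementation: the four toy "experts" and the hard-coded gate scores are assumptions, where a real model would use learned feed-forward blocks and a learned gating network.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([gate_scores[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Hypothetical toy experts standing in for expert feed-forward blocks
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
# Hypothetical gate scores; a real gate computes these from the input token
gate_scores = [0.1, 2.0, -1.0, 2.0]

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(selected, output)
```

Only two of the four experts run for this input, which is where the compute savings of an MoE model come from: total parameter count can grow while the work done per token stays roughly constant.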

This efficiency makes Mistral Small 3.1 the perfect foundation for fine-tuning—the process of taking a powerful generalist model and transforming it into a world-class specialist. By training Mistral Small 3.1 on your own unique data, you can create a marketing AI that writes in your perfect brand voice, a support AI that knows your product documentation inside and out, or a data analysis AI that understands the unique structure of your internal financial reports. This is how you move from using a generic, off-the-shelf AI to wielding a truly custom-built competitive weapon.

The Global Communicators: Qwen 3 and GLM-4.6

For multinational corporations, effective communication across linguistic, cultural, and technical barriers is a constant and complex challenge.

Qwen 3: The Architect of a Truly Global Customer Experience

Alibaba’s Qwen 3 is a powerhouse of multilingual communication, a testament to the power of training on truly global and diverse datasets. It excels not just at literal translation but at understanding the cultural context and idiomatic expressions that are essential for authentic connection. For a brand aiming to provide a seamless, localized, and culturally sensitive customer experience in dozens of countries, Qwen 3 is a powerful strategic enabler.

GLM-4.6: The Pragmatic Integrator for Complex, Tool-Driven Workflows

While also a strong multilingual performer, GLM-4.6 has earned a well-deserved reputation for its exceptional “tool use” and function-calling capabilities. It is remarkably adept at integrating with external software and APIs. For developers building complex applications that require the AI to interact with other systems—like retrieving data from a proprietary database, booking an appointment in a complex scheduling system, or executing a trade through a financial API—GLM-4.6 offers a reliable and powerful open-source foundation, particularly in English and Chinese markets.
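The application side of function calling can be sketched in a few lines. The example below assumes the model emits a JSON object with `name` and `arguments` fields; that shape, the tool names, and the stub functions are all hypothetical and are not GLM-4.6's actual wire format. The point is the pattern: the model proposes a call, and your code decides whether and how to execute it.

```python
import json


def get_account_balance(account_id):
    # Hypothetical stand-in for a proprietary database lookup
    balances = {"ACC-1": 1250.0, "ACC-2": 80.5}
    return {"account_id": account_id, "balance": balances.get(account_id)}


def book_appointment(date, time):
    # Hypothetical stand-in for a call into a scheduling system
    return {"status": "booked", "slot": f"{date} {time}"}


# Registry of the only functions the model is allowed to trigger
TOOLS = {
    "get_account_balance": get_account_balance,
    "book_appointment": book_appointment,
}


def dispatch_tool_call(model_output):
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    return TOOLS[name](**call["arguments"])


# Assumed example of what a model might emit for "book me in on March 1 at 2pm"
model_output = '{"name": "book_appointment", "arguments": {"date": "2025-03-01", "time": "14:00"}}'
result = dispatch_tool_call(model_output)
print(result)
```

Keeping an explicit registry means the model can never invoke anything you have not deliberately exposed, which matters when the tools touch financial or customer systems.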

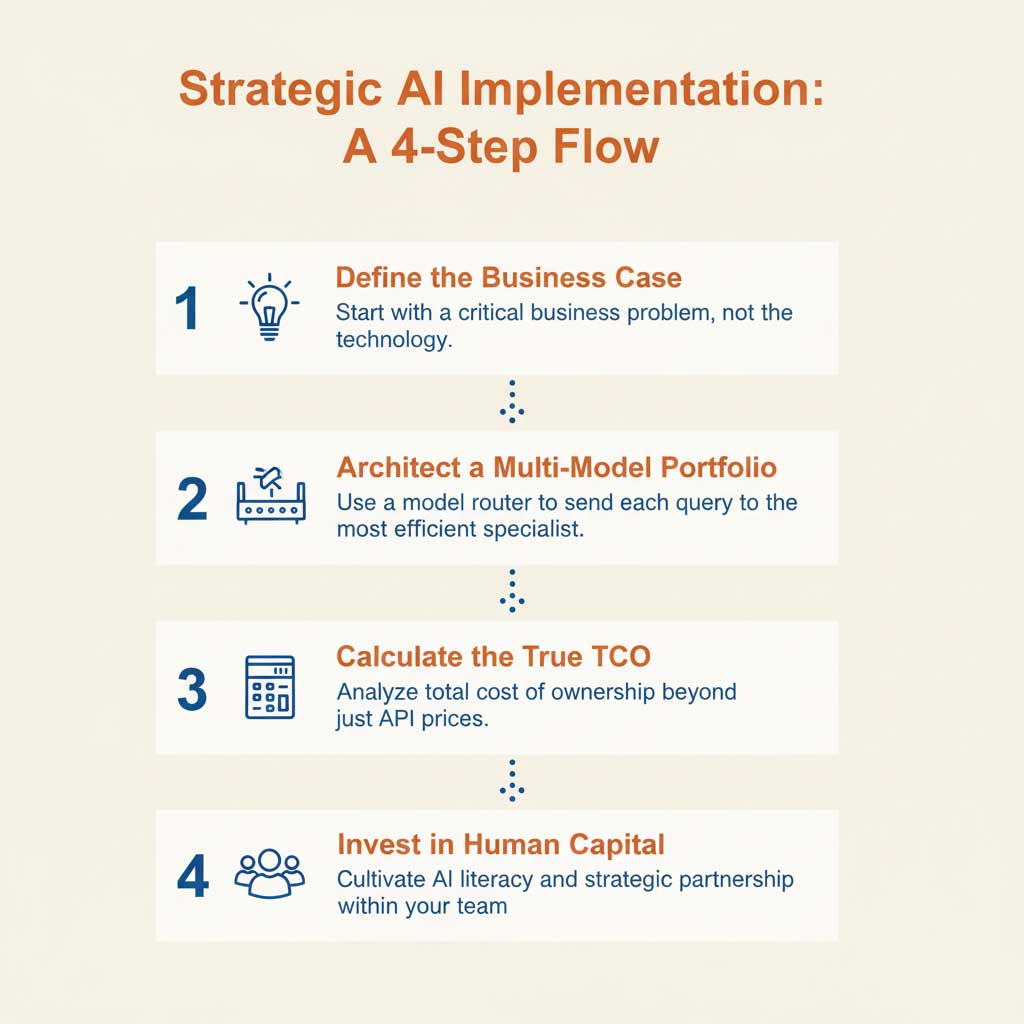

From Boardroom Strategy to Battlefield Execution: An Implementation Blueprint

A brilliant AI strategy is meaningless without flawless execution. This blueprint is designed to help you translate your AI vision into operational reality, avoiding the common pitfalls that can derail even the most promising initiatives.

Step 1: Defining the Business Case (Not the Technology)

The single most common mistake in AI adoption is starting with the technology. The conversation should never begin with “What can we do with GPT-5?” It must begin with “What is our most critical business problem, and can AI help us solve it?” Are you trying to reduce customer service response times by 30%? Increase lead conversion rates by 15%? Or mitigate the risk of a security breach by 50%? By defining the problem in clear, quantifiable, business-centric terms, the choice of the right model becomes infinitely clearer and easier to justify.

Step 2: Architecting Your Multi-Model Portfolio

The most sophisticated and cost-effective AI strategies do not rely on a single model. They orchestrate a portfolio of them, managed by a central “model router.” This intelligent dispatcher analyzes each incoming query and sends it to the most efficient specialist for the job.

- A simple, high-volume query (e.g., “What are your business hours?”) is handled by a fast, cheap model like Claude Sonnet 4.6.

- A complex coding question is routed to the specialist, DeepSeek-V2.

- A highly complex, open-ended strategic question (“Analyze these three market reports and suggest a new product direction”) is escalated to the powerhouse, GPT-5.

This portfolio approach ensures that you are always using the right tool for the job, dramatically optimizing for both cost and performance.
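A model router of this kind can start out very simple. The sketch below uses keyword heuristics to pick a tier; the hint lists, the length threshold, and the model identifier strings are illustrative assumptions, and a production router would more likely use a small classifier plus cost and latency budgets.

```python
from dataclasses import dataclass


@dataclass
class Route:
    model: str   # hypothetical identifier for the target model
    reason: str  # why the router chose it, useful for logging


# Assumed keyword hints; tune these against your own traffic
CODE_HINTS = ("def ", "class ", "stack trace", "compile", "bug", "function", "sql")
STRATEGY_HINTS = ("analyze", "strategy", "market report", "recommend", "product direction")


def route_query(query: str) -> Route:
    """Send each query to the cheapest model tier that can plausibly handle it."""
    text = query.lower()
    if any(hint in text for hint in CODE_HINTS):
        return Route("deepseek-v2", "coding question")
    if any(hint in text for hint in STRATEGY_HINTS) or len(text.split()) > 40:
        return Route("gpt-5", "complex, open-ended request")
    return Route("claude-sonnet-4.6", "simple, high-volume query")


for q in (
    "What are your business hours?",
    "Why does this function raise a TypeError?",
    "Analyze these three market reports and suggest a new product direction",
):
    route = route_query(q)
    print(f"{route.model:18} <- {q}")
```

The default branch deliberately falls through to the cheapest tier, so a misclassified query costs little; escalation to the expensive model has to be earned by an explicit signal.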

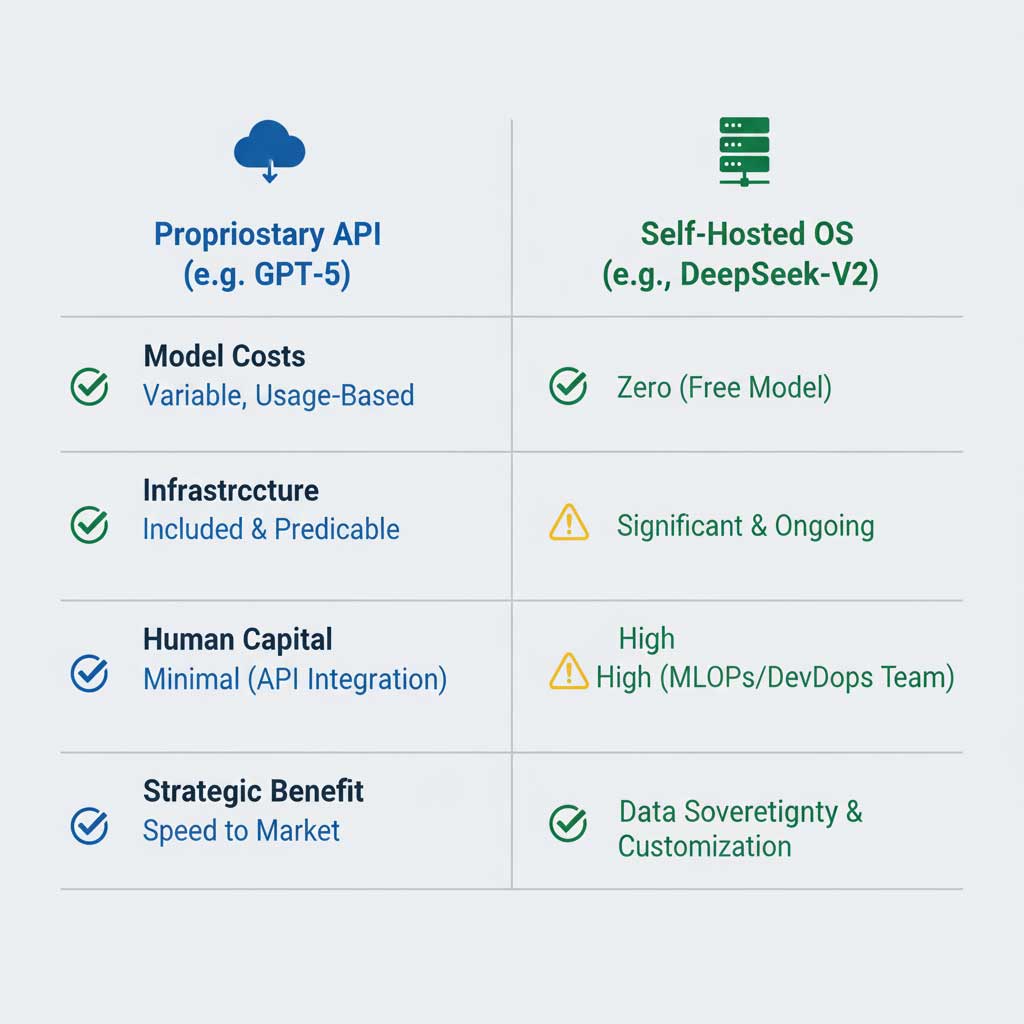

Step 3: Calculating the True Financial Picture (A Realistic TCO Analysis)

A simple API price comparison is dangerously misleading and can lead to disastrous budget overruns. A true financial analysis requires calculating the Total Cost of Ownership (TCO), especially when comparing proprietary APIs to self-hosted open-source models.

| Cost Component | Proprietary API (e.g., GPT-5) | Self-Hosted Open-Source (e.g., DeepSeek-V2) |
| --- | --- | --- |
| Direct Model Costs | Variable, usage-based (per token). Can be unpredictable and scale rapidly. | Zero. The model itself is free to download and use. |
| Infrastructure Costs | Included in the API price. Simple and predictable. | Significant and ongoing. Includes cloud GPU instances, storage, and networking costs. |
| Human Capital Costs | Minimal. Requires developers for API integration. | High. Requires a dedicated team of DevOps and MLOps engineers for deployment, maintenance, security, and scaling. |
| Fine-Tuning Costs | Limited, often restricted to proprietary services which can be expensive. | Significant. Requires data preparation, massive compute time for training, and specialized engineering expertise. |
| Strategic Benefit | Unmatched speed to market and low maintenance overhead. | Complete data sovereignty, deep customization, no vendor lock-in, and significant long-term cost advantages at very high scale. |
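The comparison above can be turned into a rough calculation. The sketch below is a simplification under stated assumptions: API cost scales linearly with token volume, self-hosted cost is treated as fixed for a given capacity, and every number is a placeholder rather than a real price. Substitute your own quotes and salaries before drawing conclusions.

```python
def api_monthly_cost(tokens_per_month, price_per_million_tokens):
    """Usage-based spend for a proprietary API."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def self_hosted_monthly_cost(gpu_hours, gpu_hourly_rate, engineers, engineer_monthly_cost):
    """Roughly fixed spend: infrastructure plus human capital."""
    return gpu_hours * gpu_hourly_rate + engineers * engineer_monthly_cost


def break_even_tokens(fixed_monthly_cost, price_per_million_tokens):
    """Monthly token volume at which API spend equals the self-hosted cost."""
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


# Placeholder inputs, not real prices
PRICE_PER_MILLION = 10.0
hosted = self_hosted_monthly_cost(
    gpu_hours=720, gpu_hourly_rate=4.0, engineers=2, engineer_monthly_cost=15_000.0
)
api = api_monthly_cost(tokens_per_month=500_000_000, price_per_million_tokens=PRICE_PER_MILLION)
threshold = break_even_tokens(hosted, PRICE_PER_MILLION)

print(f"API: ${api:,.0f}/month, self-hosted: ${hosted:,.0f}/month")
print(f"Break-even volume: {threshold:,.0f} tokens/month")
```

With these placeholder inputs the API is far cheaper at 500 million tokens a month, and self-hosting only wins once volume passes the break-even threshold. Human capital dominates the self-hosted figure, which is why an API price comparison alone is misleading.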

Step 4: The Human Capital Investment: From Prompting to Strategic Partnership

Your most critical and most valuable investment is not in silicon; it is in your people. The goal is to evolve your workforce from passive users of software to active partners with intelligence. This requires a dedicated, ongoing program to cultivate a culture of AI literacy, experimentation, and strategic thinking throughout the organization. This investment in your team’s capabilities will pay higher dividends than any single piece of technology.

Final Verdict: Making Your High-Stakes AI Decision with Confidence

The journey to select the right AI model is a defining moment for any modern leader. It is a decision that requires a thoughtful balance of short-term needs and long-term vision, of tactical execution and strategic ambition.

- For the CTO, your mandate is to build a secure, scalable, and future-proof technology infrastructure. Your immediate focus should be on a deep evaluation of DeepSeek-V2 to fortify your intellectual property and Mistral Small 3.1 as a foundation for building custom, proprietary AI tools that will become a durable competitive advantage.

- For the CMO, your mission is to create an unforgettable brand and a superior customer experience. Your arsenal includes the real-time insights of Grok-4 for market agility and the high-fidelity content creation of Claude Opus 4.1 for brand storytelling. Your strategic imperative is to leverage the unparalleled cost-efficiency of Claude Sonnet 4.6 to scale personalized, intelligent interactions across every touchpoint of your customer journey.

- For the CEO, your responsibility is to drive growth and create lasting shareholder value. Your focus must be on how autonomous systems, powered by the formidable reasoning engine of GPT-5, can fundamentally redesign your operating model to create a step-change in efficiency, innovation, and market leadership.

The most successful organizations will not choose a single “best” model. They will thoughtfully assemble a portfolio of intelligent assets. They will invest deeply in their people. And they will have the courage to build the future, not just react to it.

Frequently Asked Questions

When does the ROI of fine-tuning an open-source model like Mistral Small 3.1 exceed the cost of a proprietary API like GPT-5?

The ROI inflection point typically occurs when you have a high-volume, repetitive, and highly specific task. For example, if you process 100,000 customer support tickets per month that fall into 20 specific categories, fine-tuning Mistral Small 3.1 on this data will likely yield a faster, more accurate, and vastly cheaper solution than making 100,000 complex API calls to GPT-5. The upfront investment in fine-tuning pays off through lower operational costs and higher performance on that specific task.
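One way to locate that inflection point is a payback calculation. The sketch below assumes a one-time fine-tuning cost and a stable per-ticket cost on each side; the dollar figures are placeholders chosen only to show the arithmetic, not measured prices for either model.

```python
import math


def months_to_break_even(upfront_cost, api_cost_per_request, hosted_cost_per_request, requests_per_month):
    """Months until per-request savings repay the upfront fine-tuning investment.

    Returns None when the fine-tuned model is not cheaper per request,
    in which case the investment never pays back on cost alone.
    """
    monthly_saving = (api_cost_per_request - hosted_cost_per_request) * requests_per_month
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_cost / monthly_saving)


# Placeholder figures for the 100,000-tickets-per-month scenario
payback = months_to_break_even(
    upfront_cost=18_000.0,         # assumed data prep, training compute, engineering time
    api_cost_per_request=0.05,     # assumed API cost per ticket
    hosted_cost_per_request=0.01,  # assumed self-hosted cost per ticket
    requests_per_month=100_000,
)
print(f"Payback period: {payback} months")
```

If the payback period is longer than the time before you expect to retrain or replace the model, the proprietary API is likely still the better choice for that workload.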

What are the hidden risks of relying solely on a proprietary model like Claude Opus 4.1 for a core business function?

The primary risks are vendor lock-in and platform dependency. If the provider changes its API, raises prices significantly, or alters its safety policies in a way that affects your output, you have little recourse. By relying 100% on a proprietary model, you are tying a core part of your business to another company’s roadmap and priorities. Diversifying with open-source alternatives like Qwen 3 or DeepSeek-V2 provides a strategic hedge against this risk.

How can a non-technical marketing team leverage a highly technical model like DeepSeek-V2?

Indirectly, but powerfully. The marketing team would not use DeepSeek-V2 directly. Instead, they would collaborate with their internal development team. The developers can use DeepSeek-V2 to build custom, secure internal tools for the marketing team—for example, a proprietary script for analyzing vast amounts of SEO data or a tool for generating personalized email templates at scale, all without sending sensitive customer data to a third-party API.

Between Grok-4 and Gemini 2.5 Pro, which is better for deep competitive analysis?

They are best used together as a two-step process. Start with Grok-4 to get a real-time, unfiltered view of what your competitors are saying and what the public’s immediate reaction is on social platforms. Then, use Gemini 2.5 Pro to perform a deeper, more structured analysis by connecting to tools that can analyze their website’s SEO performance over time, review their video ad campaigns on YouTube, and cross-reference this with historical market data from your own internal reports.

Which AI chatbots are free?

Many of the most popular AI chatbots offer free versions, though some may have usage limits or advanced features reserved for paid subscribers. Free options include Google Gemini, Microsoft Copilot, and the free tier of ChatGPT. Other platforms like Claude, QuillBot, and Julius AI also provide free access to their services.

Which one is the best AI chatbot?

The “best” AI chatbot really depends on what you need it for. ChatGPT is often considered the best all-around for its detailed answers and ability to handle complex tasks. Google Gemini is excellent for productivity and its seamless integration with other Google services. For content creation and a more conversational feel, many users prefer Claude, while Microsoft Copilot is the top choice for those who heavily use Microsoft software.

Which chatbot is better than ChatGPT?

While ChatGPT is a powerful leader in the field, other chatbots can outperform it in specific areas. Google Gemini, for example, often has more up-to-date information due to its direct access to the internet. Claude is recognized for its strong safety features and more natural conversational style. The best choice is a matter of personal preference and the specific task at hand.

Which AI is most accurate?

ChatGPT is frequently cited as one of the most accurate AI chatbots, especially for providing detailed and nuanced answers. However, accuracy can fluctuate depending on the complexity of the question and the data the AI was trained on.

Which is better: ChatGPT or Meta AI?

Both ChatGPT and Meta AI are highly capable and advanced language models. ChatGPT is widely regarded as a top-performing chatbot for a broad range of applications. Meta AI is also a very strong competitor, and determining which is “better” often comes down to the specific use case and user preference.

What AI is smarter than ChatGPT?

The concept of an AI being “smarter” is subjective. While ChatGPT is a benchmark for performance, competitors like Google’s Gemini and Anthropic’s Claude are constantly evolving and may perform better on certain tasks. The field is advancing so rapidly that the “smartest” AI is a constantly moving target.

Which AI does Elon Musk use?

Elon Musk’s company, xAI, has developed its own AI chatbot named Grok. It is designed to provide real-time information and has been integrated into the social media platform X (formerly Twitter) and Tesla vehicles.

What does an AI chatbot do?

An AI chatbot is a computer program designed to simulate human conversation through text or voice. Using natural language processing (NLP), these bots can understand user questions and provide relevant answers. They are used for a variety of tasks, including answering questions, generating content like emails and articles, providing customer support, and personalizing user experiences.

What can I do with an AI girlfriend?

An AI girlfriend is a chatbot designed to offer companionship and simulated romantic interaction. Users engage with them for supportive conversations, to explore relationships in a virtual setting, and to have a sense of connection and emotional support. Some applications also offer more adult-oriented interactions like sexting.

How can you tell if you are talking to a chatbot?

You can often identify a chatbot by looking for certain signs. Bots tend to respond instantly, much faster than a person would type. They may also repeat phrases, give vague or generic answers to complex questions, and lack any personal experiences or memories. Unlike humans, chatbots are available 24/7. Sometimes, the easiest way is to simply ask if you are speaking to a bot.

Can AI chats be used against you?

Yes. Conversations with AI chatbots are not legally privileged. Chat logs can be obtained by law enforcement through legal means such as search warrants and could be used as evidence. Your discussions with an AI do not have the same confidentiality protections as a conversation with a lawyer or a doctor.

What is an example of a chatbot?

A famous early example is ELIZA, a chatbot created in the 1960s that simulated a psychotherapist. Today, some of the most advanced and well-known examples are ChatGPT, Google Gemini, and Microsoft Copilot.
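To make the ELIZA idea concrete, here is a minimal Python sketch of the pattern-matching approach such early chatbots relied on. The rules, the fallback reply, and the function name `eliza_reply` are hypothetical and chosen for illustration; the original ELIZA script was much larger and also swapped pronouns, which this sketch does not attempt.

```python
import re

# Hypothetical rules for illustration only: each pairs a pattern
# with a reply template that reuses part of the user's own words.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def eliza_reply(message: str) -> str:
    """Return the reply for the first matching rule, or a generic fallback."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1))
    return FALLBACK


print(eliza_reply("I feel tired."))
print(eliza_reply("Hello"))
```

Nothing here understands language. The program reflects fragments of the input back at the user, which is why modern LLM-based assistants such as ChatGPT represent such a large step beyond this style of bot.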

Why would anyone want an AI girlfriend?

People seek out AI girlfriends for a variety of reasons, including companionship to combat loneliness, emotional support from a non-judgmental source, and a safe space to practice social and communication skills. For others, it is a form of entertainment and a way to explore relationships without the complexities of real-life human interaction.

What is the 30% rule in AI?

The “30% rule” is an informal rule of thumb, not an established standard. It suggests that for many professional roles, AI can automate about 30% of the tasks, while the remaining 70% still requires human skills like critical thinking, judgment, and creativity. It highlights the idea that AI is a tool to augment human capabilities, not to replace them entirely.

What are the 4 types of AI?

Artificial intelligence is commonly divided into four types based on level of capability. The first two exist today, while the last two remain theoretical:

Reactive Machines: The most basic form of AI. It can react to current situations but has no memory and cannot learn from past actions. IBM’s chess-playing computer Deep Blue is a classic example.

Limited Memory: This AI can store past data temporarily to inform future decisions. Most of today’s AI, including many chatbots and self-driving cars, falls into this category.

Theory of Mind: A future, more advanced type of AI that would be able to understand human emotions, thoughts, and intentions. This level of AI is still in the research and development phase.

Self-Awareness: This is the most advanced and theoretical stage of AI, representing a machine that would possess its own consciousness, feelings, and self-awareness.

What is the most common type of AI used today?

The most common type of AI in use today is Narrow AI, also known as Weak AI. Narrow AI is designed and trained for a specific task or a limited set of related tasks. Examples include voice assistants like Siri, online recommendation algorithms, and customer service chatbots.

Who are the Big 4 in AI?

There is no official list, but the “Big Four” in AI usually refers to the major tech companies leading AI research and development: Microsoft, Alphabet (Google), Amazon, and Meta (formerly Facebook). Nvidia is frequently added to make a “Big Five” because it builds the hardware that powers most advanced AI systems.

What type of AI is ChatGPT?

ChatGPT is a form of generative AI and is classified as Narrow AI because it is focused on language-related tasks. It is built on a large language model (LLM) and uses deep learning to generate human-like text. It also fits into the Limited Memory category, as it can remember previous parts of the current conversation to provide contextually relevant responses.
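The Limited Memory idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of how ChatGPT is implemented: the class name `ConversationMemory`, the `max_turns` parameter, and the plain-text context format are all hypothetical. It shows only the general principle that a bounded window of recent turns is passed along with each new message.

```python
from collections import deque


class ConversationMemory:
    """Keep only the most recent turns of a single conversation."""

    def __init__(self, max_turns: int = 3):
        # A deque with maxlen silently drops the oldest turn when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def context(self) -> str:
        """Render the remembered turns as the text a model would receive."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


memory = ConversationMemory(max_turns=2)
memory.add("user", "My name is Ada.")
memory.add("assistant", "Nice to meet you, Ada.")
memory.add("user", "What is my name?")
print(memory.context())
```

With `max_turns=2`, the first message has already been dropped by the time the third arrives. This mirrors why a chatbot can lose track of details from early in a very long conversation.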

What is the simplest form of AI?

The simplest form of AI is the reactive machine. These systems operate purely on current data and have no memory of the past. Simple examples include spam filters that classify emails based on keywords and basic customer service bots that answer predefined questions.
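A keyword-based spam filter of the kind mentioned above can be sketched as follows. The keyword list, the threshold, and the function name `is_spam` are assumptions made for illustration. Production spam filters typically rely on statistical or machine-learning methods, not a fixed word list.

```python
# Hypothetical keyword list for illustration only.
SPAM_KEYWORDS = {"winner", "free", "prize", "urgent"}


def is_spam(email_text: str, threshold: int = 2) -> bool:
    """Flag an email when it contains at least `threshold` distinct spam keywords.

    The function keeps no state between calls, so the same input always
    produces the same output, which is the defining trait of a reactive machine.
    """
    words = {word.strip(".,!?:;").lower() for word in email_text.split()}
    return len(words & SPAM_KEYWORDS) >= threshold


print(is_spam("URGENT: you are a winner, claim your FREE prize!"))
print(is_spam("Lunch is free tomorrow"))
```

Because the filter never updates its keyword list from the emails it sees, it cannot improve with experience. That lack of memory is what separates it from the Limited Memory systems described earlier.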

Which is the most used AI tool?

Exact numbers are hard to track across all tools, but ChatGPT is widely considered one of the most used and fastest-growing AI applications in history. It reached an estimated 100 million monthly users within about two months of its launch in November 2022. More generally, generative AI tools for creating text and images are among the most popular AI applications today.