Voice is no longer a futuristic trend; it’s a fundamental part of how we interact with the digital world. With over 150 million voice assistant users in the U.S. alone, brands that can’t listen, understand, and respond in every customer’s language are already being left behind. In this high-stakes environment, a technological breakthrough has just redrawn the map for global communication.

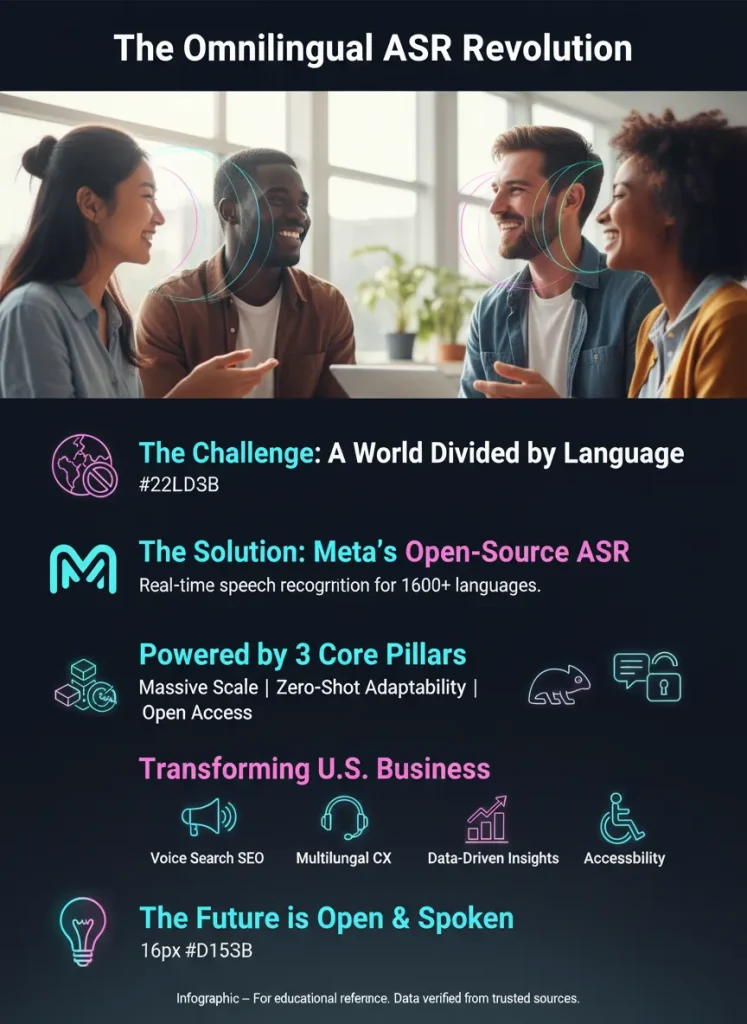

The announcement comes from Meta AI’s FAIR team: the release of Omnilingual ASR, a suite of powerful open-source ASR models that shatters previous language barriers. This is not just an incremental update. It is a foundational shift in multilingual speech recognition. This article breaks down what this technology is, why its open-source nature is a game-changer for marketers, and how U.S. businesses can leverage it to dominate in an AI-driven landscape defined by conversational search and global audiences.

What is Omnilingual ASR? Decoding Meta’s Leap into Universal Transcription

At its core, Omnilingual ASR represents a new standard for automatic speech recognition (ASR), the technology that converts spoken words into text. But unlike previous systems that focused on a few dozen commercially valuable languages, Meta AI has taken a radically inclusive approach, building a system that can understand a staggering number of the world’s voices.

A New Standard for Automatic Speech Recognition (ASR)

Omnilingual ASR is Meta’s suite of AI models designed to provide state-of-the-art speech-to-text capabilities for over 1,600 languages. Its primary mission is to include hundreds of previously unsupported, low-resource languages, effectively giving a digital voice to communities that have been overlooked by mainstream technology. This isn’t just about transcription; it’s about accessibility and equity on a global scale.

The Three Pillars of Its Power: Scale, Adaptability, and Access

The incredible power of Omnilingual ASR rests on three key innovations that work together to create a system that is robust, flexible, and accessible to all.

1. Scale (The AllASR Corpus)

The models were trained on the massive AllASR corpus, a dataset containing over 120,000 hours of labeled speech. To build this diverse and comprehensive corpus, Meta AI collaborated with partners like Mozilla Common Voice and Lanfrica, ensuring the data reflects real-world speech patterns and includes a vast array of languages that are often underrepresented in training data.

2. Adaptability (Zero-Shot and In-Context Learning)

Perhaps the most distinctive feature is what Meta calls zero-shot language support. Given just a few paired audio-text examples at inference time, the model can transcribe a language it was never trained on, a process known as in-context learning. It does not require a full, costly retraining cycle. This adaptability makes it possible to extend support to over 5,400 languages, significantly narrowing the digital divide for countless communities.

3. Access (The Apache 2.0 License)

Meta has released these powerful AI speech models under the Apache 2.0 license. This is a critical detail for businesses, as it means the technology can be used commercially, modified, and redistributed without licensing fees, provided the license’s attribution and notice terms are followed. This open approach directly challenges the expensive, pay-per-use API models of many competitors, democratizing access to elite automatic speech recognition.

The State of Voice in 2025: Industry Trends Demanding a Multilingual Solution

The launch of Omnilingual ASR is perfectly timed. The digital marketing and search landscape is rapidly evolving, with voice technology and conversational AI moving from the periphery to the very center of user engagement strategies. Understanding these trends reveals why a powerful, multilingual ASR solution is no longer a luxury but a necessity.

The Dominance of Voice Search and Conversational AI

Voice technology is now a mature and dominant interface. Recent industry data paints a clear picture of a voice-first world:

- By 2025, an estimated 153.5 million people in the U.S. will use voice assistants regularly.

- Over 70% of voice searches are now in natural, conversational language, a significant shift from the short, fragmented keywords used in traditional text search.

- A staggering 81% of brands that have implemented conversational AI report significant improvements in customer satisfaction and engagement.

This data underscores a fundamental change in user behavior. Customers expect to speak to brands naturally, ask complex questions, and receive instant, accurate responses. Brands that cannot facilitate this will struggle to remain relevant.

The Impact of Google Gemini Search Updates on SEO

This shift is further amplified by Google’s own evolution. The Google Gemini search updates have pushed the search engine’s capabilities far beyond simple keyword matching. Gemini is designed to understand context, nuance, and user intent on a much deeper level, making it exceptionally skilled at interpreting the long-tail, question-based queries common in voice search.

For marketers, this means that successful voice search optimization now depends on creating content that directly and thoroughly answers users’ spoken questions. A tool like Omnilingual ASR becomes essential for this, as it allows businesses to analyze real-world voice queries from diverse audiences to inform a more effective and helpful content strategy.

Omnilingual ASR vs. The Competition: A Marketer’s Guide to the Top ASR Platforms

To fully appreciate the strategic advantage offered by Omnilingual ASR, it’s crucial to see how it stacks up against other leading platforms. For marketers and businesses, the choice of an ASR system depends on factors like language reach, cost, and flexibility. Here’s a clear comparison:

| Feature | Meta Omnilingual ASR | Google USM (Universal Speech Model) | OpenAI Whisper |
| --- | --- | --- | --- |
| Language Support | 1,600+ (Extendable to 5,400+ via zero-shot) | ~300+ languages | ~99 languages |
| Primary Strength | Unmatched language breadth & low-resource language support | High accuracy & deep integration with Google Cloud | Excellent robustness for English transcription |
| Licensing Model | Apache 2.0 (Free for commercial use) | Proprietary (API-based, usage costs) | Open Source (MIT License) |
| Ideal for Marketers | Global campaigns, multilingual CX, data sovereignty, custom applications | Businesses invested in the Google ecosystem, high-volume standard languages | Content creators, podcasters, journalists needing high-quality English transcripts |
| Key Innovation | Zero-shot in-context learning | Massive scale training on 12M hours of data | Robustness on diverse and noisy audio |

While Google’s models offer seamless integration within its cloud ecosystem and Whisper is a go-to for high-quality English transcription, neither can compete with the sheer scale and adaptability of Meta AI’s offering. For any U.S. business with ambitions of reaching diverse domestic or global audiences, Omnilingual ASR provides an unparalleled advantage.

Practical Strategies: Integrating Meta’s ASR into Your U.S. Business Workflows

Understanding the technology is one thing; deploying it for a competitive advantage is another. Here are actionable strategies for integrating Meta AI’s speech recognition into U.S. business workflows to drive tangible results in SEO, customer experience, and marketing intelligence.

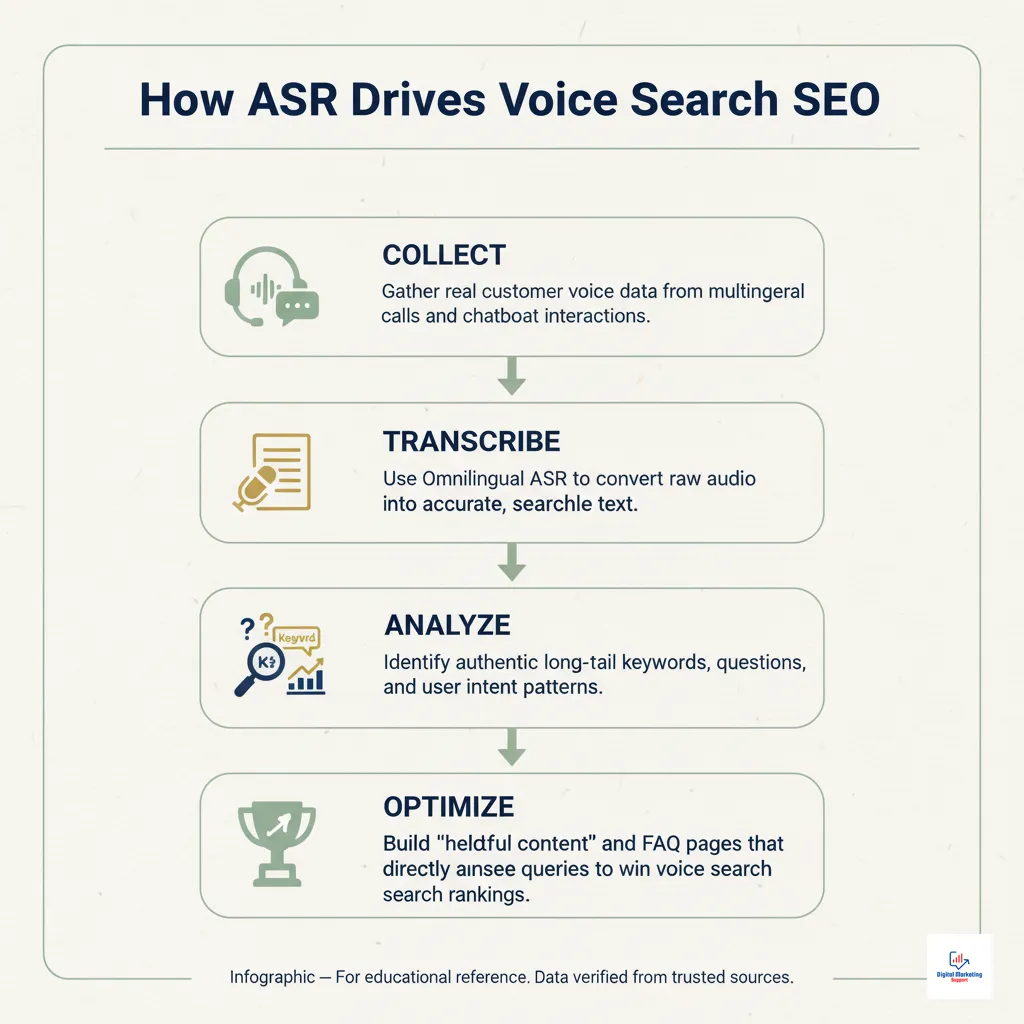

Future-Proofing SEO: Winning at Multilingual Voice Search

A powerful strategy is to use Omnilingual ASR to transcribe customer service calls and chatbot logs, particularly from non-English speaking U.S. audiences. This process provides a goldmine of authentic, long-tail keywords and conversational queries that reflect true user intent.

This approach allows businesses to build highly targeted content, FAQ pages, and knowledge bases that directly answer the specific questions their audience is asking. This aligns perfectly with Google’s “helpful content” standards, boosting your voice search optimization efforts and helping you capture high-intent traffic that competitors are missing.
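
As a rough illustration of the transcript-mining idea, the sketch below counts question-style sentences across a set of transcripts. It assumes the transcripts already exist as plain text (produced by whatever ASR system is in use); the function names and the English-only list of question starters are hypothetical choices for this example, and other languages would need their own equivalents.

```python
import re
from collections import Counter

# Assumption: an English-only starter list, for illustration.
# Other languages would need their own question words.
QUESTION_STARTERS = ("how", "what", "where", "when", "why", "can", "does", "do", "is", "which")


def extract_questions(transcript: str) -> list[str]:
    """Return the normalized sentences in a transcript that look like questions."""
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.?!])\s+", transcript.strip())
    questions = []
    for sentence in sentences:
        # Drop punctuation (keeping apostrophes) and lowercase for counting.
        normalized = re.sub(r"[^\w\s']", "", sentence).lower().strip()
        if not normalized:
            continue
        first_word = normalized.split()[0]
        if sentence.endswith("?") or first_word in QUESTION_STARTERS:
            questions.append(normalized)
    return questions


def top_queries(transcripts: list[str], n: int = 5) -> list[tuple[str, int]]:
    """Count question-style sentences across transcripts, most frequent first."""
    counts = Counter()
    for transcript in transcripts:
        counts.update(extract_questions(transcript))
    return counts.most_common(n)
```

The most frequent questions returned by `top_queries` are natural candidates for FAQ entries and long-tail content topics. A production version would likely group near-duplicate phrasings rather than counting exact matches.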

Revolutionizing Customer Experience with AI-Powered Support

Businesses can integrate the open-source ASR models directly into their call center software or website’s conversational AI platform. This enables immediate and effective customer support automation in dozens of languages spoken across the U.S.

This provides instant, real-time speech recognition and support. It dramatically reduces customer wait times, improves first-contact resolution rates, and enhances overall satisfaction. With over 20% of the U.S. population speaking a language other than English at home, this capability is a powerful differentiator.

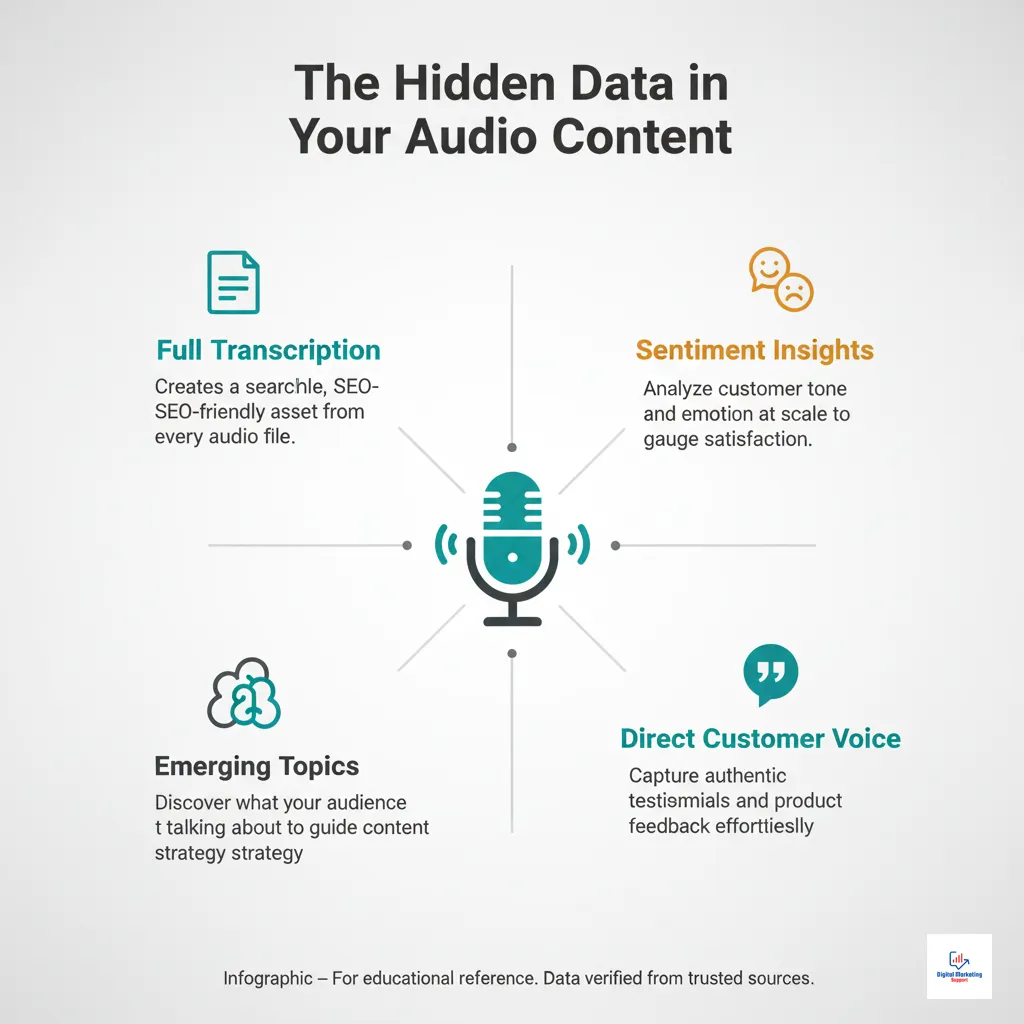

Data-Driven Marketing: Unlocking Insights and Accessibility

An effective workflow is to automatically transcribe all your video and audio content, including webinars, podcasts, and social media videos, into multiple languages using Omnilingual ASR.

First, this significantly boosts your multilingual content accessibility, strengthening your brand’s reputation and helping fulfill ADA compliance. Second, it transforms your unstructured audio content into a rich, searchable text database. This text can be analyzed for sentiment, trending topics, and customer pain points, fueling a more effective data-driven SEO and content strategy.
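
To make the "searchable text database" idea concrete, here is a minimal sketch of an inverted index over transcripts. It assumes transcripts are available as plain text keyed by a content ID; the IDs and function names are hypothetical, and a real deployment would more likely use an existing search engine or database.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def build_index(transcripts: dict[str, str]) -> dict[str, set[str]]:
    """Map each word to the set of transcript IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in transcripts.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return IDs of transcripts that contain every word in the query."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    results = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        results &= index.get(token, set())
    return results
```

With an index like this, a query such as `search(index, "shipping pricing")` returns only the recordings in which both words were spoken, which is the starting point for topic and pain-point analysis.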

Innovating in Voice Commerce and Hyper-Targeted Advertising

Brands can develop voice-enabled shopping assistants or create localized audio ads for specific linguistic communities within the U.S. market.

Voice commerce is projected to grow rapidly. Offering a seamless, voice-driven purchasing experience in a user’s native language can dramatically increase conversion rates. The zero-shot language support of Omnilingual ASR allows for rapid A/B testing and deployment of campaigns for new and emerging audiences without significant investment.

Industry Use Cases: Real-World Examples of Omnilingual ASR Adoption

The transformative potential of this technology becomes even clearer when applied to specific industries. These illustrative scenarios show how Omnilingual ASR is not just a tool for tech giants but a practical solution for businesses and organizations across the U.S.

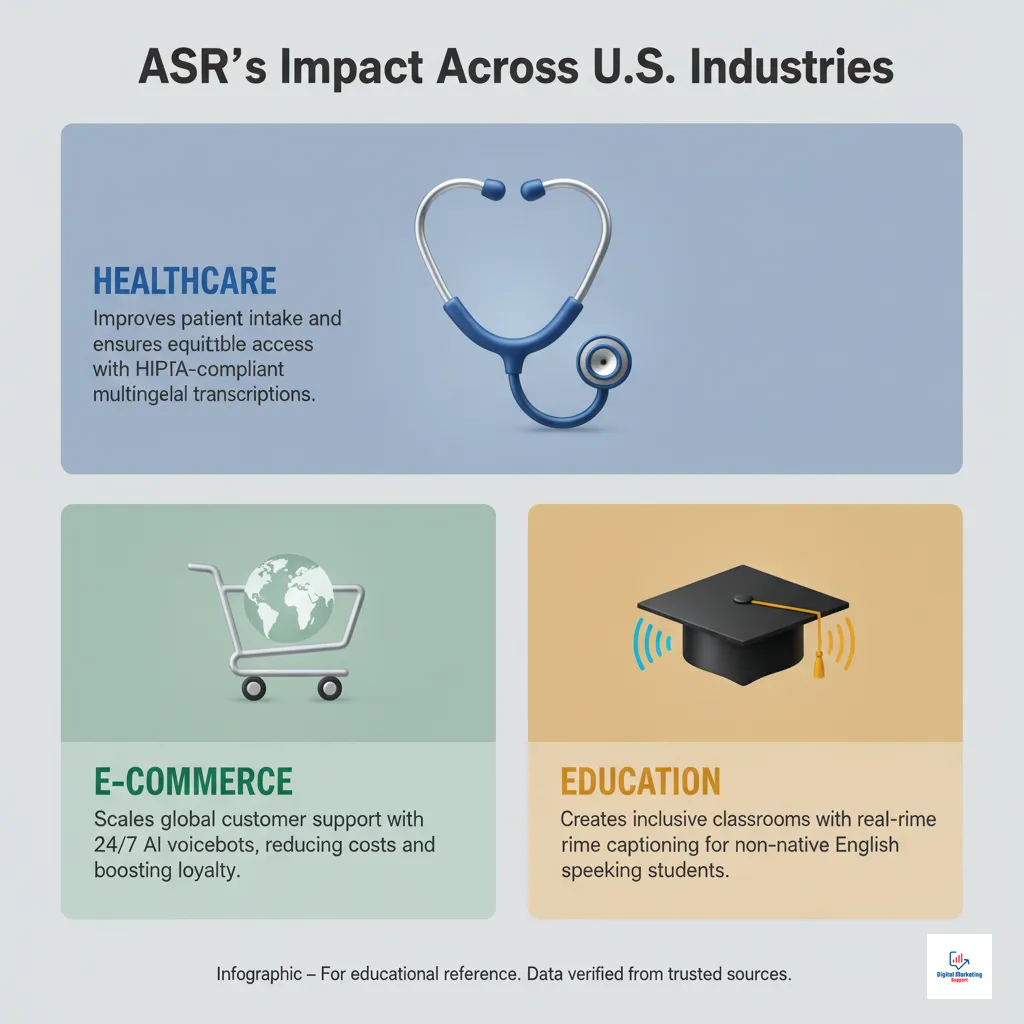

U.S. Healthcare: Enhancing Patient Intake and Accessibility

Consider a hospital system that integrates Omnilingual ASR into its patient portal and check-in kiosks. Patients with limited English proficiency can state their symptoms and medical history in their native language. Because the open-source models can run inside the hospital’s own HIPAA-compliant infrastructure, the system can provide accurate transcriptions for medical staff without sending audio to a third party, reducing communication errors, improving patient outcomes, and ensuring more equitable access to care.

Global E-commerce: Scaling Customer Support for U.S. Retailers

Imagine a U.S.-based online retailer with a growing global customer base using open-source ASR models to power its support voicebots. The retailer can now offer 24/7, real-time speech recognition support in over 40 languages without hiring a massive multilingual staff. This leads to a projected 30% reduction in support costs and a measurable increase in international customer loyalty.

Education Technology: Creating Inclusive Learning Environments

An EdTech firm providing online learning platforms to U.S. school districts can use the ASR technology to auto-caption live lectures. Consequently, students who are recent immigrants or speak English as a second language can get real-time transcriptions in their native tongue, dramatically improving their comprehension, engagement, and educational outcomes.

To help businesses plan their adoption, here is a practical roadmap.

Implementation Roadmap for Omnilingual ASR

| Business Goal | Application of Omnilingual ASR | Key Metrics to Track | Primary U.S. Industries |
| --- | --- | --- | --- |
| Improve Customer Satisfaction | Multilingual chatbot & call center automation | Customer Satisfaction Score (CSAT), First Contact Resolution (FCR) | E-commerce, Telecom, Finance |
| Increase SEO Traffic | Transcribe user queries to find long-tail keywords | Organic traffic from non-English keywords, featured snippet rankings | Media, B2B SaaS, Local Services |
| Enhance Brand Inclusivity | Auto-captioning for all video/audio content | Website accessibility score, user engagement time | Government, Healthcare, Education |
| Drive Innovation | Develop voice-controlled apps or services | New product adoption rate, user feedback on voice features | Tech, Automotive, Consumer Goods |

Summary & Key Takeaways: The Future is Open and Spoken

Meta AI’s Omnilingual ASR is far more than a technical achievement; it represents a strategic democratization of elite multilingual speech recognition. It empowers businesses of all sizes to build more inclusive, intelligent, and effective communication channels.

For U.S. businesses, the advantages are clear and immediate:

- Unprecedented Reach: The ability to finally connect with diverse domestic and global audiences at a scale that was previously unimaginable.

- Cost-Effective Innovation: The open-source Apache 2.0 license removes prohibitive financial barriers, allowing for custom solutions and full data ownership.

- Strategic Alignment: This technology directly addresses the most significant trends in marketing and search for 2025: conversational AI, voice search optimization, and universal content accessibility.

The release of these powerful AI speech models is a call to action. Marketers, developers, and business leaders should begin exploring how these open-source ASR models can be integrated into their workflows to build a deep and lasting competitive advantage in a world that is increasingly voice-first.

Frequently Asked Questions (FAQ)

What is Meta’s Omnilingual ASR?

Meta’s Omnilingual ASR is a suite of advanced, open-source ASR models designed for automatic speech recognition across more than 1,600 languages. It is released under a commercial-friendly Apache 2.0 license, making it free for businesses to use.

How does Omnilingual ASR differ from Google’s and OpenAI’s models?

The main difference is its massive scale and unique adaptability. Omnilingual ASR supports over 1,600 languages and features zero-shot language support to add new ones easily, while Google’s USM supports around 300+ and OpenAI’s Whisper supports about 99.

What makes zero-shot ASR so powerful for marketers?

Zero-shot ASR allows marketing teams to engage with niche, low-resource language communities without waiting for new models to be trained. This enables rapid global expansion, hyper-targeted campaigns, and more inclusive customer support automation.

Is Omnilingual ASR free to use for commercial purposes?

Yes. The entire suite of models is released under the Apache 2.0 license, which explicitly permits free use, modification, and distribution for both commercial and non-commercial projects.

What is the AllASR corpus?

The AllASR corpus is the massive and diverse dataset Meta AI used to train its multilingual speech recognition models. It includes over 120,000 hours of labeled speech across 1,690 languages from various sources.

Can Meta’s ASR help my brand reach multilingual U.S. audiences?

Absolutely. It can power real-time speech recognition for chatbots, call centers, and voice search optimization efforts tailored to the diverse languages spoken across the U.S., significantly enhancing customer experience and market penetration.

What are the top benefits of using open-source ASR in a marketing strategy?

The key benefits are cost-effectiveness (no recurring API fees), deep customization and flexibility, enhanced data privacy (models can be run on-premise), and the freedom to innovate without restrictive licensing.

How accurate are the Omnilingual ASR models?

The largest model achieves a character error rate (CER) below 10% on 78% of the 1,600+ supported languages, making it highly accurate and reliable for most practical business applications, from transcription to conversational AI.
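
For readers unfamiliar with the metric, character error rate is conventionally the character-level edit distance between the reference transcript and the model’s output, divided by the length of the reference. The sketch below shows that standard calculation, plus a helper for the "share of languages below a threshold" style of summary. It is a generic illustration, not Meta’s evaluation code, and the function names are hypothetical.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn one string into the other."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_char != hyp_char)
            insertion = current[j - 1] + 1
            deletion = previous[j] + 1
            current.append(min(substitution, insertion, deletion))
        previous = current
    return previous[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)


def share_below_threshold(cer_by_language: dict[str, float], threshold: float = 0.10) -> float:
    """Fraction of languages whose CER is strictly below the threshold."""
    below = sum(1 for cer in cer_by_language.values() if cer < threshold)
    return below / len(cer_by_language)
```

For example, transcribing "hello world" as "hallo world" is one wrong character out of eleven, a CER of about 9%. Running the same check on a sample of your own audio is a practical way to validate accuracy for the specific languages and accents you care about.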

Why is in-context learning important for voice search optimization?

In-context learning allows an AI to understand nuances, dialects, and new phrases rapidly. For voice search optimization, this means the system can better interpret conversational, long-tail queries from diverse users, leading to more relevant results and stronger SEO performance.

What industries are adopting open-source speech recognition fastest in the U.S.?

Technology, e-commerce, healthcare (for accessibility and patient intake), education technology (for transcription and language learning), and media (for automated captioning) are among the fastest-adopting industries for this powerful technology.

How can marketers integrate Meta’s ASR with existing AI tools?

Because the models are built on standard frameworks like PyTorch, they can be integrated via APIs into existing conversational AI platforms, marketing automation software, CRM systems, and data analytics pipelines.

Does Omnilingual ASR work in real time?

It can, depending on model size and hardware. The smaller models in the suite are the better fit for real-time speech recognition, making them suitable candidates for live applications like call center support, live captioning, and interactive voice response systems.