To reduce AI hallucinations about your brand, implement Generative Engine Optimization (GEO): secure high-authority citations and deploy detailed Schema.org markup. AI products like ChatGPT, Google Gemini, and Perplexity often use Retrieval-Augmented Generation (RAG) to pull facts from the web. By keeping your data accurate and consistent on the Google Knowledge Graph, Wikipedia, and LinkedIn, you provide a “source of truth” that lowers the chance of hallucinations and supports accurate brand representation in AI search results.


Imagine a potential investor or a high-value client asks ChatGPT a simple question: “Who is the CEO of [Your Company]?” or “Does [Your Company] have a history of data breaches?”

The answer they get isn’t a list of blue links they have to sift through. It is a definitive, confident paragraph generated by an AI. But what if that answer is wrong? What if the AI confidently names a CEO who retired three years ago? What if it fabricates a lawsuit that never happened?

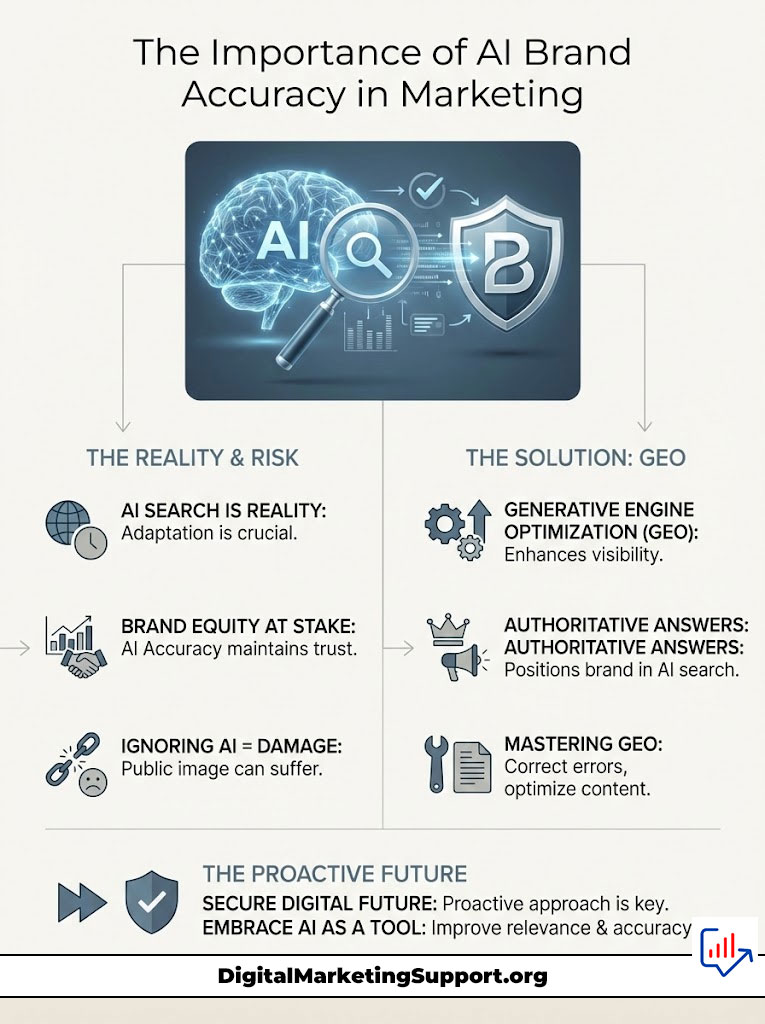

This is not a hypothetical nightmare. It is the current reality of AI Brand Accuracy. As search behavior shifts from typing keywords into Google to asking Large Language Models (LLMs) complex questions, brands are facing a new crisis. It is no longer enough to rank on the first page. You must ensure that the machine itself knows who you are.

We are moving from the age of Search Engine Optimization (SEO) to the era of Generative Engine Optimization (GEO). This guide will walk you through the technical and strategic roadmap to audit your digital footprint, correct AI search visibility errors, and immunize your brand against the volatility of AI-generated misinformation.

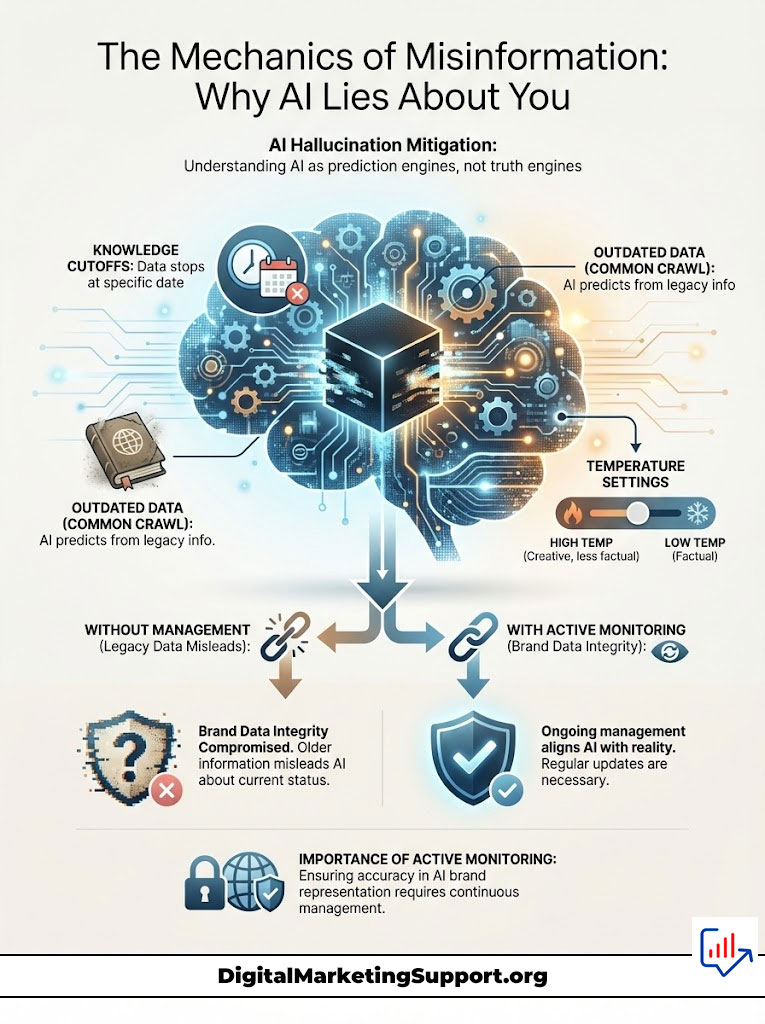

The Mechanics of Misinformation: Why AI Lies About You

To fix the problem, you first need to understand the mechanism behind the lie. AI Hallucination Mitigation begins with accepting that LLMs are not truth engines. They are prediction engines.

The Black Box Problem and Common Crawl

When a user queries a platform like SearchGPT or Claude, the model does not “know” facts in the way a human does. It predicts the next statistically likely token (word part) in a sequence based on the massive datasets it was trained on. A significant portion of this training data comes from Common Crawl, a repository of billions of web pages.

The issue with Common Crawl is that it is a snapshot of the internet that spans years. It contains outdated “About Us” pages, defunct blogs, and old news articles. If your brand’s “About” page from 2019 is heavily weighted in the training data, the AI will prioritize that old data over your current reality. This lag creates a disconnect between your live business status and your AI Brand Accuracy.

Knowledge Cutoffs and Temperature Settings

Every AI model has a “knowledge cutoff”—a date past which it has no training data. While models are increasingly using Retrieval-Augmented Generation (RAG) to look up fresh info, the core “personality” and fundamental facts about entities often reside in the pre-trained weights.

Furthermore, AI models sample their output using a setting called “temperature.” A higher temperature makes the output more varied and creative but less predictable. When a user asks a niche question about your brand and the AI lacks specific data, the model still tends to “fill in the blanks” rather than admit it does not know, and a higher temperature makes an unlikely completion more probable. This is where AI search visibility turns into liability. The AI hallucinates a plausible-sounding but factually incorrect detail to complete the pattern.
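To make the temperature effect concrete, here is a minimal sketch of temperature-scaled softmax, the usual way a temperature setting reshapes next-token probabilities. The candidate answers and their scores are made-up illustrations, not output from any real model.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate answers (illustrative only).
candidates = ["current CEO", "former CEO", "invented name"]
logits = [3.0, 2.0, 0.5]

low = softmax_with_temperature(logits, 0.5)
high = softmax_with_temperature(logits, 2.0)

for name, p_low, p_high in zip(candidates, low, high):
    print(f"{name:14s} T=0.5: {p_low:.2f}   T=2.0: {p_high:.2f}")
```

At the higher temperature, the unlikely “invented name” option receives a visibly larger share of the probability, which is why creative settings raise the odds of a wrong but plausible answer.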

Hallucination Rates and Brand Data Integrity

Recent industry analysis suggests that hallucination rates vary significantly between models. Google Gemini might hallucinate differently than Perplexity AI because they draw on different source data. For a brand manager, this means Brand Data Integrity is not a “set it and forget it” task. You might be accurately represented in Microsoft Copilot but completely misrepresented in Anthropic’s Claude.

If you do not actively manage your entity data, the AI defaults to the most statistically probable text found in its training corpus. If 80% of the internet discusses your old product line and only 20% discusses your new one, the AI will likely hallucinate that the old product is still your flagship.

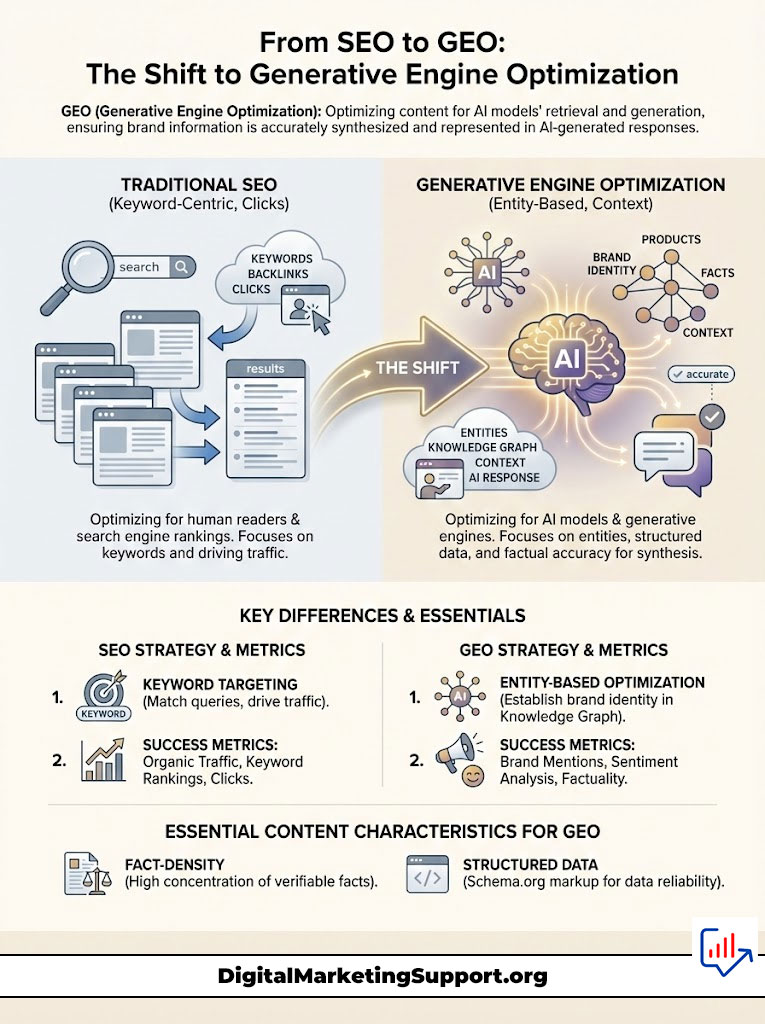

From SEO to GEO: The Shift to Generative Engine Optimization

The industry is undergoing a seismic shift. Traditional SEO was about proving relevance to a ranking algorithm. Generative Engine Optimization (GEO) is about proving authority to a synthesis engine.

Defining Generative Engine Optimization (GEO)

Generative Engine Optimization (GEO) is the practice of optimizing content, data structures, and brand citations to ensure that generative AI models select your brand as the preferred answer. In SEO, you win by getting a click. In GEO, you win by being the answer.

This requires a fundamental change in strategy. You are no longer writing for a human reader who skims headlines. You are writing for a machine that ingests facts. To improve AI Brand Accuracy, your content must be dense with entities, relationships, and verifiable assertions.

Entity-Based SEO vs. Keyword Targeting

Traditional SEO focuses on strings of text (keywords). Entity-based SEO focuses on things (concepts). Google and other AI developers use a Knowledge Graph to understand that “Apple” is a technology company, not just a fruit.

If your brand is not clearly defined as an entity in the Google Knowledge Graph, LLMs treat your brand name as just another word. They will confuse it with similar-sounding words or other companies. Generative Engine Optimization (GEO) prioritizes establishing this entity identity. You must teach the AI that [Brand Name] is a distinct legal entity with specific attributes (CEO, Location, Industry).

Comparison: SEO vs. GEO Strategy

| Feature | Traditional SEO | Generative Engine Optimization (GEO) |
| --- | --- | --- |
| Primary Goal | Rank #1 on SERP Blue Links | Be the cited source in the AI Answer |
| Success Metric | Click-Through Rate (CTR) | Brand Mention, Sentiment & Share of Model |
| Data Structure | Keywords & Backlinks | Entities, Knowledge Graph & Vectors |
| Content Focus | Long-form readability | Fact-density, Structured Data & Authority |
| User Journey | Search > Click > Read | Ask > Read Answer > Verify |
| Target Audience | Human Browsers | LLM Training Sets & RAG Bots |

Step 1: The AI Audit – Diagnosing Your Brand’s Reality

You cannot fix what you do not measure. The first step in LLM Reputation Management is a comprehensive audit of how the major AI engines currently perceive your brand.

Red Team Prompts for SearchGPT and Perplexity AI

You need to “Red Team” your own brand. This means intentionally trying to get the AI to fail or produce negative information. Do not just ask “What is [Brand Name]?” Use prompts that force the AI to dig deeper.

Execute these prompts on SearchGPT, Perplexity AI, Google Gemini, and Claude:

- The Identity Test: “Who is [Brand Name] and what do they sell? List their top 3 competitors.”

- The Leadership Test: “Who is the current CEO of [Brand Name]? When did they take office?”

- The Crisis Test: “Has [Brand Name] been involved in any recent scandals or lawsuits?”

- The Product Test: “What are the specifications of [Brand Name]’s latest product? Is it better than [Competitor]?”

Document the errors. You will likely find that SearchGPT has better “freshness” regarding news, while older models reference obsolete leadership.
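If you want to run the four tests consistently across engines, a small script can generate the prompt sheet for you. The sketch below only builds the prompts and an empty log; it does not call any AI service. The brand and competitor names are placeholders, and the `AuditRow` structure is an assumption for illustration.

```python
from dataclasses import dataclass

# Prompt templates taken from the four tests listed above.
RED_TEAM_TEMPLATES = {
    "identity": "Who is {brand} and what do they sell? List their top 3 competitors.",
    "leadership": "Who is the current CEO of {brand}? When did they take office?",
    "crisis": "Has {brand} been involved in any recent scandals or lawsuits?",
    "product": "What are the specifications of {brand}'s latest product? "
               "Is it better than {competitor}?",
}

ENGINES = ["SearchGPT", "Perplexity AI", "Google Gemini", "Claude"]

@dataclass
class AuditRow:
    engine: str
    test: str
    prompt: str
    answer: str = ""           # paste the engine's reply here
    error_found: bool = False  # set to True after manual review

def build_audit_sheet(brand, competitor):
    """Create one row per engine and test, ready to be filled in by hand."""
    rows = []
    for engine in ENGINES:
        for test, template in RED_TEAM_TEMPLATES.items():
            prompt = template.format(brand=brand, competitor=competitor)
            rows.append(AuditRow(engine, test, prompt))
    return rows

# Placeholder names, not real companies.
sheet = build_audit_sheet("Acme Corp", "Globex")
print(len(sheet), "prompts to run")
print(sheet[1].prompt)
```

Keeping the wording identical across engines makes the answers comparable from one audit to the next.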

Identifying Sentiment Drift and Brand Rot

Hallucinations are not always binary (true/false). Sometimes they manifest as “Sentiment Drift.” This happens when AI search visibility is technically accurate but tonally negative.

For example, an AI might describe your company as “formerly a leader in the space,” implying you are no longer relevant. Or it might associate your brand with a competitor’s failure because both names appear in the same Common Crawl datasets. Identifying this “brand rot” is crucial. If the AI output consistently uses passive or past-tense language when describing your active services, your AI Brand Accuracy score is degrading.
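A rough first pass at spotting this kind of language can be automated. The sketch below scans an AI answer for phrases that frame an active brand as a thing of the past. The phrase list is a hypothetical starting point, not a validated sentiment model, and the sample answer is invented.

```python
import re

# Hypothetical phrases that suggest the brand is being described in the past.
DRIFT_MARKERS = [
    r"\bformerly\b",
    r"\bwas once\b",
    r"\bused to\b",
    r"\bno longer\b",
    r"\bonce[- ]dominant\b",
]

def find_drift_markers(answer):
    """Return the drift phrases found in an AI answer (case-insensitive)."""
    hits = []
    for pattern in DRIFT_MARKERS:
        match = re.search(pattern, answer, flags=re.IGNORECASE)
        if match:
            hits.append(match.group(0).lower())
    return hits

answer = "Acme Corp, formerly a leader in the space, used to sell CRM software."
print(find_drift_markers(answer))
```

A match is only a flag for human review; “formerly” can be perfectly accurate when the AI is describing a discontinued product.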

Using Tools for LLM Reputation Management

Manual checking is essential, but for ongoing monitoring, you need tools. Platforms designed for LLM Reputation Management are emerging. These tools automate the prompting process and analyze the sentiment of the output. They track your AI search visibility over time, alerting you if a new model update suddenly changes your brand’s narrative.

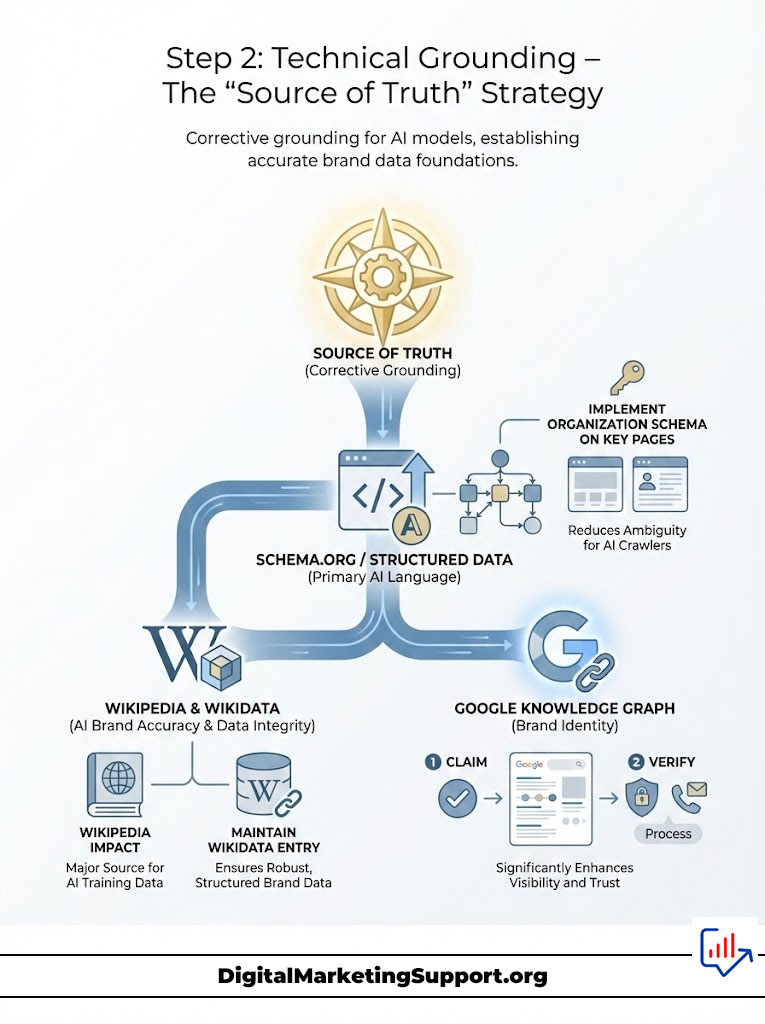

Step 2: Technical Grounding – The “Source of Truth” Strategy

Once you have identified the errors, you must provide the AI with a corrective “Source of Truth.” This is called Grounding AI models. You cannot edit the AI’s brain directly, but you can build a beacon of facts that it cannot ignore.

Leveraging Schema.org and Structured Data

The most powerful language you can speak to an AI is Schema.org / Structured Data. This is code that sits on your website and explicitly tells crawlers what the data means.

For AI Brand Accuracy, you must implement the Organization schema on your homepage and “About” page. This schema should include:

- legalName: Your official registered name.

- foundingDate: When you started.

- sameAs: Links to your verified social profiles, Wikipedia, and Wikidata.

- contactPoint: Customer service information.

By using Schema.org / Structured Data, you reduce the ambiguity for the crawler. Instead of the AI guessing your CEO’s name from a blog post, it reads the employee or founder property in your code. This is a critical pillar of Generative Engine Optimization (GEO).
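As an illustration, the properties above can be assembled into a JSON-LD block with a few lines of Python. This is a minimal sketch: every value is a placeholder, the helper name is invented, and `name`, `url`, and `founder` are additional standard Schema.org properties included here as an assumption about what a typical page would carry.

```python
import json

def build_organization_jsonld(name, legal_name, url, founding_date,
                              same_as, phone, founder_name):
    """Assemble a Schema.org Organization description as a Python dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "legalName": legal_name,
        "url": url,
        "foundingDate": founding_date,
        "sameAs": same_as,
        "contactPoint": {
            "@type": "ContactPoint",
            "contactType": "customer service",
            "telephone": phone,
        },
        "founder": {"@type": "Person", "name": founder_name},
    }

# Placeholder values; replace them with your verified company facts.
org = build_organization_jsonld(
    name="Acme",
    legal_name="Acme Corporation Ltd.",
    url="https://www.example.com",
    founding_date="2012-05-01",
    same_as=[
        "https://www.linkedin.com/company/example",
        "https://www.wikidata.org/wiki/Q0000000",
    ],
    phone="+1-555-0100",
    founder_name="Jane Doe",
)

# JSON-LD is embedded in the page inside a script tag of this type.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(org, indent=2)
    + "\n</script>"
)
print(snippet)
```

Generating the markup from a single record of company facts helps keep the homepage and “About” page consistent with each other.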

Claiming the Google Knowledge Graph

Google Gemini and many other retrieval systems rely heavily on the Google Knowledge Graph. If you do not have a Knowledge Panel, or if it is unclaimed, you are vulnerable.

To claim it, you must be verified by Google. Once claimed, you can suggest edits to facts. Note that changes here ripple out. Because Google is a primary index for the web, correcting your Knowledge Graph entry often fixes downstream errors in other models that use Google results for Retrieval-Augmented Generation (RAG).

Wikidata and Wikipedia Influence

We cannot discuss AI Brand Accuracy without mentioning Wikipedia. Wikipedia is a documented part of the training data for models such as GPT-3 and LLaMA, and it is widely assumed to carry significant weight in most other major LLMs.

If your Wikipedia page is outdated, AI search visibility will suffer. However, Wikipedia is not a marketing channel; it is an encyclopedia. You cannot simply “fix” it with marketing copy. You must ensure that the citations on Wikipedia point to recent, high-authority news sources that accurately reflect your current state.

If you cannot qualify for a Wikipedia page, focus on Wikidata. Wikidata is a structured, machine-readable knowledge base that Wikipedia and other Wikimedia projects draw on. Creating and maintaining a robust Wikidata entry is a high-leverage move for Brand Data Integrity.

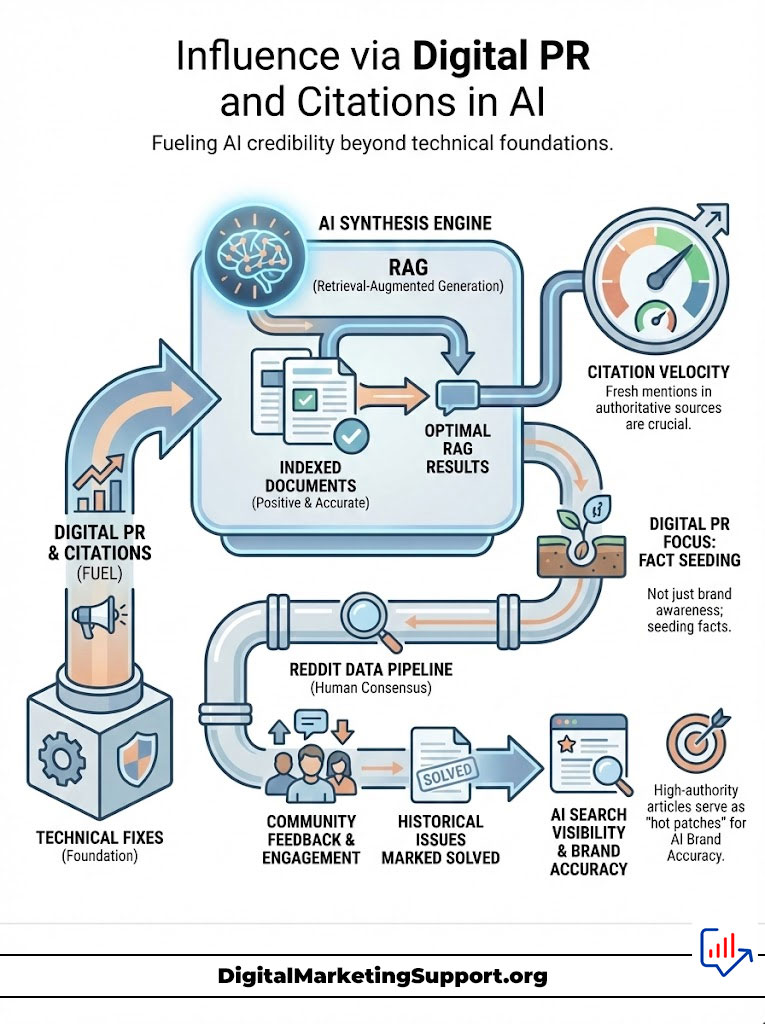

Step 3: Influence via Digital PR and Citations

Technical fixes provide the foundation, but Digital PR for AI provides the fuel. AI models tend to give more weight to third-party validation than to claims on your own website.

Retrieval-Augmented Generation (RAG) Explained

Most modern AI search engines use Retrieval-Augmented Generation (RAG). This means when you ask a question, the AI pauses, searches its index for relevant documents, and then synthesizes an answer based on those documents.

To optimize for Retrieval-Augmented Generation (RAG), you need to ensure that the documents the AI retrieves are positive and accurate. If the top-ranking news articles about your brand are five years old, the RAG process will retrieve obsolete data.
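The retrieve-then-synthesize loop can be illustrated with a toy example. The sketch below ranks a handful of invented documents by simple keyword overlap and builds the prompt a model would receive. Real systems typically use vector embeddings and far larger indexes, so treat the scoring here as a simplifying assumption.

```python
from datetime import date

# Made-up documents standing in for a search index.
DOCUMENTS = [
    {"title": "Acme Corp names Jane Doe as CEO",
     "published": date(2019, 3, 1),
     "text": "acme corp ceo jane doe appointed"},
    {"title": "Acme Corp appoints John Roe as new CEO",
     "published": date(2024, 6, 15),
     "text": "acme corp new ceo john roe appointed"},
    {"title": "Globex quarterly results",
     "published": date(2024, 7, 1),
     "text": "globex revenue quarterly results"},
]

def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they contain."""
    words = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(words & set(doc["text"].split()))
        if overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(query, retrieved):
    """Combine the retrieved sources and the question into one prompt."""
    context = "\n".join(
        f"- ({d['published']}) {d['title']}" for d in retrieved
    )
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"

query = "acme corp ceo"
hits = retrieve(query, DOCUMENTS)
print(build_prompt(query, hits))
```

In this toy index the 2019 article scores as well as the 2024 one, so both land in the prompt and the model has to reconcile two different CEOs. That is the failure mode fresh, authoritative coverage is meant to prevent.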

Citation Velocity and Digital PR for AI

You need “Citation Velocity”—a steady stream of fresh mentions in authoritative sources. This is where Digital PR for AI differs from traditional PR. You are not just looking for brand awareness; you are looking for “fact seeding.”

When you issue a press release or secure an interview, ensure that the core facts (CEO name, product focus, mission) are clearly stated. Perplexity AI and SearchGPT are voracious consumers of news feeds. A recent article in a high-authority domain like Bloomberg or TechCrunch serves as a “hot patch” for AI Brand Accuracy, overriding older data stored in the model’s long-term memory.
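There is no standard formula for Citation Velocity. One simple way to track it, assumed here purely for illustration, is to count authoritative mentions per calendar month. The dates below are invented.

```python
from collections import Counter
from datetime import date

def mentions_per_month(mention_dates):
    """Count mentions per calendar month, keyed as 'YYYY-MM'."""
    counts = Counter(d.strftime("%Y-%m") for d in mention_dates)
    return dict(sorted(counts.items()))

# Hypothetical publication dates of articles that mention the brand.
mentions = [
    date(2025, 1, 12), date(2025, 1, 30),
    date(2025, 2, 3),
    date(2025, 3, 8), date(2025, 3, 19), date(2025, 3, 27),
]

print(mentions_per_month(mentions))
```

A month with no fresh mentions is a cue to schedule a release or interview that restates your core facts.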

The Reddit Factor and Human Feedback

In a surprising turn for AI search visibility, Reddit has become a cornerstone data source. Google and OpenAI have both signed data licensing deals with Reddit. AI models value Reddit because it represents “human” consensus.

If users on Reddit are discussing bugs in your software that were fixed two years ago, the AI might still report them as current issues. LLM Reputation Management now involves community engagement. You must ensure that solved problems are marked as “solved” in public forums. This provides the AI with the context it needs to report that an issue is historical, not current.

Comparison of Major AI Engines for Brand Managers

Not all AI engines function the same way. Understanding the nuances of each platform is vital for a targeted Generative Engine Optimization (GEO) campaign.

| AI Engine | Primary Data Source | Update Frequency | Brand Optimization Focus |
| --- | --- | --- | --- |
| Google Gemini | Google Index + Knowledge Graph | Real-time | Schema, Google Business Profile & Merchant Center |
| SearchGPT (OpenAI) | Training Data + Bing Search | Periodic / Live | Digital PR, Wikipedia & Bing Indexing |
| Perplexity AI | Live Web Crawl (RAG) | Real-time | Citation Authority, News & Clear Syntax |
| Claude (Anthropic) | Curated Training Data | Low (Static) | Long-form Contextual Data & Whitepapers |
| Microsoft Copilot | Bing Index | Real-time | Microsoft Knowledge Graph & LinkedIn |

Correcting the Record: What to Do When the AI Is Wrong

Despite your best efforts with Schema.org / Structured Data and Digital PR for AI, hallucinations will happen. When they do, you need a recovery protocol.

The Feedback Loop and Reporting Errors

Most platforms offer a mechanism to report bad outputs. On ChatGPT, you can give a “Thumbs Down” and provide the correct text. This does not instantly fix the model for everyone, and there is no guarantee any single report is acted on. However, platforms may use this kind of feedback as a signal when training or tuning future versions, for example through Reinforcement Learning from Human Feedback (RLHF). Over time, this can help improve AI Brand Accuracy.

The “Inception” Method for AI Hallucination Mitigation

For stubborn hallucinations, you must use the “Inception” method. This involves creating a piece of content specifically designed to be the definitive answer to the hallucinated query.

If Perplexity AI insists your product is not vegan when it is, publish a dedicated page or press release titled: “Is [Product Name] Vegan? Yes, Here is the Certification.” Ensure this page is heavily optimized for Entity-based SEO and linked from your homepage. Then, use social channels to drive traffic to it. The goal is to force this specific URL into the Retrieval-Augmented Generation (RAG) window of the AI.

Measuring Success in an AI-First World

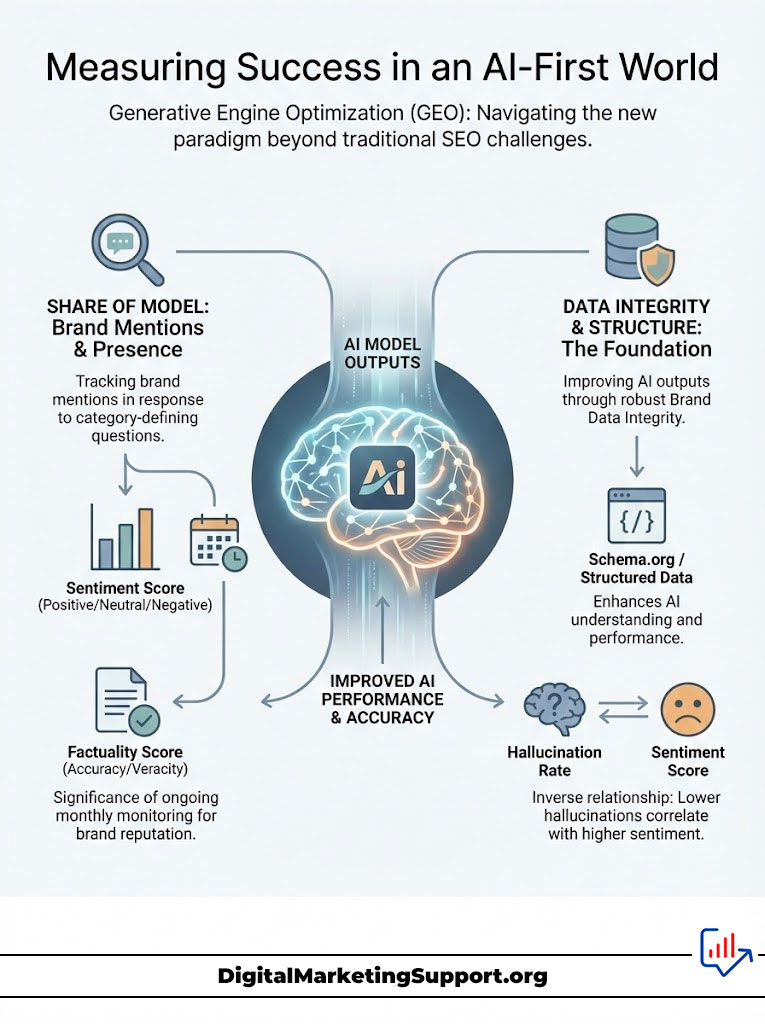

The metrics for Generative Engine Optimization (GEO) are harder to track than SEO, but they exist. You are looking for Share of Model.

Tracking Share of Model

How often is your brand mentioned when a user asks a category-defining question? If someone asks SearchGPT for “best enterprise CRM,” do you appear in the generated list? This is your “Share of Model.”
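As a rough sketch, Share of Model can be computed as the fraction of sampled AI answers that mention your brand. The responses below are invented, and simple substring matching is an assumption that will miss misspellings or abbreviations.

```python
def share_of_model(responses, brand):
    """Fraction of AI responses that mention the brand (case-insensitive)."""
    if not responses:
        return 0.0
    mentioned = sum(1 for text in responses if brand.lower() in text.lower())
    return mentioned / len(responses)

# Hypothetical answers collected for the prompt "best enterprise CRM".
responses = [
    "Top enterprise CRMs include Acme CRM, Globex, and Initech.",
    "Consider Globex or Initech for large teams.",
    "Acme CRM is a common choice for enterprises.",
    "Popular options are Globex and Hooli.",
]

print(f"Share of Model: {share_of_model(responses, 'Acme CRM'):.0%}")
```

Because AI answers vary from run to run, sample the same prompt several times per engine before reading much into the number.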

Sentiment and Factuality Scores

You must also score the output. Is the sentiment positive? Is the factuality 100%? Track these metrics month-over-month. As you improve your Brand Data Integrity through Schema.org / Structured Data and Digital PR for AI, you should see the Hallucination Rate drop and the Sentiment Score rise.
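One simple way to put a number on this, assumed here for illustration, is to mark each fact in an audited answer as correct or incorrect and report the share that was wrong. The audit data below is invented.

```python
def hallucination_rate(checks):
    """Share of checked claims that were wrong (True means correct)."""
    if not checks:
        return 0.0
    return checks.count(False) / len(checks)

# Hypothetical audit results: one boolean per fact checked that month.
monthly_audits = {
    "2025-09": [True, False, False, True, True, False, True, True],
    "2025-10": [True, True, False, True, True, True, True, True],
}

for month, checks in monthly_audits.items():
    print(month, f"hallucination rate: {hallucination_rate(checks):.1%}")
```

Checking the same set of facts every month keeps the trend line meaningful.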

Summary & Key Takeaways

The transition to AI search is not coming; it is here. AI Brand Accuracy is now a fundamental component of brand equity. If you ignore it, you allow a stochastic parrot to define your public image.

To secure your future, remember these core pillars:

- Audit Relentlessly: Use SearchGPT and Perplexity AI to constantly check your brand’s pulse.

- Ground Technically: Use Schema.org / Structured Data and the Google Knowledge Graph to provide a machine-readable source of truth.

- Verify Authority: Use Digital PR for AI to build a wall of citations that Retrieval-Augmented Generation (RAG) systems cannot ignore.

- Engage Communities: Manage your narrative on Reddit and LinkedIn to ensure human consensus aligns with brand reality.

By mastering Generative Engine Optimization (GEO), you do more than fix errors. You position your brand as the authoritative answer in the world’s most advanced search engines.

Frequently Asked Questions (FAQ)

What exactly causes AI to hallucinate facts about my brand?

AI hallucinations occur when Large Language Models (LLMs) encounter gaps, conflicts, or outdated information in their training data. Instead of admitting ignorance, the model predicts the most statistically probable sequence of words, often conflating your brand with similar entities or outdated facts from Common Crawl.

How is Generative Engine Optimization (GEO) different from traditional SEO?

While SEO focuses on ranking links for keywords to drive clicks, Generative Engine Optimization (GEO) focuses on optimizing entities and data structures to become the direct answer synthesized by an AI. GEO prioritizes Brand Data Integrity and authority over click-through rates.

Can I pay OpenAI or Google to fix my brand information directly?

No, there is currently no “paid support” line to manually edit the training weights of models like GPT-4 or Gemini. You must influence the output organically by improving your AI Search Visibility through high-authority citations, Schema.org / Structured Data, and verified Knowledge Panels.

Why does Perplexity AI show different results than ChatGPT?

Perplexity AI relies heavily on real-time Retrieval-Augmented Generation (RAG), meaning it scans the live web to construct answers based on current sources. ChatGPT (depending on the specific model version used) often relies more heavily on pre-trained internal weights and historical data, though it also uses browsing capabilities. This difference explains why Perplexity is often better for breaking news while ChatGPT provides more comprehensive historical context.

How often should I audit my brand on AI platforms?

Given the rapid update cycles of LLMs and the constant refresh of search indices, a monthly audit is recommended. For high-stakes industries like finance or healthcare, it is safer to use real-time LLM Reputation Management tools that monitor daily changes in AI search visibility, so misinformation is caught before it spreads.

Does social media content affect AI search results?

Yes, significantly. Platforms like LinkedIn and X (formerly Twitter) are frequently indexed by search engines to gauge real-time sentiment and verify leadership profiles. Ensuring your executive team’s LinkedIn profiles are consistent with your official website helps cross-verify data for AI Brand Accuracy.

What is the fastest way to fix a specific factual error?

Updating high-authority third-party sources yields the fastest results. If your Google Knowledge Graph or Wikidata entry is incorrect, fixing those usually propagates to Google Gemini and other models faster than updating a blog post on your own site.

Do Schema markups guarantee accurate AI answers?

No mechanism offers a 100% guarantee with probabilistic models. However, implementing detailed Schema.org / Structured Data gives crawlers one of the clearest signals available. It acts as a strong hint rather than a hard constraint: it lowers the probability of hallucination by providing a machine-readable dictionary of your brand’s facts.

Is AI reputation management necessary for small businesses?

Absolutely. As local search shifts toward conversational AI (e.g., “Find a reliable plumber near me who offers emergency services”), incorrect data about your hours, services, or location can kill lead generation instantly. AI Search Visibility is just as critical for local SEO as it is for global enterprises.

What tools can monitor AI brand sentiment?

While traditional social listening tools are adapting, specialized platforms like Brand24, Awario, and newer custom-built LLM Reputation Management dashboards are best for tracking these specific metrics. They allow you to see not just if you are mentioned, but how the AI constructs the narrative around your brand.

What is “Grounding” in the context of AI?

Grounding AI models refers to the process of linking an AI’s generation to a verifiable, external source of information. When you optimize your content for Generative Engine Optimization (GEO), you are essentially creating the “ground” that the AI stands on to reach the correct conclusion, rather than letting it float in the “cloud” of its training data.

Can I sue an AI company for brand hallucinations?

Legal precedents regarding AI defamation are still evolving in 2025. While some lawsuits have been filed, they are complex and slow. The most effective immediate strategy is not litigation but proactive data management and AI Hallucination Mitigation techniques to correct the record technically.

Disclaimer

The information provided in this guide regarding AI Brand Accuracy and Generative Engine Optimization (GEO) is based on the current technological landscape of Large Language Models (LLMs) and search algorithms as of late 2025. Due to the rapid evolution of AI technology, algorithms and best practices may shift. This content does not constitute legal advice regarding defamation or corporate liability.
