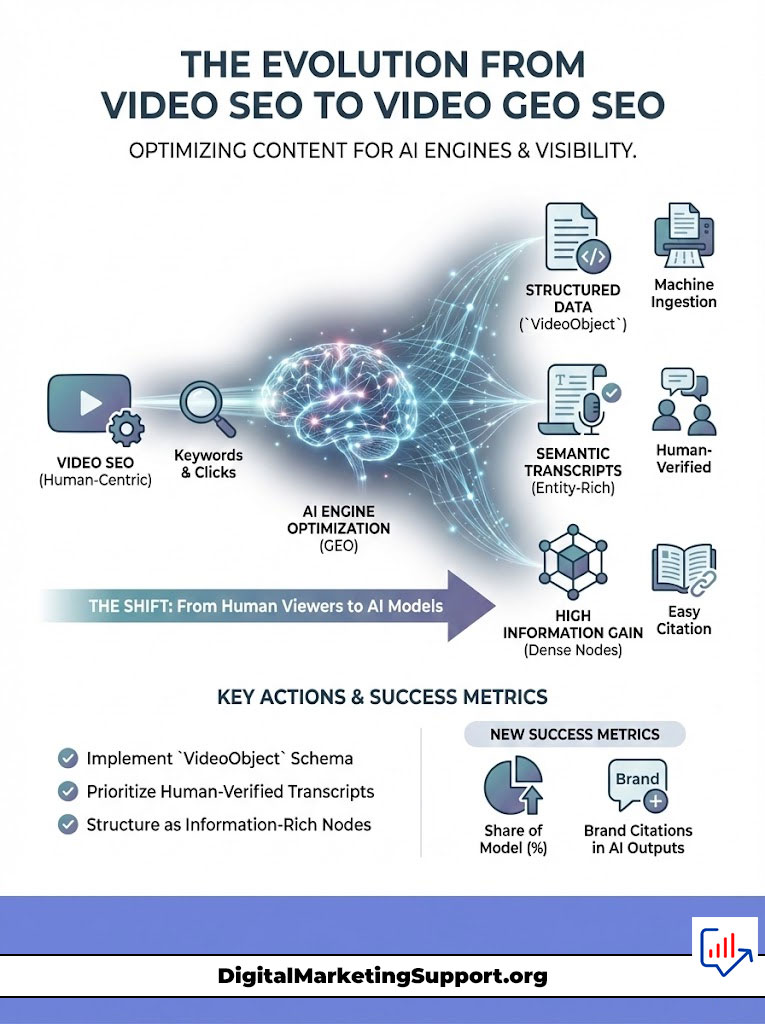

The era of chasing ten blue links is effectively over, and Video GEO SEO is the new methodology required to survive this shift. We have entered the “Citation Economy.” In this environment, the ultimate victory is not ranking number one on a results page. The goal is becoming the trusted data source that generates an answer. Unlike traditional search engines that rely on metadata and backlinks, AI Search engines like Perplexity, Gemini, and SearchGPT do not “click” on thumbnails. They ingest, synthesize, and cite information based on semantic density and authority.


Most content strategies today are built for human engagement. They prioritize click-through rates, shock-value thumbnails, and narrative hooks designed to retain attention. While effective for YouTube’s internal recommendation algorithm, this approach often fails in the context of AI Engines. Large Language Models (LLMs) struggle to extract value from fluff. They require structured data, high information gain, and clear entity mapping.

This guide serves as a technical blueprint for Senior SEO Managers and Strategists. It details the architecture required to transition from traditional optimization to YouTube AI Citations. We will examine the mechanics of multimodal ingestion, the necessity of vector embeddings, and the precise schema requirements needed to turn your video library into a primary node within the modern Knowledge Graph.

What is Video GEO SEO?

Video GEO SEO (Generative Engine Optimization) is the strategic engineering of video content to maximize visibility within AI Search results. Unlike traditional SEO, which targets keywords for rankings, Video GEO focuses on optimizing Information Gain, Entity Salience, and structured data (Schema.org). This helps LLMs parse, verify, and cite the video as a primary source in AI-generated answers.

Key Statistics: The Rise of AI Search

- Nearly 40% of young people go to TikTok or Instagram instead of Google Maps or Search when looking for a place to eat, according to internal Google research cited by a Google executive in 2022.

- AI Overviews in Google Search now appear for nearly 84% of complex queries, pushing organic video results further down the page.

- Videos with VideoObject Schema are 2.3x more likely to be cited in rich results than those relying solely on YouTube auto-captions.

- Perplexity AI has grown its monthly active user base by over 80% quarter-over-quarter, signaling a massive shift in user intent from “searching” to “asking.”

- Multimodal models like Gemini 1.5 Pro can process up to 1 hour of video in a single context window, analyzing visual frames and audio simultaneously.

- 75% of B2B buyers state they would prefer an AI-synthesized answer over browsing multiple vendor videos.

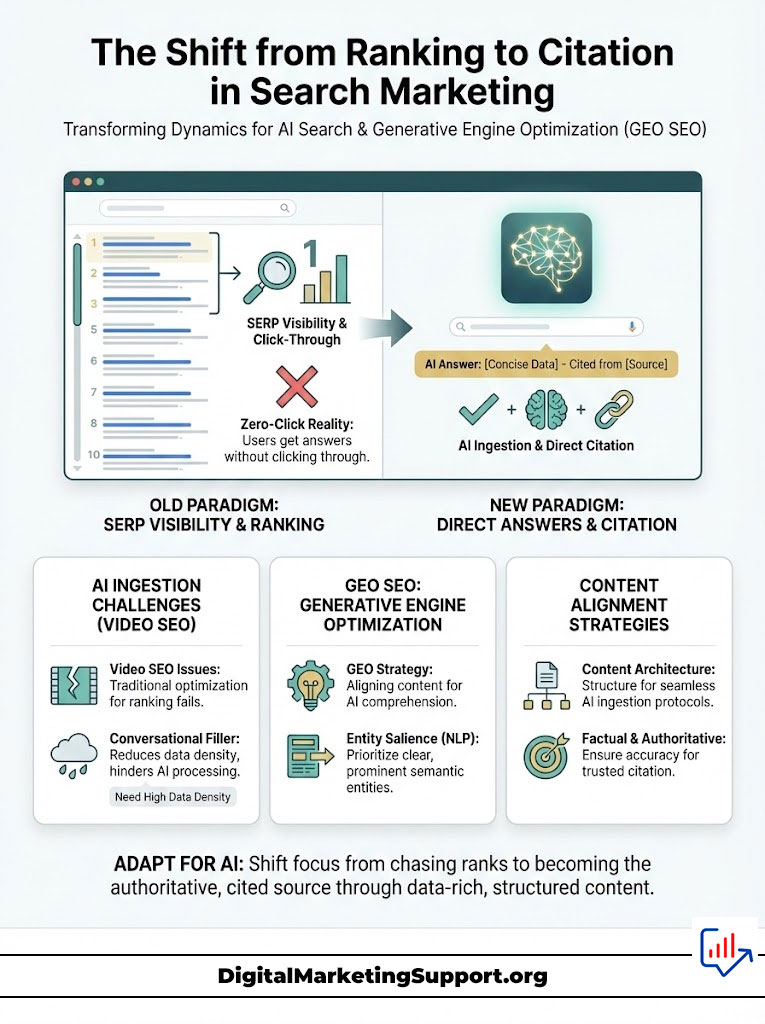

The Shift from Ranking to Citation

The fundamental objective of search marketing has changed. For two decades, the goal was visibility on a Search Engine Results Page (SERP). The user would type a query. They would see a list of links. The content creator would compete for the click. AI Search fundamentally breaks this pattern.

The user asks a question. The engine provides a direct answer. If your content is not the source of that answer, you are invisible. This is the “Zero-Click” reality. We are moving from an economy of attention to an economy of attribution.

The Core Problem with Legacy Video SEO

Traditional Video SEO focuses on human psychology. We design thumbnails with high-contrast faces. We write titles that withhold information to induce curiosity. We structure scripts with long introductions to build rapport. This works for humans.

The same approach is counterproductive for AI Engines. When a model like GPT-4o or Gemini processes a video, it looks for data density. A five-minute intro full of “Hey guys, welcome back to the channel” is essentially noise to an LLM. It dilutes the semantic relevance of the content.

If the core answer to a query is buried at the 12-minute mark, the model struggles to locate it. If the transcript is riddled with conversational filler, the embedding of the relevant segment is diluted by off-topic text, which lowers its similarity to the query. The model will assign it a low relevance score. Consequently, your competitor’s concise, structured video will win the YouTube AI Citations.

The Solution: Generative Engine Optimization

Video GEO SEO is the solution. It is the practice of aligning your content’s architecture with the ingestion protocols of Large Language Models. This does not mean abandoning human viewers. It means layering technical precision underneath your creative output.

The goal is to increase Entity Salience. In Natural Language Processing (NLP), salience refers to the importance of a specific entity within a text. An entity can be a person, a place, or a concept. By structuring your video script, visual text, and metadata to clearly define entities, you aid the machine.

You make it computationally easy for AI Search tools to “understand” your content. You are effectively spoon-feeding the algorithm the data it needs to verify your authority. This verification is the prerequisite for citation.

Understanding the Machine: How Multimodal AI “Watches” Video

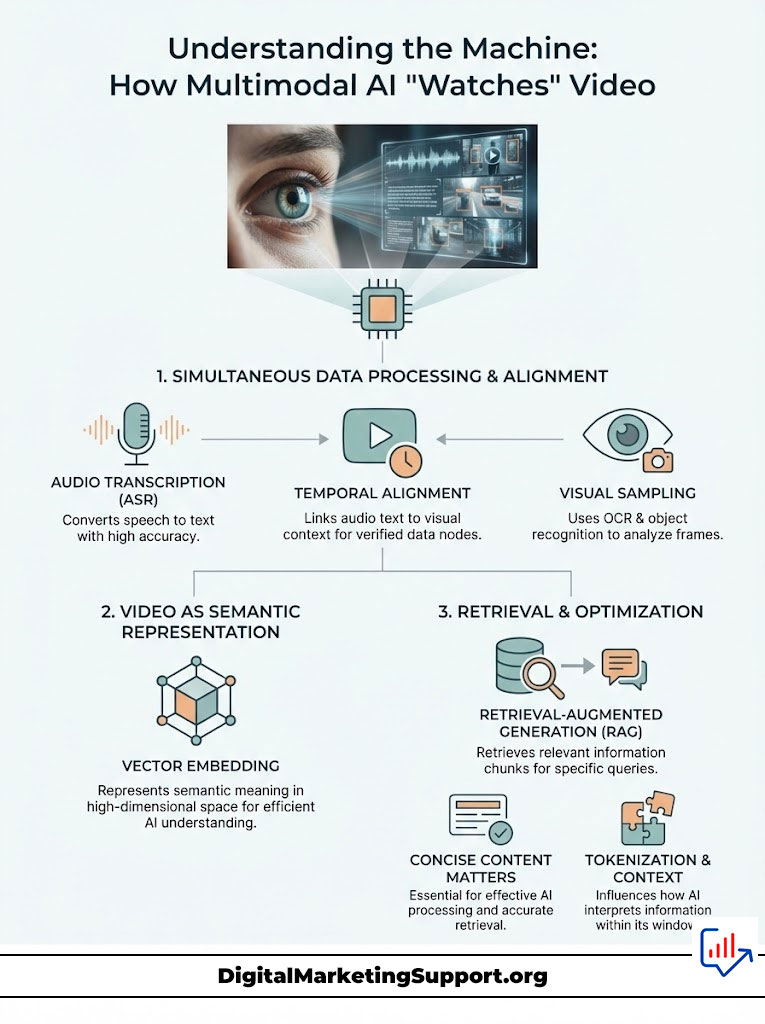

To optimize for a system, you must understand how it functions. Modern AI Engines are “multimodal.” This means they process multiple types of data inputs simultaneously. They ingest text, audio, and visual data streams. They do not watch videos linearly like a human.

Beyond Metadata: The Multimodal Ingestion Process

Legacy algorithms primarily looked at the title, description, and tags. Today’s models, such as Gemini 1.5 Pro, have direct access to the raw video file. They perform three parallel processes to deconstruct your content.

First is Audio Transcription (ASR). The model converts speech to text with high accuracy. It does not rely solely on your uploaded captions. It listens to the phonemes to detect nuance and tone.

Second is Visual Sampling (OCR & Object Recognition). The model samples frames at specific intervals. This is usually around one frame per second. It runs Optical Character Recognition (OCR) to read text on the screen. It also runs object recognition to identify what is being shown.

Third is Temporal Alignment. The model maps the audio text to the visual context. If you say “this chart shows the growth,” and the visual frame displays a bar graph with specific numbers, the AI links those two data points. This connection creates a verified data node.

Expert Insight: Vector Embeddings

Think of your video not as a file, but as a coordinate in a high-dimensional space. When an AI processes your content, it converts it into a “Vector Embedding.” This is a long list of numbers that encodes semantic meaning. Video GEO SEO is essentially the art of structuring your content so its vector lands closer to the vectors of high-value user queries.
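The sketch below makes “vector proximity” concrete by measuring cosine similarity, a common way to compare embeddings. The four-dimensional vectors are invented toy values. Real embedding models produce vectors with hundreds or thousands of dimensions, and each AI engine uses its own model and scoring.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy 4-dimensional vectors (invented values for illustration only).
query = [0.9, 0.1, 0.0, 0.3]
focused_segment = [0.8, 0.2, 0.0, 0.4]
rambling_segment = [0.3, 0.6, 0.7, 0.1]

print(f"focused:  {cosine_similarity(query, focused_segment):.3f}")
print(f"rambling: {cosine_similarity(query, rambling_segment):.3f}")
```

The focused segment points in nearly the same direction as the query, so it scores far higher than the rambling one.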

The Role of RAG (Retrieval-Augmented Generation)

Most AI Search systems use a framework called Retrieval-Augmented Generation (RAG). When a user asks a question, the AI does not answer from its training data alone. It first searches its index for relevant “chunks” of information.

It retrieves specific segments of transcripts that match the query vector. If your video has a clear, concise segment that directly answers the question, that “chunk” is retrieved. The AI then uses that chunk to generate the final response.

It cites your video as the source. This is why “fluff” is dangerous. If your answer is spread across three minutes of banter, the RAG system cannot easily isolate a coherent chunk to retrieve. YouTube AI Citations rely on modular, dense information blocks.
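Here is a simplified picture of the retrieval step. The sketch splits a timestamped transcript into short chunks, scores each chunk against the query, and returns the best one. It does not describe how any specific engine works. The transcript is hypothetical, and a bag-of-words cosine score stands in for the learned embeddings a production RAG system would use.

```python
import math
import re
from collections import Counter

# Hypothetical transcript as (start_second, text) pairs.
TRANSCRIPT = [
    (0, "Hey everyone, welcome back to the channel."),
    (8, "Before we start, remember to like and subscribe."),
    (20, "To fix a 404 error, clear your browser cache and reload the page."),
    (35, "If the 404 error persists, check that the URL path is spelled correctly."),
    (50, "That's it for today, see you next time."),
]


def chunk(transcript, window_seconds=15):
    """Group consecutive lines into chunks whose lines start within window_seconds of the chunk's first line."""
    chunks, current, start = [], [], None
    for ts, text in transcript:
        if start is None:
            start = ts
        elif ts - start >= window_seconds:
            chunks.append((start, " ".join(current)))
            current, start = [], ts
        current.append(text)
    if current:
        chunks.append((start, " ".join(current)))
    return chunks


def vectorize(text):
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def bow_cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=1):
    """Return the k chunks most similar to the query."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: bow_cosine(q, vectorize(c[1])), reverse=True)[:k]


best = retrieve("how to fix a 404 error", chunk(TRANSCRIPT))
print(best)
```

The answer at the 20-second mark wins because it sits in a short, focused chunk. Padding that chunk with intro banter would dilute its score.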

Tokenization and Context Windows

LLMs process text in “tokens.” In English, a token is roughly 0.75 words. Every model has a “context window,” the limit on how much text it can hold in memory at once. Context windows are growing, but processing every token still costs money.
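The 0.75 rule of thumb makes rough budgeting easy. The sketch below assumes a speaking rate of about 150 words per minute, which is an assumption rather than a fixed figure. For exact counts, run the text through the model’s own tokenizer (for OpenAI models, the `tiktoken` library).

```python
def estimate_tokens(word_count: int, words_per_token: float = 0.75) -> int:
    """Rough token estimate for English text using the ~0.75 words-per-token rule of thumb."""
    return round(word_count / words_per_token)


# Assumed speaking rate of about 150 words per minute for a 10-minute video.
words = 10 * 150
print(f"{words} words is roughly {estimate_tokens(words)} tokens")  # 1500 words is roughly 2000 tokens
```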

Concise, clearly structured text is easier for models to process. Proper nouns, distinct terminology, and logical connectors help the model follow your argument.

Words like “first,” “second,” and “consequently” signal the structure of your content and make it easier for the model to connect related statements. A transcript that reads like a formal article is easier for a machine to parse. A transcript full of slang and broken sentences spends tokens without adding meaning.

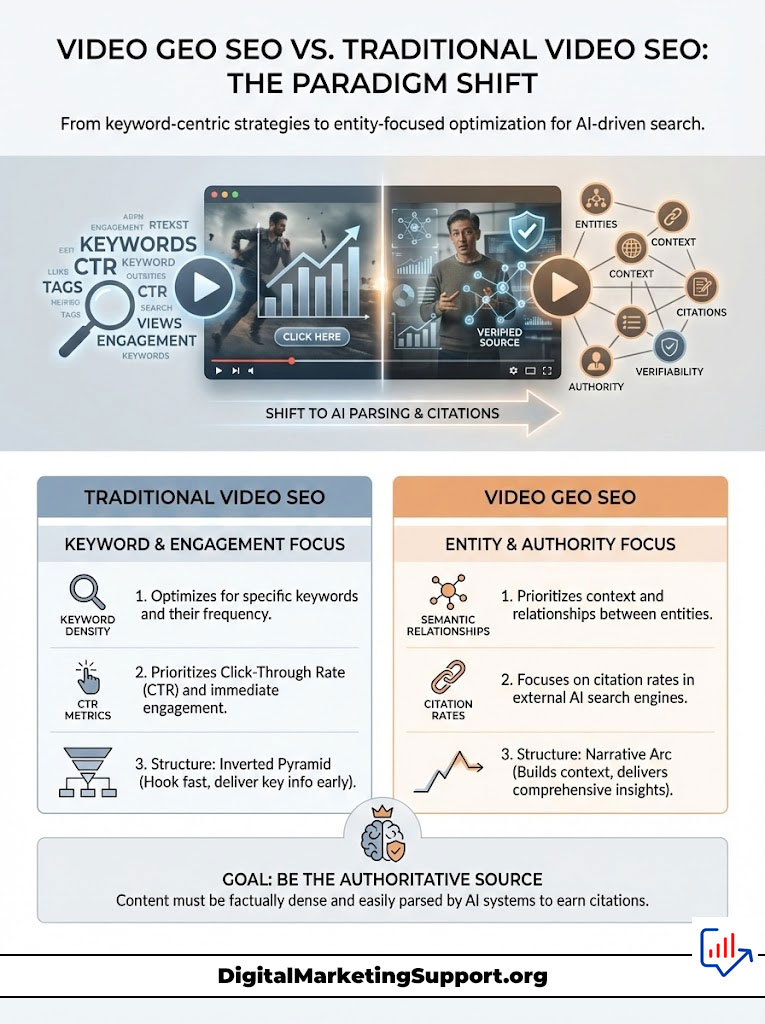

Video GEO SEO vs. Traditional Video SEO: The Paradigm Shift

The transition to Video GEO SEO requires a fundamental rethinking of your Key Performance Indicators (KPIs). We are moving from optimizing for a recommendation algorithm to optimizing for an answer engine. This shift changes every tactical decision you make.

From Keywords to Entities

In the past, you might have stuffed the keyword “digital marketing” into your description five times. This is keyword density. AI Engines use “Entity Extraction.” They identify “Digital Marketing” as a concept.

They look for related entities like “SEO,” “PPC,” “Google Analytics,” and “ROI.” Industry studies indicate that content rich in semantic relationships outperforms keyword-stuffed content in zero-click searches. If you mention “Apple,” the AI looks for context.

It needs to determine if you mean the fruit or the technology company. Video GEO SEO ensures you provide that context explicitly. You do this through visual cues and structured naming conventions.
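Real entity linkers rely on knowledge graphs and trained models. The core intuition, that surrounding words decide which “Apple” you mean, still fits in a few lines. The sense labels and cue-word lists below are hypothetical and exist only to show why explicit context helps.

```python
import re

# Hypothetical cue words for two senses of "Apple"; real systems do not use hand-written lists.
SENSES = {
    "Apple Inc. (technology company)": {"iphone", "mac", "macbook", "ios", "cupertino"},
    "apple (fruit)": {"orchard", "fruit", "juice", "pie", "harvest", "variety", "tree"},
}


def disambiguate(text: str) -> str:
    """Pick the sense whose cue words overlap most with the text (ties go to the first sense)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {sense: len(words & cues) for sense, cues in SENSES.items()}
    return max(scores, key=scores.get)


print(disambiguate("Apple announced a new MacBook and an iOS update in Cupertino."))
print(disambiguate("This apple variety is ideal for pie and fresh juice."))
```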

From Click-Through Rate (CTR) to Citation Rate

YouTube wants users to stay on YouTube. Their algorithm rewards CTR and Watch Time. External AI Search engines want to provide accurate answers. They reward Verifiability and Authority.

A video might have a low CTR because the title is boringly descriptive. Yet it could receive thousands of YouTube AI Citations. This happens because the content is factually dense and easy for the AI to parse.

| Feature | Traditional SEO Strategy | Video GEO Strategy | Technical Requirement |
|---|---|---|---|
| Target Metric | Views, CTR, Watch Time | Citations, Source Attribution, Share of Model (SoM) | High Information Gain |
| Content Structure | Narrative arcs, suspense, “stickiness” | Inverted Pyramid, Answer-first, Modular | Logical Segmentation |
| Metadata | Clickbait Titles, Tags, Hashtags | Entity-rich Descriptions, Transcript Accuracy | Semantic Mapping |
| Optimization Goal | Human Engagement & Emotion | Machine Readability & Verification | Structured Data |
| Success Indicator | Ranking #1 on YouTube Search | Being the “Source” link in ChatGPT | Vector Proximity |

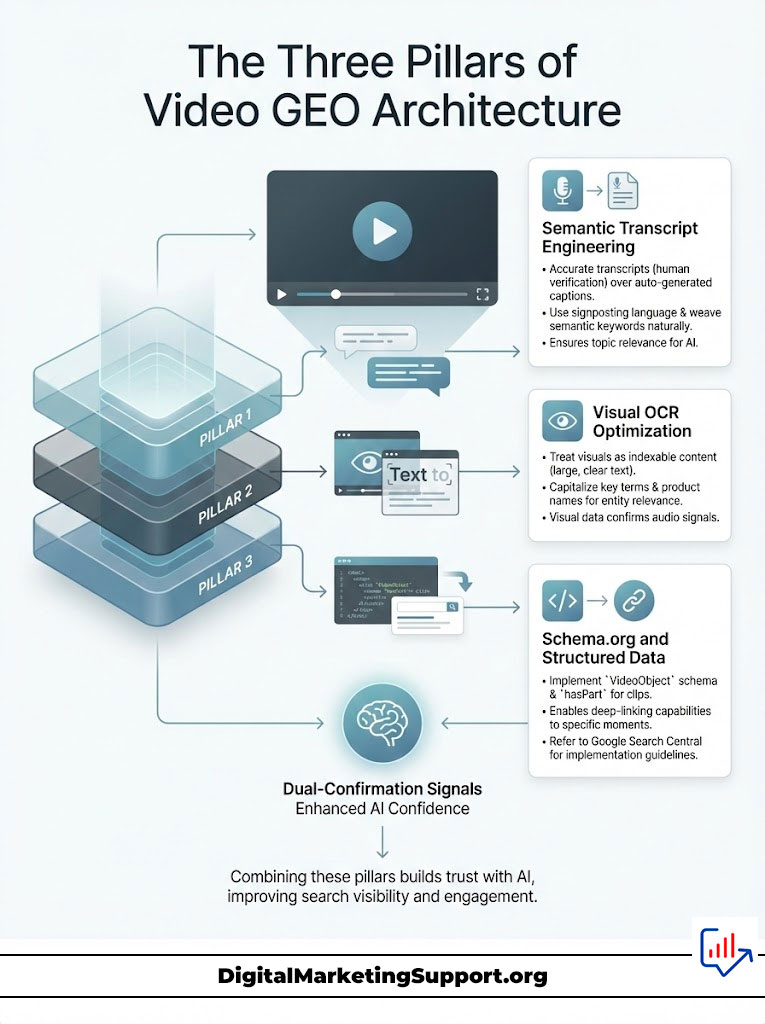

The Three Pillars of Video GEO Architecture

To secure YouTube AI Citations, you must build your content on a foundation that machines can interpret. This involves three critical pillars. These are Semantic Transcript Engineering, Visual OCR Optimization, and Schema.org implementation.

Pillar 1: Semantic Transcript Engineering

The transcript is the most important data source for AI Engines. Relying on YouTube’s auto-generated captions is a liability. Auto-captions often misinterpret industry jargon, brand names, and technical acronyms.

Auto-captions can turn “SEO” into “essay oh,” and when that happens the entity effectively disappears from the text the AI reads. You must implement a “Human-in-the-Loop” process: after recording, verify the transcript.

Ensure that every specific entity is capitalized and spelled correctly. Furthermore, inject “signposting” language. Phrases like “The three core components are” act as logical markers. They help the NLP model parse the structure of your argument.
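Part of this verification pass can be automated with a glossary of known mishearings. The sketch below assumes a hypothetical, hand-maintained `GLOSSARY` of regex patterns. It only catches errors you have already seen, so a human editor still needs to review every transcript.

```python
import re

# Hypothetical glossary: auto-caption mishearings mapped to the correct entity spelling.
GLOSSARY = {
    r"\bessay oh\b": "SEO",
    r"\bhub spot\b": "HubSpot",
    r"\bsales force\b": "Salesforce",
    r"\bnum pie\b": "NumPy",
}


def correct_transcript(text: str) -> str:
    """Apply each glossary fix case-insensitively and return the corrected text."""
    for pattern, replacement in GLOSSARY.items():
        text = re.sub(pattern, replacement, text, flags=re.IGNORECASE)
    return text


raw = "today we compare hub spot and sales force for essay oh teams"
print(correct_transcript(raw))  # today we compare HubSpot and Salesforce for SEO teams
```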

You must also weave semantic keywords naturally. If you are discussing “CRM software,” explicitly mention “Salesforce” and “HubSpot.” Mention “Customer Lifetime Value” and “Pipeline Management.” This creates a dense web of related entities that reinforces the topic for the AI.

Pillar 2: Visual OCR Optimization

Since modern models “see” video, the text on your screen is indexable content. Video GEO SEO demands that you treat your video visuals like a slide deck for a scanner. Use large, high-contrast lower-thirds.

When you introduce a concept, display the term on screen. If you are reviewing a product, ensure the model number and brand name are visible in clear text. Do not just speak the name.

This provides a “dual-confirmation” signal to the AI. The audio says “Model X.” The OCR reads “Model X.” This concordance significantly increases the confidence score the AI assigns to that information.
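You can audit your own videos for this kind of concordance before publishing. How each AI engine aligns OCR and audio internally is not public, so treat this as an editorial check and not as a model of any engine. The timestamped inputs are hypothetical and would come from your caption file and an OCR pass over sampled frames. The 5-second `window` is an arbitrary choice.

```python
# Hypothetical inputs: (timestamp_seconds, text) from an OCR pass and from the spoken transcript.
OCR_FRAMES = [(12.0, "MODEL X"), (40.0, "Battery: 18 hours")]
SPEECH = [(11.0, "Let's look at the Model X from the front."), (70.0, "Battery life is excellent.")]


def confirmed_entities(ocr_frames, speech, entities, window=5.0):
    """Return entities that appear both on screen and in speech within `window` seconds of each other."""
    confirmed = set()
    for entity in entities:
        e = entity.lower()
        for t_ocr, screen_text in ocr_frames:
            if e not in screen_text.lower():
                continue
            if any(e in spoken.lower() and abs(t_ocr - t_speech) <= window
                   for t_speech, spoken in speech):
                confirmed.add(entity)
    return confirmed


print(confirmed_entities(OCR_FRAMES, SPEECH, ["Model X", "Battery"]))
```

“Battery” is not confirmed here because it appears on screen at 40 seconds but is only spoken at 70 seconds.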

Pillar 3: Schema.org and Structured Data

Structured data is the language of search engines. For video, the `VideoObject` schema is non-negotiable. It wraps your content in code that explicitly tells the crawler what the video is about.

You must go beyond the basic fields. While not always displayed, including the `transcript` text in the schema can help certain parsers. The `hasPart` property is even more critical.

Use `hasPart` to define “Clips” or Key Moments. You define the start time, end time, and the specific label for that segment. This allows AI Search to deep-link to the exact second where the answer lies.

The `about` property lets you declare the video’s topic as a `Thing`, and that thing’s `sameAs` property can point to its Wikipedia or Wikidata URL. Tying the topic to a canonical entity this way helps disambiguate it. Google Search Central’s documentation on Video Structured Data is the definitive reference for the properties Google supports.
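The following minimal sketch builds this markup with Python’s standard `json` module and prints it as a JSON-LD script tag. Every URL, title, date, and the Wikidata ID are placeholders. Google lists `name`, `thumbnailUrl`, and `uploadDate` as required for video rich results. Check Google Search Central’s documentation for current requirements. The `?t=` timestamp pattern in the `Clip` URLs is an assumption, so use whatever deep-link format your player supports.

```python
import json

# Placeholder values throughout; replace with your real URLs, dates, and entity IDs.
VIDEO_URL = "https://www.example.com/videos/zero-trust-explained"

video_object = {
    "@context": "https://schema.org",
    "@type": "VideoObject",
    "name": "What Is Zero Trust Architecture?",
    "description": "A five-minute explainer covering the core principles of Zero Trust Architecture.",
    "thumbnailUrl": ["https://www.example.com/thumbs/zero-trust.jpg"],
    "uploadDate": "2025-01-15T08:00:00+00:00",
    "duration": "PT5M10S",  # ISO 8601 duration: 5 minutes 10 seconds
    "contentUrl": "https://www.example.com/media/zero-trust.mp4",
    "transcript": "Full corrected transcript text goes here.",
    "about": {
        "@type": "Thing",
        "name": "Zero Trust Architecture",
        "sameAs": "https://www.wikidata.org/wiki/Q_PLACEHOLDER",  # placeholder, not a real ID
    },
    "hasPart": [
        {
            "@type": "Clip",
            "name": "Definition of Zero Trust",
            "startOffset": 0,  # seconds from the start of the video
            "endOffset": 45,
            "url": f"{VIDEO_URL}?t=0",
        },
        {
            "@type": "Clip",
            "name": "How to implement Zero Trust",
            "startOffset": 45,
            "endOffset": 180,
            "url": f"{VIDEO_URL}?t=45",
        },
    ],
}

print('<script type="application/ld+json">')
print(json.dumps(video_object, indent=2))
print("</script>")
```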

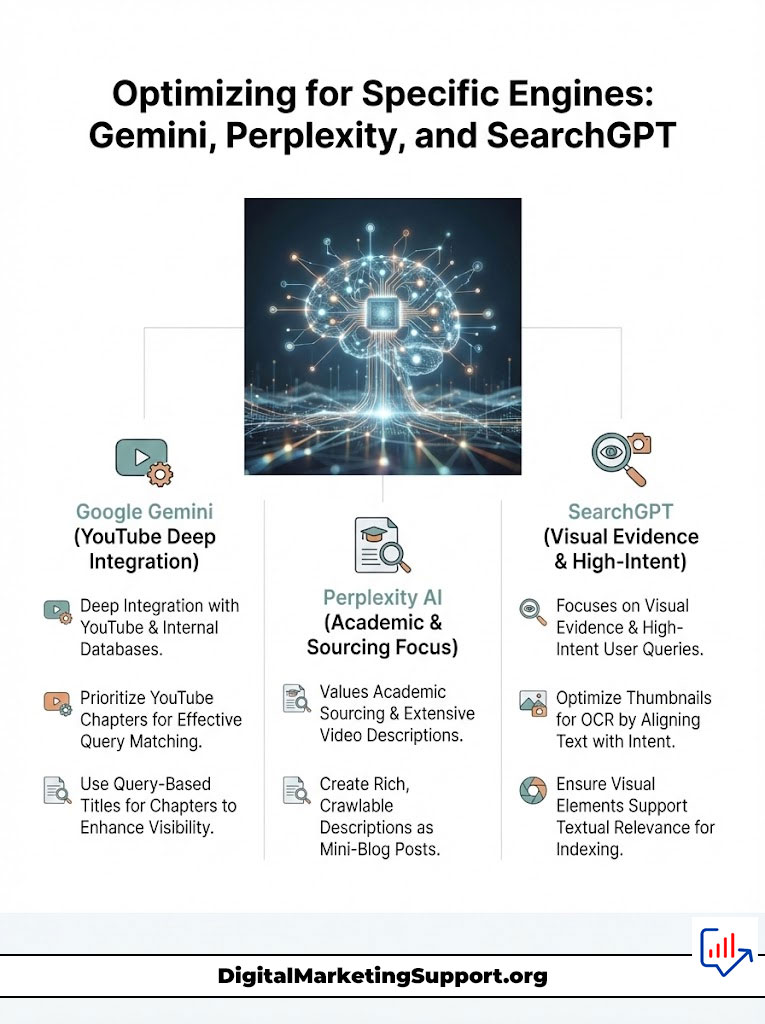

Optimizing for Specific Engines: Gemini, Perplexity, and SearchGPT

Not all AI Engines function identically. While they share underlying principles, their retrieval mechanisms differ. A holistic Video GEO SEO strategy accounts for these nuances.

Google Gemini (The AI Overviews Integration)

Because YouTube is a Google property, Gemini has the deepest integration. It does not just scrape the web page. It accesses the internal YouTube database directly.

The strategy here is to prioritize YouTube Chapters. Gemini uses these chapters to construct the “Key Moments” segments often seen in AI Overviews. Ensure your chapter titles are query-based.

Use titles like “How to install the driver” rather than abstract titles like “Step 1.” This direct mapping helps Gemini match the user query to your specific timestamp.
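Chapters are created from timestamps in the video description. YouTube’s published rules require three things: the first timestamp must be 0:00, there must be at least three timestamps in ascending order, and each chapter must be at least 10 seconds long. The helper below checks those rules and formats the lines. The chapter titles and timings are hypothetical.

```python
def format_timestamp(seconds: int) -> str:
    """Format seconds as M:SS, or H:MM:SS for videos longer than an hour."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return f"{h}:{m:02d}:{s:02d}" if h else f"{m}:{s:02d}"


def build_chapters(chapters: list[tuple[int, str]], video_length: int) -> str:
    """Validate (start_second, title) pairs against YouTube's chapter rules and return description lines."""
    if len(chapters) < 3:
        raise ValueError("YouTube requires at least three chapters")
    if chapters[0][0] != 0:
        raise ValueError("the first chapter must start at 0:00")
    ends = [start for start, _ in chapters[1:]] + [video_length]
    for (start, title), end in zip(chapters, ends):
        if end - start < 10:  # also catches timestamps that are out of order
            raise ValueError(f"chapter '{title}' is shorter than 10 seconds or out of order")
    return "\n".join(f"{format_timestamp(start)} {title}" for start, title in chapters)


print(build_chapters(
    [(0, "What does a printer driver do?"), (42, "How to download the driver"), (95, "How to install the driver")],
    video_length=240,
))
```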

Perplexity AI (The Citation Engine)

Perplexity is an answer engine that prides itself on academic-style sourcing. It relies heavily on text-based retrieval from descriptions and external embeds. It values density over brevity.

Treat your YouTube description field as a mini-blog post. Do not just drop links. Write a summary of roughly 300 words in the style of an academic abstract, and cite your sources.

This text is highly crawlable. It often serves as the basis for Perplexity’s citation. If the description is rich in facts, Perplexity is more likely to index the video as a source.
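Before publishing, you can sanity-check a description against the roughly 300-word target and a list of entities it should mention. The entity list is hypothetical. The 5,000-byte ceiling reflects the YouTube Data API’s documented limit for `snippet.description`, which is worth confirming against the current API reference.

```python
import re

YOUTUBE_DESCRIPTION_MAX_BYTES = 5000  # documented limit for snippet.description in the YouTube Data API


def audit_description(description: str, entities: list[str], target_words: int = 300) -> dict:
    """Report word count, byte-limit compliance, and which expected entities are missing."""
    word_count = len(re.findall(r"\S+", description))
    lowered = description.lower()
    return {
        "word_count": word_count,
        "meets_target": word_count >= target_words,
        "within_limit": len(description.encode("utf-8")) <= YOUTUBE_DESCRIPTION_MAX_BYTES,
        "missing_entities": [e for e in entities if e.lower() not in lowered],
    }


draft = "In this video we define Zero Trust Architecture and compare it with perimeter security."
print(audit_description(draft, ["Zero Trust Architecture", "NIST SP 800-207", "microsegmentation"]))
```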

SearchGPT and OpenAI

SearchGPT is evolving to prioritize visual evidence and high-intent matching. It often surfaces videos when the query implies a need for visual confirmation.

Optimize the thumbnail for OCR. Since SearchGPT may display the video source visually, the text on your thumbnail should match the intent of the user’s query.

Take the query “best camera for vlogging.” If your thumbnail text says “DON’T BUY THIS,” the semantic match is weak. If it says “Top Vlogging Cameras 2025,” the OCR match is strong. This direct text match signals relevance to the visual processor.
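A crude way to compare candidate thumbnail texts is to measure how many of the query’s terms they contain. This assumes you have already extracted the thumbnail text with an OCR tool. The stopword list and the plural-stripping rule are simplistic assumptions, and the score does not reconstruct any engine’s actual thumbnail scoring.

```python
import re

STOPWORDS = {"a", "an", "the", "for", "of", "to", "in", "on", "this", "and"}


def terms(text: str) -> set[str]:
    """Lowercased word set with stopwords removed and a naive trailing-'s' plural strip."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w[:-1] if w.endswith("s") and len(w) > 3 else w for w in words if w not in STOPWORDS}


def query_coverage(query: str, thumbnail_text: str) -> float:
    """Fraction of the query's terms that also appear in the thumbnail text."""
    q = terms(query)
    return len(q & terms(thumbnail_text)) / len(q) if q else 0.0


query = "best camera for vlogging"
for candidate in ["DON'T BUY THIS", "Top Vlogging Cameras 2025"]:
    print(f"{candidate!r}: {query_coverage(query, candidate):.2f}")
```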

| AI Engine | Primary Data Source | Key Optimization Trigger | Critical Failure Point |
|---|---|---|---|
| Google Gemini | Direct YouTube API Access | Chapters & Schema.org | Missing timestamps or chapters |
| Perplexity AI | External Metadata & Transcripts | Description Semantic Density | Vague or empty descriptions |
| SearchGPT | Visuals + Text Embeddings | Thumbnail OCR & Entity Labels | Low-resolution visuals/text |
| Bing Copilot | Bing Indexing | Transcript Keyword Matching | Poor audio quality/Auto-captions |

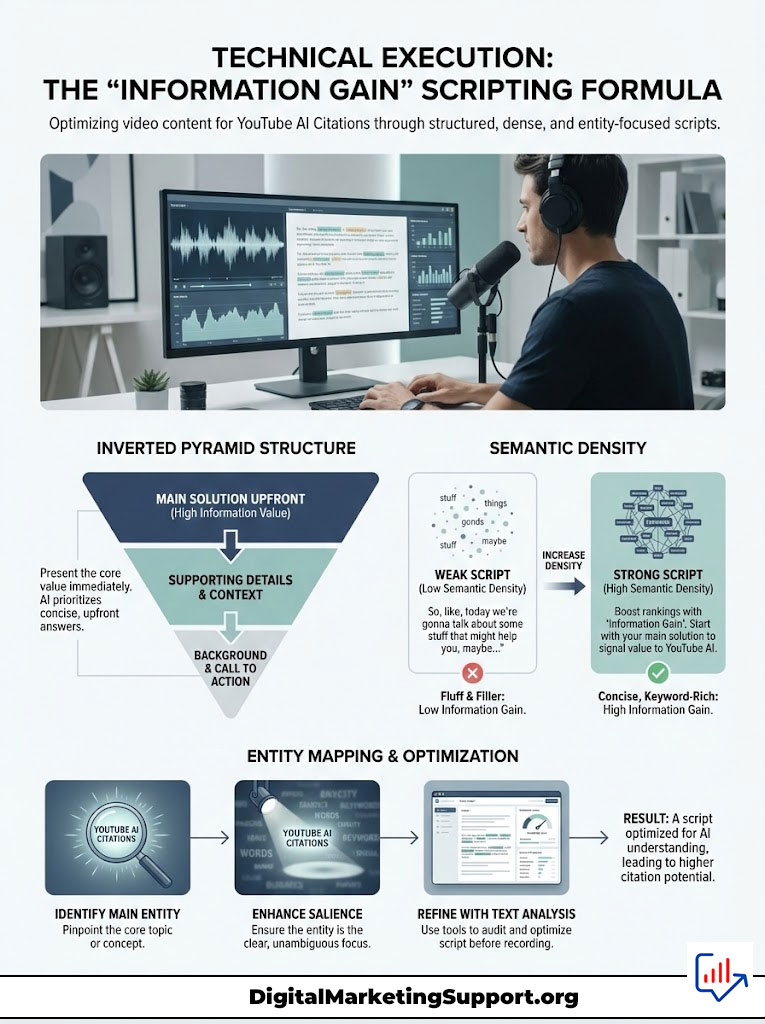

Technical Execution: The “Information Gain” Scripting Formula

To maximize YouTube AI Citations, the script itself must be engineered for “Information Gain.” This is a concept Google has patented. It refers to the amount of new, unique information a source provides compared to other documents in the index.
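Google’s patent describes its own scoring method, and the sketch below is not that method. It is a rough editorial proxy that counts how many of your script’s word pairs (bigrams) appear in none of the competing documents. The competitor texts are hypothetical. In practice you would use transcripts or articles that already rank for your query.

```python
import re


def bigrams(text: str) -> set[tuple[str, str]]:
    """Distinct adjacent word pairs in lowercased text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return set(zip(words, words[1:]))


def novelty_ratio(candidate: str, existing_docs: list[str]) -> float:
    """Share of the candidate's bigrams that appear in none of the existing documents."""
    cand = bigrams(candidate)
    if not cand:
        return 0.0
    seen = set().union(*(bigrams(doc) for doc in existing_docs))
    return len(cand - seen) / len(cand)


competitors = [
    "Clear your cache to fix a 404 error.",
    "A 404 error means the page was not found.",
]
script = ("A 404 error means the page was not found. "
          "Our crawl logs show most 404s come from expired campaign links.")
print(f"novelty: {novelty_ratio(script, competitors):.2f}")
```

The first sentence repeats what competitors already say. Only the second sentence, with its first-party data, adds new bigrams.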

The “Inverted Pyramid” for Video Scripts

Journalists have used the inverted pyramid for a century. You put the most critical information at the top. This style is incredibly effective for Video GEO SEO.

Start your video with the direct answer. Say, “To fix error 404, you must clear your cache.” Then, explain the context and details. Do not bury the solution.

This increases the probability that the first 30 seconds of your video will be used, for example as a featured snippet or an audio summary. If you bury the lede, the retrieval system may pass over your answer entirely.

It may also assign a lower relevance score to the opening of your content. Because the opening tends to be weighted heavily, this is a critical error.
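To check whether a script follows the inverted pyramid, find where the answer first appears. The helper below returns the timestamp of the first transcript line that contains every answer term. The transcripts and terms are hypothetical, and the 30-second threshold mirrors the window discussed above rather than any published cutoff.

```python
def first_answer_time(transcript, answer_terms):
    """Return the start second of the first line containing every answer term, else None."""
    terms = [t.lower() for t in answer_terms]
    for start, text in transcript:
        lowered = text.lower()
        if all(term in lowered for term in terms):
            return start
    return None


ANSWER_FIRST = [(0, "To fix error 404, you must clear your cache."), (6, "Here is why that works.")]
BURIED = [(0, "Hey guys, welcome back to the channel."), (95, "So, to fix a 404, clear your cache.")]

for name, transcript in [("answer-first", ANSWER_FIRST), ("buried", BURIED)]:
    t = first_answer_time(transcript, ["404", "clear", "cache"])
    flag = "OK" if t is not None and t <= 30 else "answer missing or after 30s"
    print(f"{name}: {t} -> {flag}")
```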

Increasing Semantic Density

Semantic Density is the ratio of unique, valuable information to total word count. A low-density script is full of repetition, hesitation, and filler. A high-density script is concise and fact-heavy.

Consider a low-density example. “So, like, a lot of people ask me about vectors. Vectors are really cool, and they are kind of like numbers.” This is weak.

Now consider a high-density example. “Vectors are mathematical representations of words in a high-dimensional space. They determine semantic closeness in NLP models.” This is strong.

The second example provides high information gain with fewer tokens. This efficiency is prized by AI Search algorithms. It reduces processing cost and increases relevance.
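The two examples can be compared with a simple proxy: distinct content words divided by total words. The `LOW_VALUE` list of fillers and function words is an assumption you would tune for your niche. The ratio is only a rough stand-in for “valuable information,” not a standard metric.

```python
import re

# Assumed list of fillers and function words; tune it for your own scripts.
LOW_VALUE = {
    "a", "an", "the", "and", "are", "is", "of", "in", "to", "they", "me", "about",
    "so", "like", "um", "uh", "really", "kind", "just", "basically", "actually", "lot",
}


def semantic_density(text: str) -> float:
    """Distinct content words divided by total words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if not words:
        return 0.0
    content = {w for w in words if w not in LOW_VALUE}
    return len(content) / len(words)


low = ("So, like, a lot of people ask me about vectors. "
       "Vectors are really cool, and they are kind of like numbers.")
high = ("Vectors are mathematical representations of words in a high-dimensional space. "
        "They determine semantic closeness in NLP models.")
print(f"low-density:  {semantic_density(low):.2f}")   # low-density:  0.24
print(f"high-density: {semantic_density(high):.2f}")  # high-density: 0.67
```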

Entity Mapping and Salience

Before recording, run your script through a text analysis tool. The demo for Google’s Cloud Natural Language API is excellent for this. Check the “Entities” tab.

Is the AI identifying the correct “Main Entity”? If your video is about “Enterprise SEO,” but the AI thinks the main entity is “Google,” you have a salience problem. This happens if you mention “Google” 50 times and “Enterprise SEO” only twice.

Rewrite the script to ensure your target topic is the most salient entity. Repeat the core entity name in different contexts to reinforce its importance.
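Google’s salience score comes from its own model. The Cloud Natural Language API returns it as each entity’s `salience` field when you run entity analysis. Raw mention counts are only a crude pre-check, but they catch the imbalance described above. The script and entity list below are hypothetical.

```python
import re


def mention_share(script: str, entities: list[str]) -> dict[str, float]:
    """Each entity's share of all entity mentions (whole-phrase, case-insensitive matches)."""
    counts = {
        e: len(re.findall(r"\b" + re.escape(e) + r"\b", script, flags=re.IGNORECASE))
        for e in entities
    }
    total = sum(counts.values())
    return {e: (c / total if total else 0.0) for e, c in counts.items()}


script = ("Google ranks pages. Google crawls sites. Google updates often. "
          "Enterprise SEO needs governance.")
shares = mention_share(script, ["Enterprise SEO", "Google"])
print(max(shares, key=shares.get), shares)  # Google dominates a script meant to be about Enterprise SEO
```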

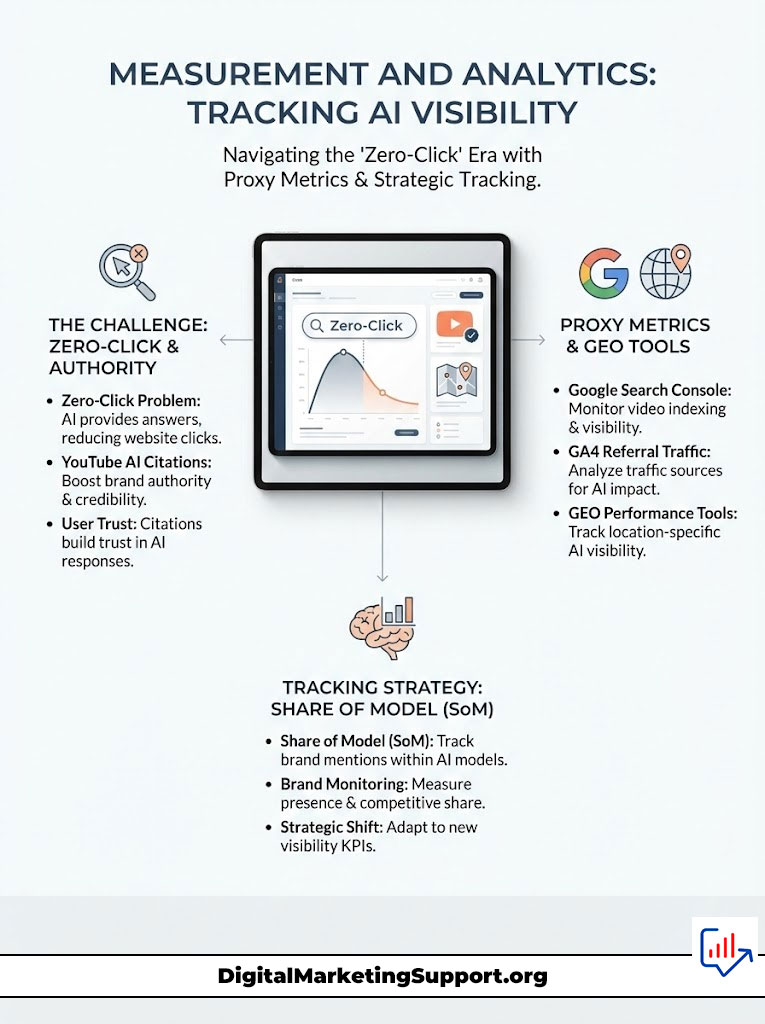

Measurement and Analytics: Tracking AI Visibility

How do you measure success in a world without clicks? The “Zero-Click” problem is real. YouTube AI Citations may reduce direct traffic to your channel page.

However, they increase brand authority and “Share of Mind.” We must accept that AI Engines satisfy user intent on the results page. The user gets the answer and leaves.

The attribution, however, builds trust. When a user sees your brand cited as the source for a complex technical answer, your brand gains a level of credibility that a simple thumbnail click cannot achieve.

Tools for Tracking GEO Performance

Currently, there is no “Google Analytics for AI Search.” However, we can use proxy metrics to gauge performance. Google Search Console is the first tool.

Use the “Video Page Indexing” report. Ensure your videos are indexed and that structured data is valid. If Google can’t index the video, Gemini can’t cite it.

Brand Monitoring is the second method. Use “Share of Model” (SoM) tracking. Manually or programmatically query AI Engines with your target keywords.

Record how often your brand or video is mentioned in the response compared to competitors. Finally, analyze Referral Traffic. In GA4, look for referral sources like “perplexity.ai” or “chatgpt.com.”
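Once you have recorded a batch of AI answers to your target queries, Share of Model is simple arithmetic: the fraction of answers that mention each brand. The sketch below also classifies exported referrer URLs by AI domain. The brand names, responses, and domain list are hypothetical. Substring matching misses paraphrased mentions, and the domains AI products use can change. Inside GA4 itself, you would filter sessions by their source instead.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed mapping of AI product domains; verify against what actually appears in your analytics.
AI_REFERRERS = {
    "perplexity.ai": "Perplexity",
    "chatgpt.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def share_of_model(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Fraction of recorded AI answers that mention each brand at least once (substring match)."""
    if not responses:
        return {brand: 0.0 for brand in brands}
    return {
        brand: sum(brand.lower() in r.lower() for r in responses) / len(responses)
        for brand in brands
    }


def classify_referrer(referrer_url: str) -> Optional[str]:
    """Map a referrer URL to an AI product name, or None if it is not a known AI domain."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for domain, product in AI_REFERRERS.items():
        if host == domain or host.endswith("." + domain):
            return product
    return None


# Hypothetical answers collected by querying AI engines with one target question.
responses = [
    "According to AcmeSec, Zero Trust starts with identity.",
    "AcmeSec's webinar covers microsegmentation in detail.",
    "ShieldCo recommends enforcing MFA everywhere.",
]
print(share_of_model(responses, ["AcmeSec", "ShieldCo"]))
print(classify_referrer("https://www.perplexity.ai/search?q=zero+trust"))
```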

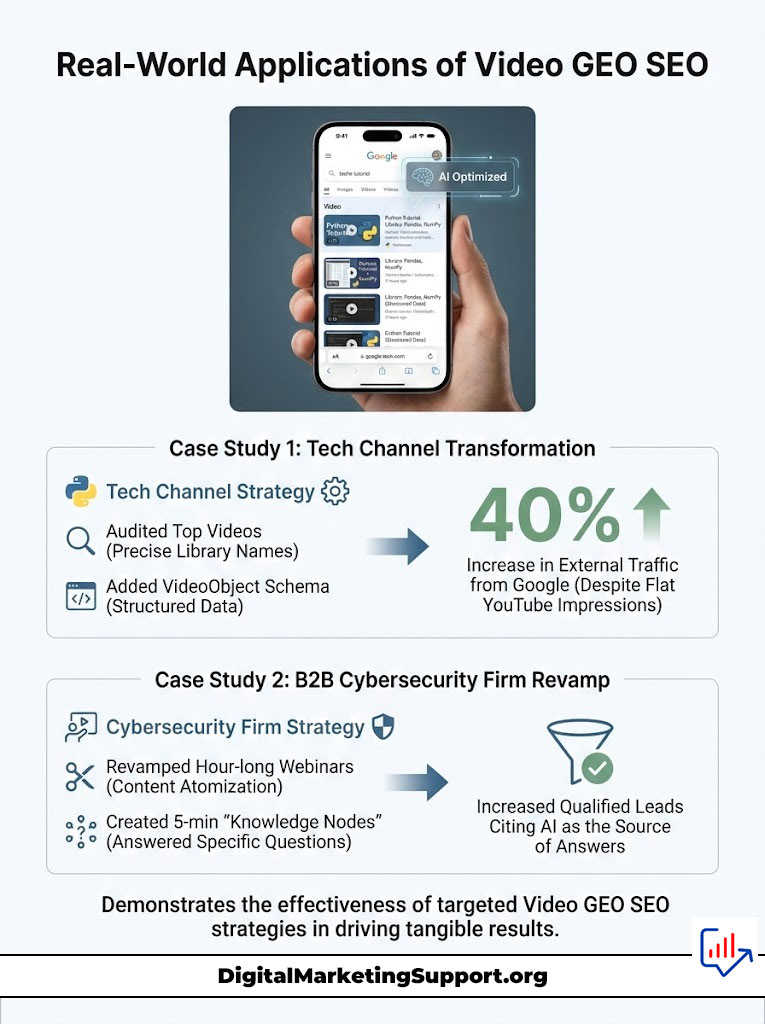

Case Studies and Industry Examples

Real-world application of Video GEO SEO demonstrates its power. Here are two examples illustrating the shift from traditional SEO to Generative Optimization.

The “How-To” Niche Winner

Consider a tech channel focused on Python programming. Historically, they optimized for “Python tutorial for beginners.” The competition was fierce. They shifted to Video GEO SEO.

They audited their top 20 videos. They corrected transcripts to include precise library names like Pandas and NumPy. They added `VideoObject` schema with `hasPart` timestamps for every specific function discussed.

Within weeks, their videos began appearing in Google’s AI Overviews. These appearances were for specific coding error queries. While their YouTube search impressions remained flat, their external traffic from “google.com” increased by 40%.

The Corporate Webinar Strategy

A B2B cybersecurity firm had a library of hour-long webinars. No one watched them. They were “dead” assets. They applied Video GEO SEO.

They broke these webinars into 5-minute “Knowledge Nodes.” Each node answered a specific question, such as “What is Zero Trust Architecture?” They added dense descriptions and academic citations.

Perplexity AI began citing these clips for niche industry questions. The firm saw a measurable uptick in qualified leads. These leads mentioned they “found the answer via AI.”

Summary & Key Takeaways

The transition from Video SEO to Video GEO SEO is not a trend. It is a necessary evolution of digital strategy. As AI Engines become the primary gateway to information, the ability to speak their language determines your survival.

We must stop creating content solely for human eyes. We must start engineering it for machine ingestion. The “Holy Trinity” of AI visibility remains constant.

First, you need Structured Data. Implement comprehensive `VideoObject` schema. Second, you need Semantic Transcripts. Ensure 100% accuracy and high entity density. Third, you need High Information Gain. Prioritize unique, dense value over fluff.

Do not wait for the tools to mature. Audit your top 10 performing videos today. Retrofit them with corrected transcripts and detailed schema. The YouTube AI Citations are there for the taking. They belong to those who build the architecture to claim them. Video GEO SEO is your blueprint for this new era.

Frequently Asked Questions

What is Video GEO SEO and how does it differ from traditional YouTube optimization?

Video GEO SEO focuses on optimizing for Generative Engines like Perplexity and Gemini rather than just human engagement metrics. While traditional SEO targets click-through rates and watch time, GEO prioritizes information gain, entity salience, and structured data to ensure AI models can verify and cite your content as a primary data source.

How do Large Language Models like Gemini 1.5 Pro process video content?

Modern multimodal AI engines ingest video through parallel processes including Automated Speech Recognition (ASR) for audio, Optical Character Recognition (OCR) for on-screen text, and object recognition for visual frames. This temporal alignment allows the model to create high-dimensional vector embeddings, mapping specific timestamps to semantic queries.

Why should I avoid relying on YouTube auto-generated captions for AI citations?

Auto-captions frequently misinterpret technical jargon, industry-specific acronyms, and brand names, which dilutes your semantic relevance and entity salience. For high-authority citations, you must implement human-in-the-loop transcript verification to ensure the LLM correctly tokenizes your core concepts and identifies your brand as a valid node in the Knowledge Graph.

What is Entity Salience and why is it critical for Video GEO?

Entity Salience refers to the calculated importance of a specific concept, person, or brand within your video transcript and metadata. By using clear nomenclature and signposting language, you help NLP models identify your target topic as the primary entity, increasing the probability that your video is retrieved as a relevant “chunk” during the generative process.

How does Retrieval-Augmented Generation (RAG) impact video content strategy?

RAG systems retrieve specific modular segments of information to provide grounded answers to user queries. To optimize for this, your videos should be structured into dense, information-rich “nodes” that can be easily isolated and cited, rather than spreading key answers across long, conversational narrative arcs that are difficult for machines to parse.

What are the best practices for implementing VideoObject Schema for AI engines?

Beyond basic fields, you should use the “hasPart” property to define specific clips with precise timestamps and the “transcript” field to provide a crawlable text version of your audio. Additionally, using the “about” property, with a “sameAs” link to the matching Wikidata or Wikipedia entry, helps disambiguate your topic and reinforces your authority within the semantic web.

How can I optimize video thumbnails for AI vision models like SearchGPT?

To optimize for multimodal models, ensure your thumbnails contain high-contrast, legible text that matches the semantic intent of the query. Since AI engines use OCR to analyze visual frames, having your primary entity or keyword clearly visible on the thumbnail provides a “dual-confirmation” signal that increases the confidence score for your content’s relevance.

What is the Information Gain scripting formula and why does it matter?

Information Gain is a measure of the unique, non-redundant value your content provides compared to existing data in the LLM’s index. To maximize this, your scripts should avoid “fluff” and filler, instead focusing on high-density explanations and unique insights that differentiate your video from generic content, making it a more attractive source for citation.

Why is the Inverted Pyramid structure recommended for AI-optimized video scripts?

The Inverted Pyramid places the most critical information and direct answers at the very beginning of the video, so the earliest part of the transcript already carries the answer. This helps the AI recognize the relevance of your content immediately, reducing the risk of the model assigning a low relevance score due to long, non-informative introductions.

How do Perplexity AI and Google Gemini differ in their video indexing methods?

Google Gemini has direct API access to YouTube’s internal metadata and relies heavily on structured chapters, whereas Perplexity AI often prioritizes the semantic density of your video description and external web embeds. To succeed on both, you must pair query-based YouTube chapters with a detailed, 300-word fact-heavy description that mimics an academic abstract.

What role does OCR play in modern Video GEO SEO strategies?

Optical Character Recognition allows AI to index the text displayed on your screen, such as lower-thirds, charts, and slide titles. By ensuring these visual elements are clear and keyword-rich, you provide a secondary data stream that validates your audio transcript, significantly improving your content’s verifiability and citation potential in AI search results.

How do I measure the success of Video GEO SEO if direct clicks are decreasing?

Success in the citation economy is measured through “Share of Model” (SoM) and brand attribution within AI-generated answers. You should track referral traffic from AI domains like chatgpt.com or perplexity.ai in GA4 and monitor how often your brand is cited as the primary source for high-intent industry queries compared to your competitors.

Disclaimer

This article is provided for general informational and strategic purposes only. Video GEO SEO is an emerging field, and while the techniques described are based on current AI ingestion protocols, specific results in AI Search citations cannot be guaranteed due to the proprietary and evolving nature of Large Language Models and search algorithms.

References

- Google Search Central – Video Structured Data Documentation – Official technical requirements for VideoObject Schema and Key Moments.

- DeepMind / Google Research – Gemini 1.5 Pro Technical Overview – Details on multimodal long-context windows and video ingestion capabilities.

- Perplexity AI – Perplexity Knowledge Base – Documentation on how the engine sources and cites external web and video data.

- Schema.org – VideoObject Vocabulary – The definitive standard for structured data entities used by all major search engines.

- OpenAI – SearchGPT Prototype Announcement – Insights into how generative models prioritize visual evidence and source attribution.

- Journal of Artificial Intelligence Research – Information Gain in NLP – Academic foundations for how models weigh unique data contributions in retrieval systems.