The world of artificial intelligence is no longer a distant, futuristic concept; it’s a tangible, powerful tool that is being woven into the fabric of our daily lives and business operations. In a landmark DevDay keynote held in San Francisco, the city where the company was founded, OpenAI pulled back the curtain on a suite of groundbreaking tools that promise to redefine how we interact with technology. Sam Altman, OpenAI’s CEO, set the stage by revealing staggering growth metrics that paint a clear picture: the AI revolution is not coming; it’s already here.

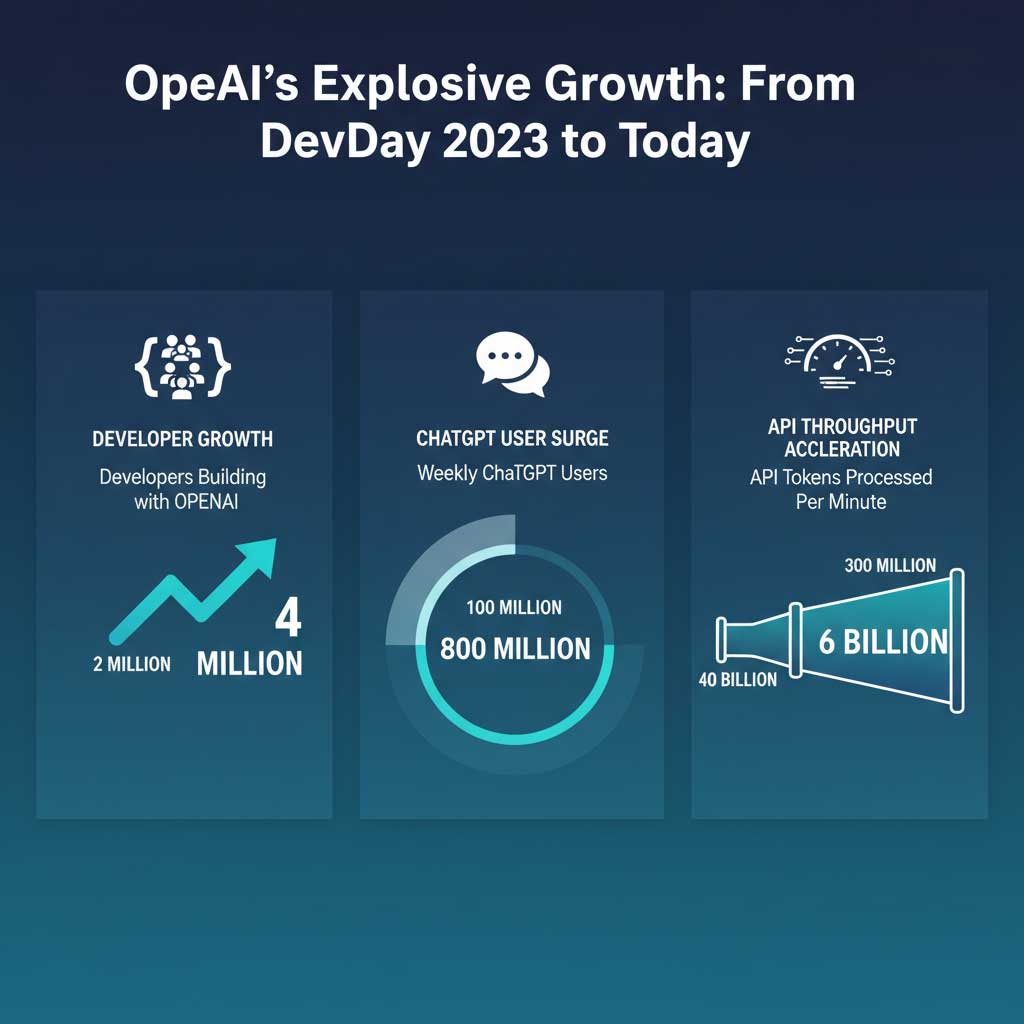

Almost two years on from the first DevDay in 2023, the growth has been nothing short of explosive.

The community of developers building on OpenAI’s platform has doubled, rocketing from 2 million to 4 million. Weekly active users of ChatGPT have surged from an impressive 100 million to a staggering 800 million people. Perhaps most telling for the scale of operations, the API processing throughput has accelerated from 300 million tokens per minute to over 6 billion tokens per minute.
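To put those figures side by side, the short Python sketch below computes each growth multiple from the numbers quoted above and converts the API throughput to tokens per second. It uses only the keynote figures reported here.

```python
# Growth figures quoted in the keynote: (2023 value, current value).
metrics = {
    "developers": (2_000_000, 4_000_000),
    "chatgpt_weekly_users": (100_000_000, 800_000_000),
    "api_tokens_per_minute": (300_000_000, 6_000_000_000),
}


def growth_multiple(before: int, after: int) -> float:
    """Return how many times larger `after` is than `before`."""
    return after / before


multiples = {name: growth_multiple(*pair) for name, pair in metrics.items()}

# 6 billion tokens per minute, expressed per second.
tokens_per_second = metrics["api_tokens_per_minute"][1] / 60

for name, multiple in multiples.items():
    print(f"{name}: {multiple:.0f}x")   # 2x, 8x, 20x
print(f"API throughput: {tokens_per_second:,.0f} tokens/second")  # 100,000,000
```

In other words, developers doubled, weekly users grew eightfold, and API throughput grew twentyfold, to roughly 100 million tokens every second.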

This isn’t just about numbers; it’s about a fundamental shift in perception and application. As Altman articulated, AI has transformed from something people “play with” to something people “build with every day.” The message was unequivocal: there has never been a better time in history to be a builder. With this powerful framing, the event zeroed in on four revolutionary announcements set to empower developers, marketers, and businesses like never before.

The Dawn of a New App Ecosystem: Building Directly Inside ChatGPT with the Apps SDK

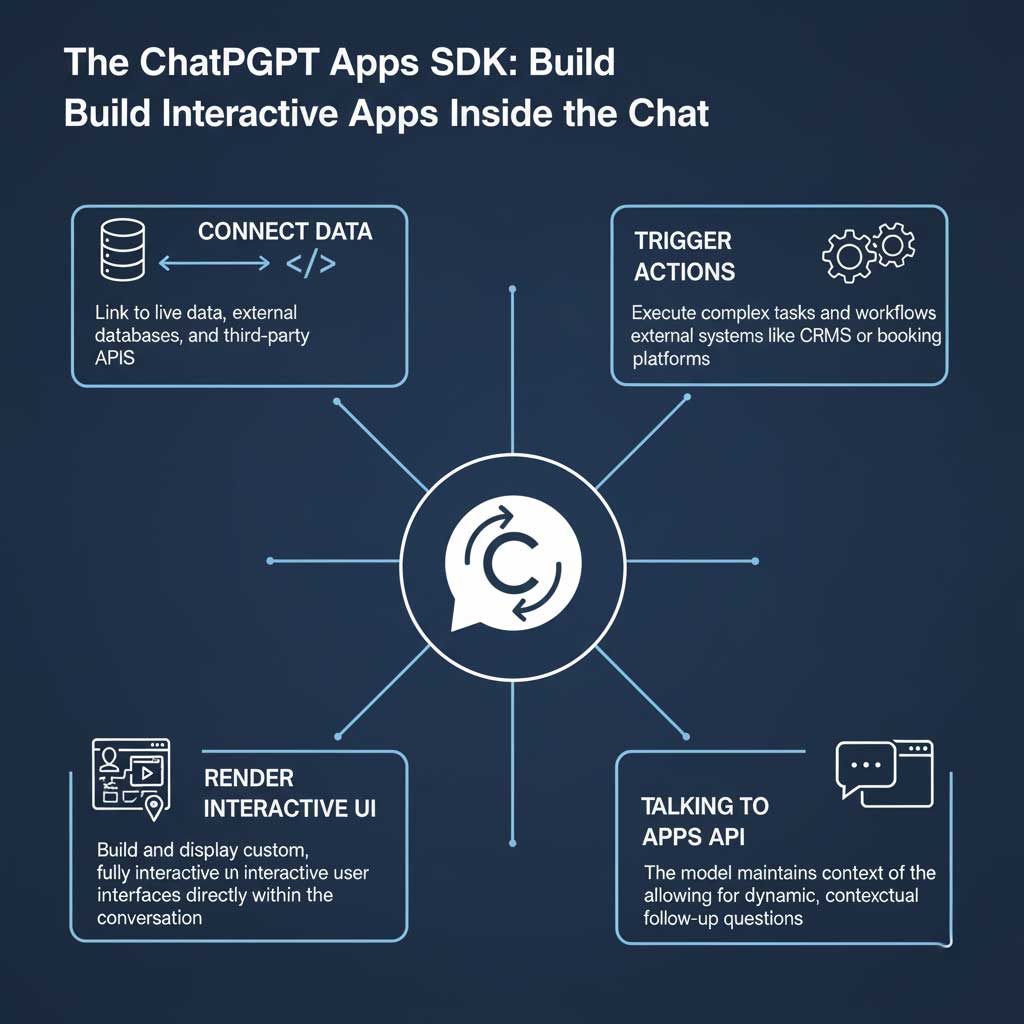

The first major reveal was a paradigm shift for conversational AI: the ability for developers to build fully interactive, rich applications directly inside the ChatGPT interface. This monumental leap is powered by the brand-new Apps SDK, a toolkit that transforms ChatGPT from a conversationalist into a dynamic, interactive operating system.

Key Features of the Groundbreaking Apps SDK

The Apps SDK is not merely a set of plugins; it’s a comprehensive development environment designed for depth, scale, and seamless user experience. It provides the full stack necessary to create meaningful applications that can be invoked and used without ever leaving the chat window.

Full-Stack Functionality and Open Standards

Developers are no longer limited to simple text-in, text-out interactions. The Apps SDK provides everything needed to:

- Connect Live Data: Pull in real-time information from external databases and APIs.

- Trigger Complex Actions: Execute tasks in third-party systems, from booking a reservation to updating a CRM.

- Render Interactive UI: Build and display custom, fully interactive user interfaces directly within the chat.

Crucially, this entire framework is built on MCP (Model Context Protocol), the open standard for connecting AI models to external tools and data, signaling a commitment to an interoperable and future-proof ecosystem.
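MCP messages are JSON-RPC 2.0 objects, and a client invokes a server-side tool with a `tools/call` request that names the tool and passes its arguments. The Python sketch below builds such a request and dispatches it in-process. It is an illustration under assumptions, not the Apps SDK itself: the `search_homes` tool, its canned data, and the `handle_request` dispatcher are hypothetical, there is no transport layer, and the `structuredContent` field reflects recent MCP spec revisions as we understand them.

```python
import json


# Hypothetical tool exposed by an illustrative MCP-style server.
def search_homes(city: str, min_bedrooms: int = 0) -> dict:
    """Stand-in for a live data lookup; filters a small canned list."""
    homes = [
        {"address": "12 Oak St", "city": "Seattle", "bedrooms": 3},
        {"address": "4 Pine Ave", "city": "Seattle", "bedrooms": 2},
    ]
    return {"homes": [h for h in homes
                      if h["city"] == city and h["bedrooms"] >= min_bedrooms]}


TOOLS = {"search_homes": search_homes}


def handle_request(raw: str) -> str:
    """Dispatch a JSON-RPC 2.0 `tools/call` request to a registered tool."""
    req = json.loads(raw)

    def error(code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": code, "message": message}})

    if req.get("method") != "tools/call":
        return error(-32601, "Method not found")  # standard JSON-RPC code
    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return error(-32602, "Unknown tool")
    data = tool(**params.get("arguments", {}))
    result = {
        # Text content for the model, plus the same data as structured JSON.
        "content": [{"type": "text", "text": json.dumps(data)}],
        "structuredContent": data,
    }
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "search_homes",
               "arguments": {"city": "Seattle", "min_bedrooms": 3}},
}
response = json.loads(handle_request(json.dumps(request)))
print(response["result"]["structuredContent"])
```

Because the protocol is an open standard, the same request shape works regardless of which client sends it; in a real app, an official MCP SDK would handle the transport and message framing.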

Unprecedented Reach and Intelligent Discovery

Building an app is only half the battle; getting it in front of users is paramount. The Apps SDK addresses this with a massive built-in user base and an intelligent discovery engine.

- Scale: Apps can instantly reach the hundreds of millions of users who interact with ChatGPT every week.

- Discovery by Name: A user can simply ask ChatGPT to use a specific app. For example, a user could upload a rough drawing and say, “Hey ChatGPT, use Figma to turn this sketch into a polished diagram.”

- Conversational Suggestions: The platform can also proactively suggest relevant apps. If a user asks, “Help me create a great playlist for my party this weekend,” ChatGPT might naturally respond by suggesting the Spotify app to build and share the playlist on the spot.
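The two discovery paths can be pictured with a deliberately naive Python sketch: first check whether the user named an app, otherwise suggest the app whose keywords overlap most with the message. The catalog, keywords, and `pick_app` function are hypothetical. The keynote did not describe how ChatGPT actually ranks apps, so treat this only as an illustration of the two modes.

```python
import re
from typing import Optional, Tuple

# Hypothetical catalog: app name -> keywords that hint the app is relevant.
APPS = {
    "Figma": {"diagram", "sketch", "wireframe"},
    "Spotify": {"playlist", "music", "song"},
    "Zillow": {"home", "homes", "house", "apartment"},
}


def pick_app(message: str) -> Optional[Tuple[str, str]]:
    """Return (app, mode), where mode is "named" or "suggested", or None."""
    text = message.lower()
    # 1. Direct invocation: the user mentions the app by name.
    for app in APPS:
        if app.lower() in text:
            return app, "named"
    # 2. Conversational suggestion: score apps by keyword overlap.
    words = set(re.findall(r"[a-z]+", text))
    scores = {app: len(words & keywords) for app, keywords in APPS.items()}
    best = max(scores, key=lambda app: scores[app])
    return (best, "suggested") if scores[best] > 0 else None


print(pick_app("Hey ChatGPT, use Figma to turn this sketch into a polished diagram."))
print(pick_app("Help me create a great playlist for my party this weekend"))
```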

The Future of Conversational Commerce: Monetization and Instant Checkout

OpenAI is already planning the commercial future of this new ecosystem. The roadmap includes robust support for monetization, allowing developers to build businesses on the platform. Furthermore, the introduction of the Agentic Commerce Protocol will enable instant, frictionless checkout right inside ChatGPT, creating a new, powerful channel for e-commerce and service providers.

“Talking to Apps”: A Breakthrough in Contextual Awareness

Perhaps the most futuristic feature is the “Talking to Apps” API. This exposes context from the running application back to the ChatGPT model. In simple terms, ChatGPT always knows what you are seeing and interacting with inside an app. This allows for a deeply contextual and dynamic conversation, where you can ask follow-up questions about the specific content being displayed on your screen.
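Conceptually, exposing app context means the host serializes what the app is showing and places it in the model's context next to the user's question. The sketch below uses a hypothetical message format (`widget_context_message`, the `player_state` fields) purely to illustrate the idea; it is not the Apps SDK interface.

```python
import json


def widget_context_message(app: str, state: dict) -> dict:
    """Wrap an app's visible state as a context message (hypothetical format)."""
    return {
        "role": "system",
        "content": (
            f"The user is viewing the {app} app. "
            f"Visible state: {json.dumps(state, sort_keys=True)}"
        ),
    }


# Hypothetical state reported by a video-player app.
player_state = {"lecture": "Week 2: Gradient Descent", "position_seconds": 135, "paused": True}
conversation = [{"role": "user", "content": "Explain what he's saying here more simply."}]

# Put the app context ahead of the follow-up so the model can resolve "here".
messages = [widget_context_message("Coursera", player_state)] + conversation
print(messages[0]["content"])
```

Without the context message, "here" is ambiguous; with it, the model can tie the question to the paused position in the lecture.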

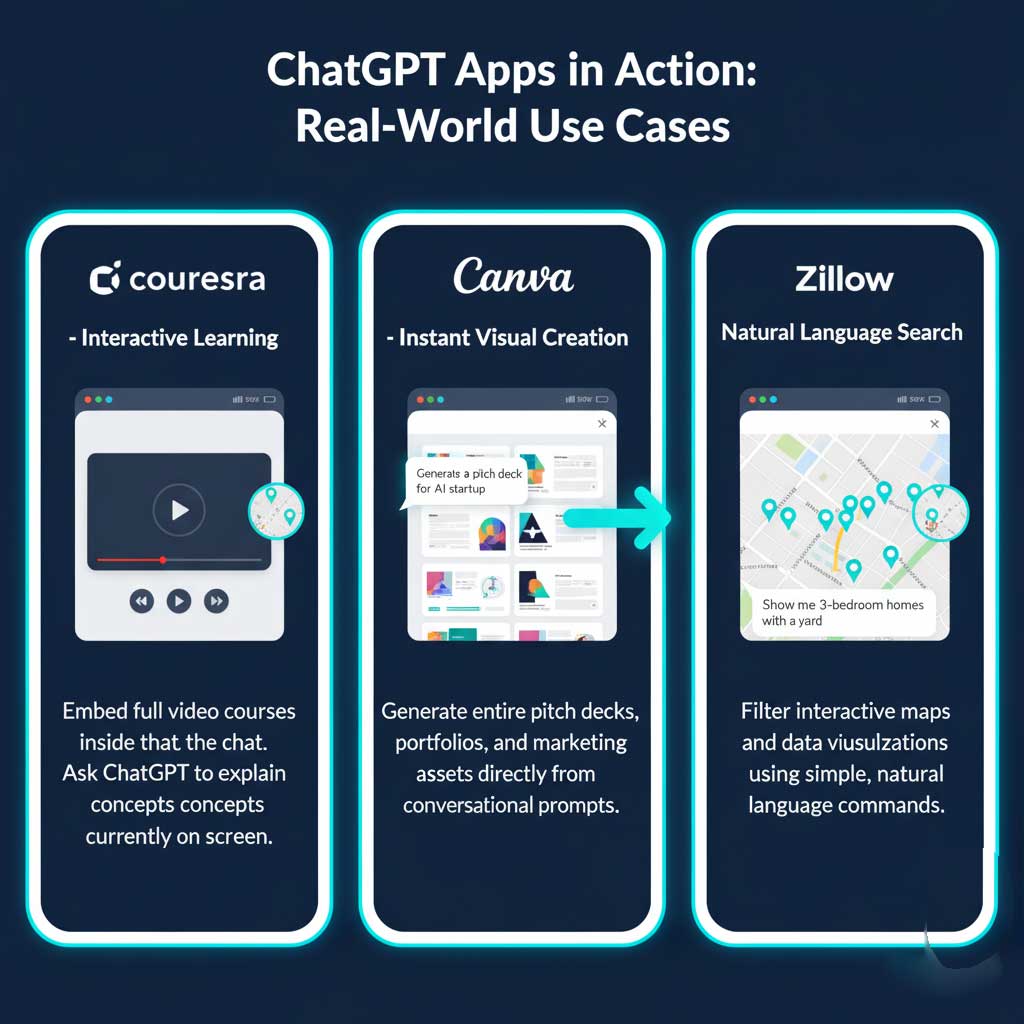

Real-World Examples in Action

To demonstrate the tangible power of the Apps SDK, OpenAI showcased several integrations from major partners.

- Coursera: The demo revealed a Coursera video course playing directly inline within the chat. A student could watch the lecture in picture-in-picture or full-screen mode. Leveraging the “Talking to Apps” feature, the student could then pause and ask, “Explain the concept he’s talking about at the 2:15 mark in a simpler way,” and ChatGPT would provide a tailored explanation based on the visual content of the video.

- Canva: This integration highlighted the power of generative AI for visual marketing. A user could have a conversation about a new business idea, and then simply ask Canva to generate a complete, multi-page pitch deck or a professional portfolio based on the text prompts discussed in the chat.

- Zillow: The Zillow demo brought real estate searches to life. An interactive map of homes for sale was embedded directly into the conversation. Instead of using clunky filters, the user could refine the search with natural language, saying things like, “This is great, but filter this to just the three-bedroom homes with a yard,” and watch the map update in real time.
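Behind a refinement like the Zillow one, the model's job is to turn natural language into structured filter arguments that the app then applies to its data. A minimal Python sketch of the app side, with hypothetical `Listing` and `Filter` types and made-up listings (this is not Zillow's code):

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Listing:
    address: str
    bedrooms: int
    has_yard: bool
    price: int


@dataclass
class Filter:
    # None means "no constraint on this field".
    bedrooms: Optional[int] = None
    has_yard: Optional[bool] = None
    max_price: Optional[int] = None


def apply_filter(listings: List[Listing], f: Filter) -> List[Listing]:
    """Keep only listings that satisfy every constraint that is set."""
    def matches(home: Listing) -> bool:
        return (
            (f.bedrooms is None or home.bedrooms == f.bedrooms)
            and (f.has_yard is None or home.has_yard == f.has_yard)
            and (f.max_price is None or home.price <= f.max_price)
        )
    return [home for home in listings if matches(home)]


listings = [
    Listing("1 Elm St", 3, True, 650_000),
    Listing("2 Elm St", 3, False, 600_000),
    Listing("3 Elm St", 4, True, 900_000),
]
# Arguments a model might extract from "just the three-bedroom homes with a yard".
refined = apply_filter(listings, Filter(bedrooms=3, has_yard=True))
print([home.address for home in refined])  # ['1 Elm St']
```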

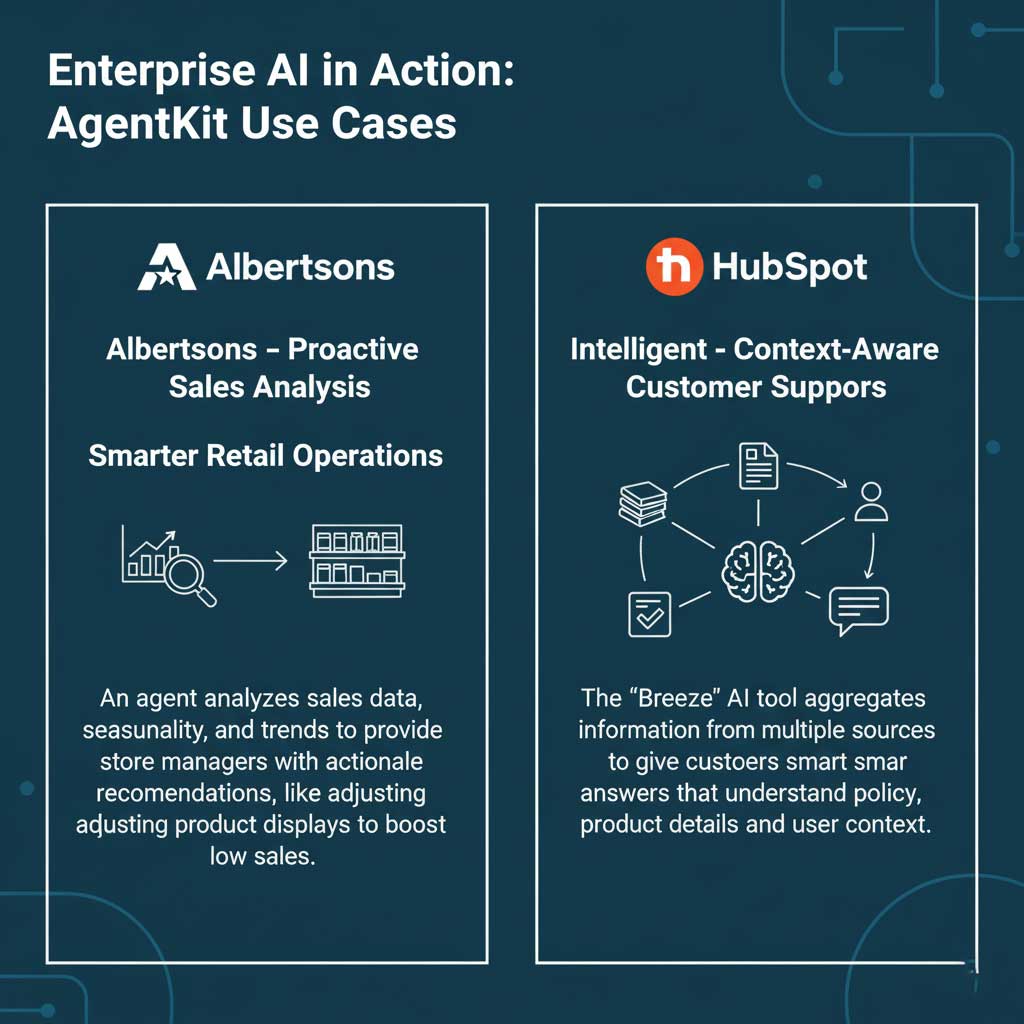

From Prototype to Production: Simplifying AI Agents with AgentKit

While AI agents—autonomous systems that can perform multi-step tasks—are a hot topic, OpenAI acknowledged a critical industry challenge: very few of them ever make it into a production environment. The complexity of orchestration, evaluation, and tooling often leaves promising prototypes stuck in development. To solve this, OpenAI introduced AgentKit.

AgentKit is a complete and cohesive set of building blocks integrated into the OpenAI platform, meticulously designed to help developers move agents from a rough idea to a production-ready application with significantly less friction and time.

The Core Components of AgentKit

AgentKit is composed of four key elements that address the entire agent development lifecycle.

- Agent Builder: This is a fast, visual workflow designer that allows developers to map out the logic and steps of their agent. Built on the existing Responses API, it provides an intuitive, low-code environment to design flows, test different paths, and rapidly ship ideas.

- ChatKit: Recognizing that building a polished chat interface is a project in itself, OpenAI is providing ChatKit. This is a pre-built, beautifully designed, and embeddable chat component that developers can drop directly into their own applications. It’s fully brandable, allowing businesses to maintain their visual identity while offloading the complexity of UI development and focusing on their unique product workflows.

- Evals for Agents: You can’t improve what you can’t measure. AgentKit introduces a powerful new suite of evaluation tools dedicated to measuring agent performance. This includes trace grading, which allows you to understand the agent’s step-by-step decisions to pinpoint where it went wrong. It also provides datasets for assessing individual nodes in the agent’s logic and tools for automated prompt optimization. These evaluations can even be run on external, third-party models.

- Connector Registry: A truly useful agent needs access to data and tools. The Connector Registry is a secure admin control panel that allows businesses to safely connect their agents to internal databases, proprietary tools, and third-party systems like Salesforce or Zendesk.
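Trace grading can be pictured as running a grader over each recorded step of an agent run and reporting where things first went wrong. The sketch below is a generic illustration, not AgentKit's evals API; the `Step` type, the grader functions, and the sample trace are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    name: str    # which node or tool produced this step
    output: str  # what the step returned


# A grader inspects one step and returns True if it looks correct.
Grader = Callable[[Step], bool]


def first_failure(trace: List[Step], graders: Dict[str, Grader]) -> Optional[str]:
    """Grade steps in order and return the name of the first one that fails."""
    for step in trace:
        grader = graders.get(step.name)
        if grader is not None and not grader(step):
            return step.name
    return None


trace = [
    Step("classify_intent", "refund_request"),
    Step("lookup_order", "order not found"),
    Step("draft_reply", "Your refund has been issued."),
]
graders: Dict[str, Grader] = {
    "classify_intent": lambda s: s.output in {"refund_request", "order_status"},
    "lookup_order": lambda s: "not found" not in s.output,
    "draft_reply": lambda s: len(s.output) > 0,
}
print(first_failure(trace, graders))  # lookup_order
```

Grading only the final reply would miss the real problem here: the reply looks fine, but it was built on a failed order lookup one step earlier.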

AgentKit Empowering Real-World Enterprise Solutions

The impact of AgentKit is already being felt by enterprise partners.

- Albertsons: The grocery giant used AgentKit to build a sophisticated agent for its store managers. The agent analyzes complex sales data, considering factors like seasonality, local events, and historical trends. It can then offer actionable recommendations, for example, proactively flagging that ice cream sales are low for the season and suggesting a new promotional display.

- HubSpot: The marketing and sales platform enhanced its AI tool, Breeze, using AgentKit. The new version can aggregate information from the company’s vast knowledge base, complex policy documents, and local user context to provide customers with smart, genuinely useful answers that feel personalized and accurate.
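A seasonality check like the ice cream example boils down to comparing current sales with the same period in previous years. Here is a minimal sketch with hypothetical numbers and an assumed 80% threshold; the keynote did not describe the Albertsons agent's actual logic.

```python
from statistics import mean
from typing import Sequence


def is_low_for_season(current: float, same_week_history: Sequence[float],
                      threshold: float = 0.8) -> bool:
    """True if current sales are below `threshold` times the seasonal average."""
    baseline = mean(same_week_history)
    return current < threshold * baseline


# Hypothetical unit sales for the same summer week in the last three years.
history = [1200, 1350, 1280]
this_week = 900

if is_low_for_season(this_week, history):
    print("Ice cream sales are low for the season; consider a promotional display.")
```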

To cap it off, a developer demonstrated the sheer speed of the platform by building and deploying a specialized DevDay agent named “Ask Froge” live on stage in under 8 minutes. The agent used the visual builder, implemented pre-built safety guardrails like PII (Personally Identifiable Information) masking, connected to a file search tool to access event data, and even integrated a custom visual widget to display session schedules.
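A PII masking guardrail replaces sensitive values before text reaches the model or the user. The keynote did not describe how AgentKit's built-in guardrail works, so the regex-based Python sketch below only illustrates the technique; its two patterns are simplistic assumptions that miss many formats.

```python
import re

# Deliberately simple patterns; real PII detection needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # Optional country code, then a 3-3-4 digit North American style number.
    "PHONE": re.compile(r"(?:\+?\d{1,2}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}"),
}


def mask_pii(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] or [PHONE]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(mask_pii("Contact jane.doe@example.com or 415-555-0134."))
# Contact [EMAIL] or [PHONE].
```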

The Future of Software Development Is Here: Codex Is Now Generally Available, Powered by GPT-5-Codex

The third major announcement centered on how AI is fundamentally changing the act of writing software itself. OpenAI’s vision is to make it possible for anyone with a great idea to build an application, regardless of their coding expertise. The key to this vision is Codex.

Codex and the Power of GPT-5

Codex, OpenAI’s advanced software engineering agent, is officially graduating from a research preview to General Availability (GA). This move is supercharged by a massive upgrade under the hood: Codex now runs on GPT-5-Codex, a version of GPT-5 trained specifically for agentic coding tasks. It excels at complex assignments like code refactoring and in-depth code reviews, and it can even dynamically adjust its “thinking time” to devote more computational resources to harder problems.

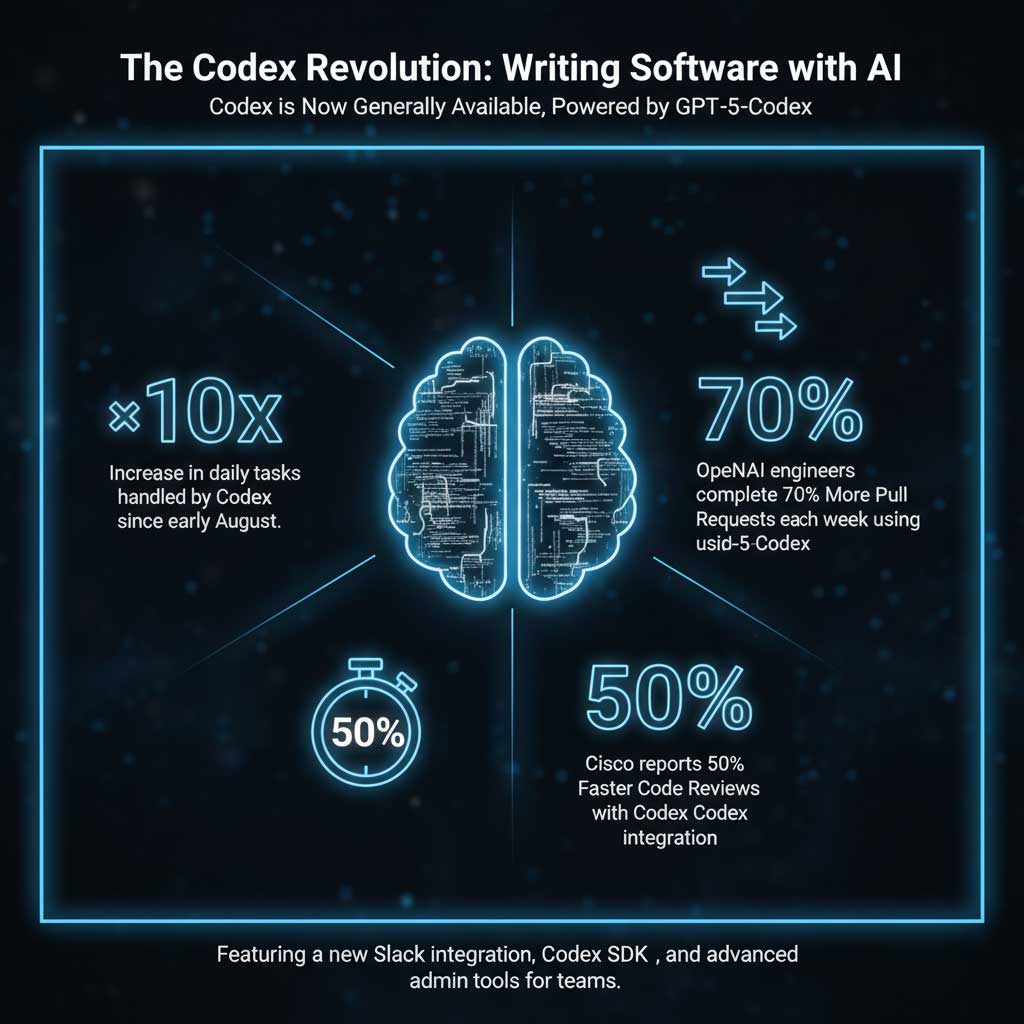

The impact has been immediate and profound. Since early August, the number of daily tasks handled by Codex has grown tenfold. Internally, OpenAI engineers who use Codex complete 70% more pull requests each week, and nearly every pull request now goes through a Codex review before being merged.

New Features for Engineering Teams

To support professional development teams, Codex is launching with a suite of new enterprise-grade features:

- A native Slack integration for seamless workflow collaboration.

- A new Codex SDK to allow teams to extend and automate Codex within their own CI/CD pipelines and development workflows.

- Advanced admin tools and reporting, including environment controls and analytics dashboards for monitoring usage and performance.

Partners like Cisco are already seeing massive productivity gains, reporting that code reviews are happening 50% faster since integrating Codex.

Live Demo: Coding with the Physical World

The Codex demo was a stunning display of connecting software to the physical world with minimal human coding. Using the Codex CLI and a VS Code extension, a developer performed a series of incredible tasks live on stage:

- He took a quick paper sketch of a UI and asked Codex to build a simple control panel application from it.

- He then told Codex to implement the VISCA protocol to control a professional Sony FR7 camera on stage, which it did by writing the necessary code.

- He then wired up a wireless Xbox controller to control the camera simply by sending a task to the IDE extension.

- Using the Realtime API and the Agents SDK, he added voice control to the application.

- In a remarkable display of agentic behavior, he asked Codex to build an MCP server for the stage lighting system using only reference documents. Codex autonomously found the required information, including GitHub docs for a specific command, and built the integration.

- Finally, using the new Codex SDK, he asked the voice agent to “generate a credits overlay,” and Codex reprogrammed the running application in real-time, adding the new visual feature on the fly.
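For a sense of what the camera code in that demo involves: VISCA commands are short byte packets, and the pan-tilt drive command has the form `8x 01 06 01 VV WW YY ZZ FF`, where `x` is the camera address, `VV`/`WW` are pan and tilt speeds, and `YY`/`ZZ` are direction codes. The Python sketch below maps gamepad stick axes to that packet. It is based on Sony's commonly published VISCA command set, not on the code Codex generated on stage; the speed limits, dead zone, and axis sign convention are assumptions to check against the FR7's own command list, and actually sending the bytes (for example over VISCA over IP) is left out.

```python
# Sony VISCA pan-tilt drive packet: 8x 01 06 01 VV WW YY ZZ FF
#   x  = camera address (1-7)      VV = pan speed      WW = tilt speed
#   YY = pan direction  (01 left, 02 right, 03 stop)
#   ZZ = tilt direction (01 up,   02 down,  03 stop)
PAN_MAX_SPEED = 0x18   # assumed maximum; check the camera's command list
TILT_MAX_SPEED = 0x17  # assumed maximum
DEADZONE = 0.15        # ignore small stick drift around the center
STOP = 0x03


def axis_direction(value: float, negative_code: int, positive_code: int) -> int:
    """Pick a VISCA direction code for one stick axis, or STOP inside the dead zone."""
    if abs(value) < DEADZONE:
        return STOP
    return negative_code if value < 0 else positive_code


def axis_speed(value: float, max_speed: int) -> int:
    """Scale |value| in [0, 1] to a speed byte in 1..max_speed."""
    return max(1, min(max_speed, round(abs(value) * max_speed)))


def pan_tilt_command(x: float, y: float, address: int = 1) -> bytes:
    """Build a pan-tilt drive packet from stick axes in [-1, 1].

    Assumed convention: x < 0 pans left, y > 0 tilts up. Gamepad
    libraries differ on the sign of y, so flip it if needed.
    """
    return bytes([
        0x80 | address, 0x01, 0x06, 0x01,
        axis_speed(x, PAN_MAX_SPEED),
        axis_speed(y, TILT_MAX_SPEED),
        axis_direction(x, 0x01, 0x02),  # left / right
        axis_direction(y, 0x02, 0x01),  # down / up
        0xFF,
    ])


print(pan_tilt_command(1.0, 0.0).hex(" "))   # 81 01 06 01 18 01 02 03 ff
print(pan_tilt_command(0.0, 0.05).hex(" "))  # 81 01 06 01 01 01 03 03 ff
```

Calling `pan_tilt_command` on every controller poll and sending the result to the camera gives continuous joystick control; a stick at rest produces a stop packet.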

Unleashing Unprecedented Power: GPT-5 Pro, Realtime Voice, and Sora 2 API Updates

The final set of announcements focused on the core models and APIs that power the entire OpenAI ecosystem, delivering a massive leap in intelligence, speed, and creative potential.

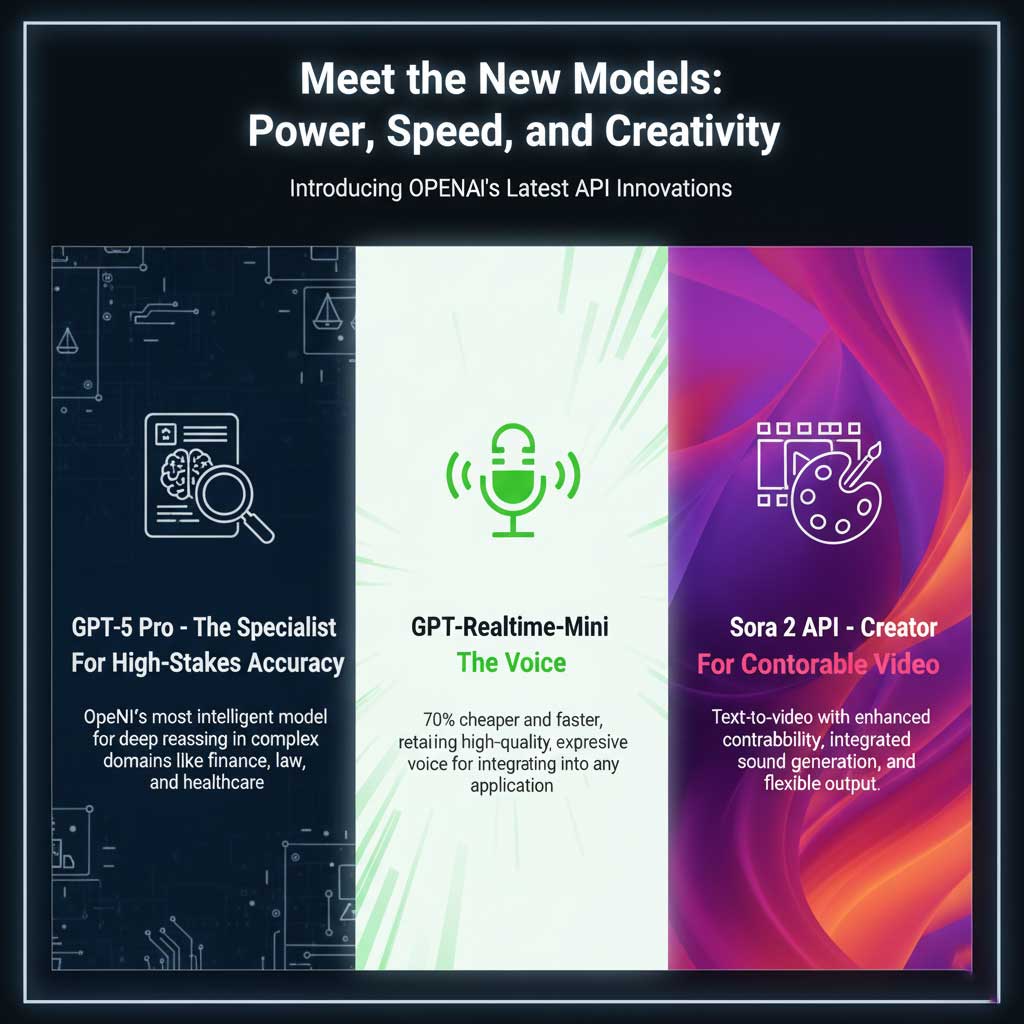

| Model / API | Target Audience | Key Features & Benefits |
|---|---|---|
| GPT-5 Pro API | Developers, Enterprises | OpenAI’s most intelligent model ever. Designed for high-accuracy, deep reasoning in complex domains like finance and law. |
| GPT-Realtime-Mini | Developers, Voice Apps | Smaller, faster, and 70% cheaper than the advanced voice model. Retains high-quality, expressive voice for scalable applications. |
| Sora 2 API Preview | Creators, Marketers, Studios | Text-to-video with enhanced controllability, integrated sound generation, and flexible output parameters (length, aspect ratio, resolution). |

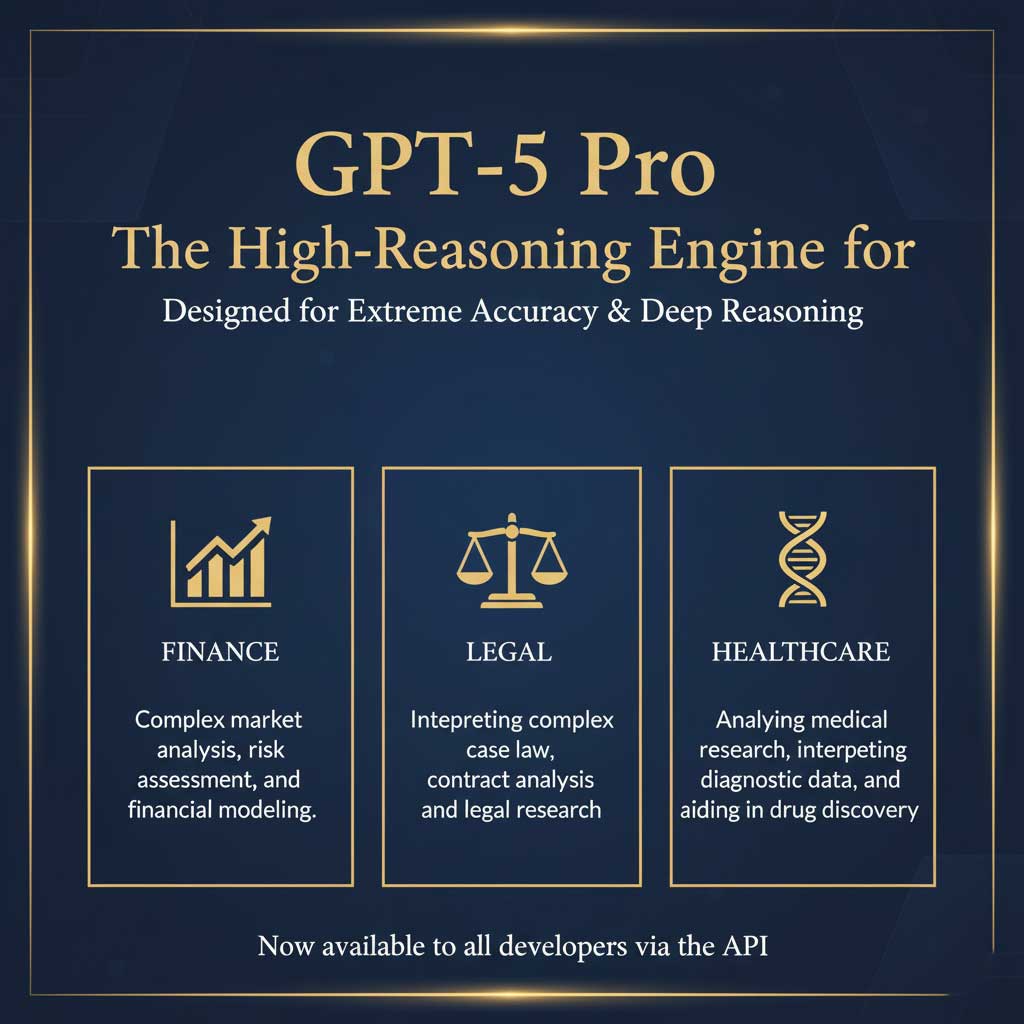

The Launch of the GPT-5 Pro API

The moment many developers have been waiting for is here: GPT-5 Pro, described as the most intelligent and capable model OpenAI has ever shipped, is now available to all developers via the API. This model is not intended as a replacement for everyday chatbot tasks; it is a highly specialized engine designed for the most difficult problems in domains requiring extreme accuracy and depth of reasoning, such as finance, legal, and healthcare.

Making Advanced Voice Accessible with GPT-Realtime-Mini

OpenAI firmly believes that voice is a primary way people will interact with AI in the future. To accelerate this, they have released GPT-Realtime-Mini. This is a smaller, faster, and 70% cheaper version of their state-of-the-art voice model. Crucially, it retains the same incredible voice quality and emotional expressiveness, making it feasible for developers to integrate high-fidelity, real-time voice interactions into applications at scale.
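“70% cheaper” means paying 30% of the larger model's price, so the same budget covers roughly 3.3 times as much usage. The arithmetic, using hypothetical unit prices since no prices are quoted here:

```python
def discounted_price(full_price: float, discount: float) -> float:
    """Price after a fractional discount, e.g. discount=0.70 for '70% cheaper'."""
    return full_price * (1 - discount)


full_price = 1.00   # hypothetical cost per unit of audio on the larger model
mini_price = discounted_price(full_price, 0.70)
budget = 300.00     # hypothetical budget in the same currency

print(f"mini price per unit: {mini_price:.2f}")                             # 0.30
print(f"usage multiplier for the same budget: {full_price / mini_price:.2f}x")  # 3.33x
print(f"units covered: full={budget / full_price:.0f}, mini={budget / mini_price:.0f}")
```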

The Next Generation of Video: Sora 2 API Preview

The text-to-video model that stunned the world is getting its first major API release. A preview of the Sora 2 API is now being opened to creators, and it comes with significant improvements.

Enhanced Controllability and Sound Integration

A major focus for Sora 2 has been controllability. Developers can now provide highly detailed instructions to generate stylized, accurate, and perfectly composed video results. The most significant new feature, however, is integrated sound. Sora 2 can now pair visuals with audio, generating rich soundscapes, ambient noise, and perfectly synchronized sound effects that are grounded in the video’s content.

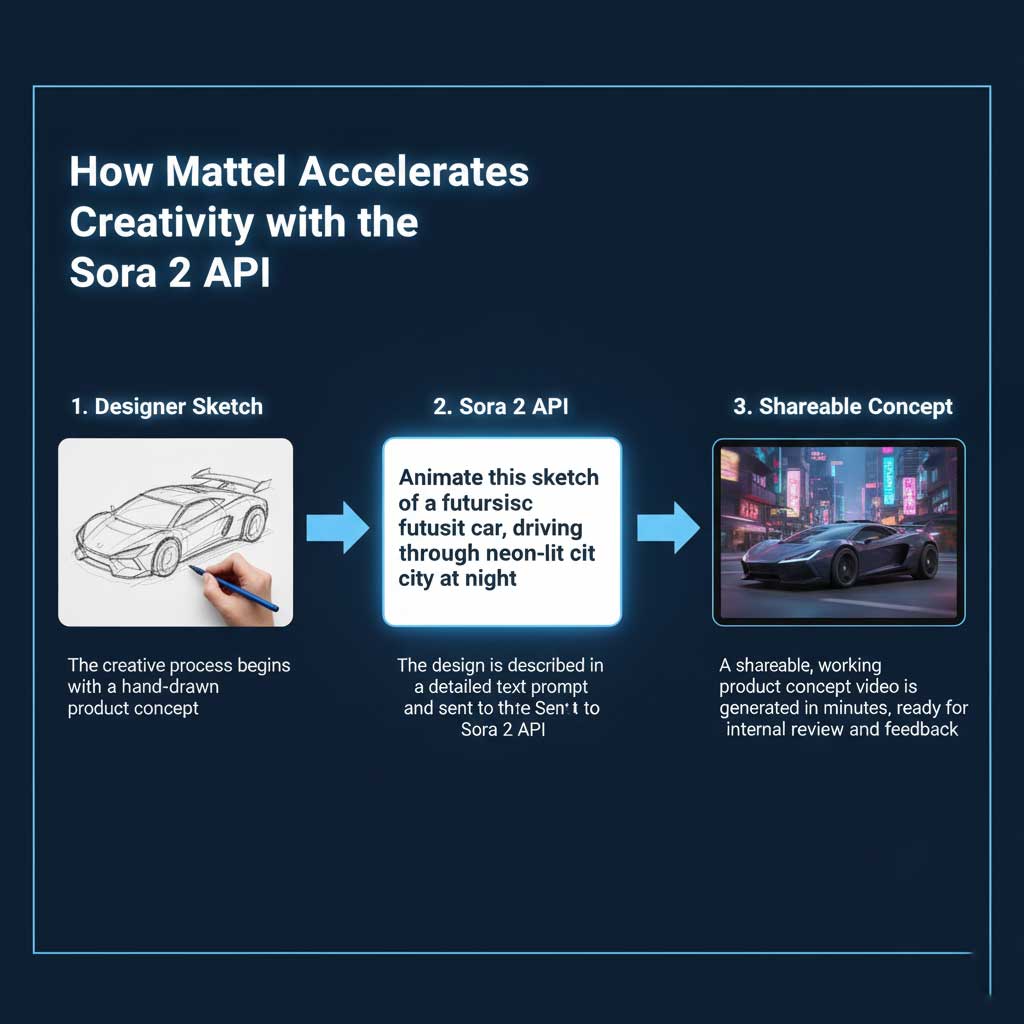

Flexibility and Real-World Use Cases

The API gives developers control over key parameters like video length, aspect ratio, and resolution, and even allows for easy remixing of existing videos. This is already unlocking powerful workflows for marketing and design teams. Partner Mattel, for instance, is using the Sora 2 API to turn designer sketches into shareable, working product concepts in a fraction of the time, dramatically accelerating their creative pipeline.
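Aspect ratio and resolution together determine the frame size. The sketch below derives width and height from an aspect ratio and the length of the shorter side, then assembles a request dictionary. The `video_request` field names are placeholders rather than the documented Sora API schema, so check the official reference before relying on them.

```python
from fractions import Fraction
from typing import Tuple


def frame_size(aspect: str, short_side: int) -> Tuple[int, int]:
    """(width, height) for an aspect like "16:9" whose shorter side is `short_side` px."""
    w, h = (int(part) for part in aspect.split(":"))
    ratio = Fraction(w, h)
    if ratio >= 1:  # landscape or square: height is the short side
        return round(short_side * ratio), short_side
    return short_side, round(short_side / ratio)  # portrait: width is the short side


def video_request(prompt: str, aspect: str = "16:9", short_side: int = 720,
                  seconds: int = 8) -> dict:
    """Assemble request parameters; field names are placeholders, not the real schema."""
    width, height = frame_size(aspect, short_side)
    return {"prompt": prompt, "size": f"{width}x{height}", "seconds": seconds}


print(frame_size("16:9", 720))  # (1280, 720)
print(video_request("A toy robot unboxing itself on a kitchen table", aspect="9:16"))
```

Using `Fraction` keeps the ratio exact, so common sizes like 1280x720 come out without floating-point rounding surprises.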

Conclusion: The New Age of the AI-Powered Builder

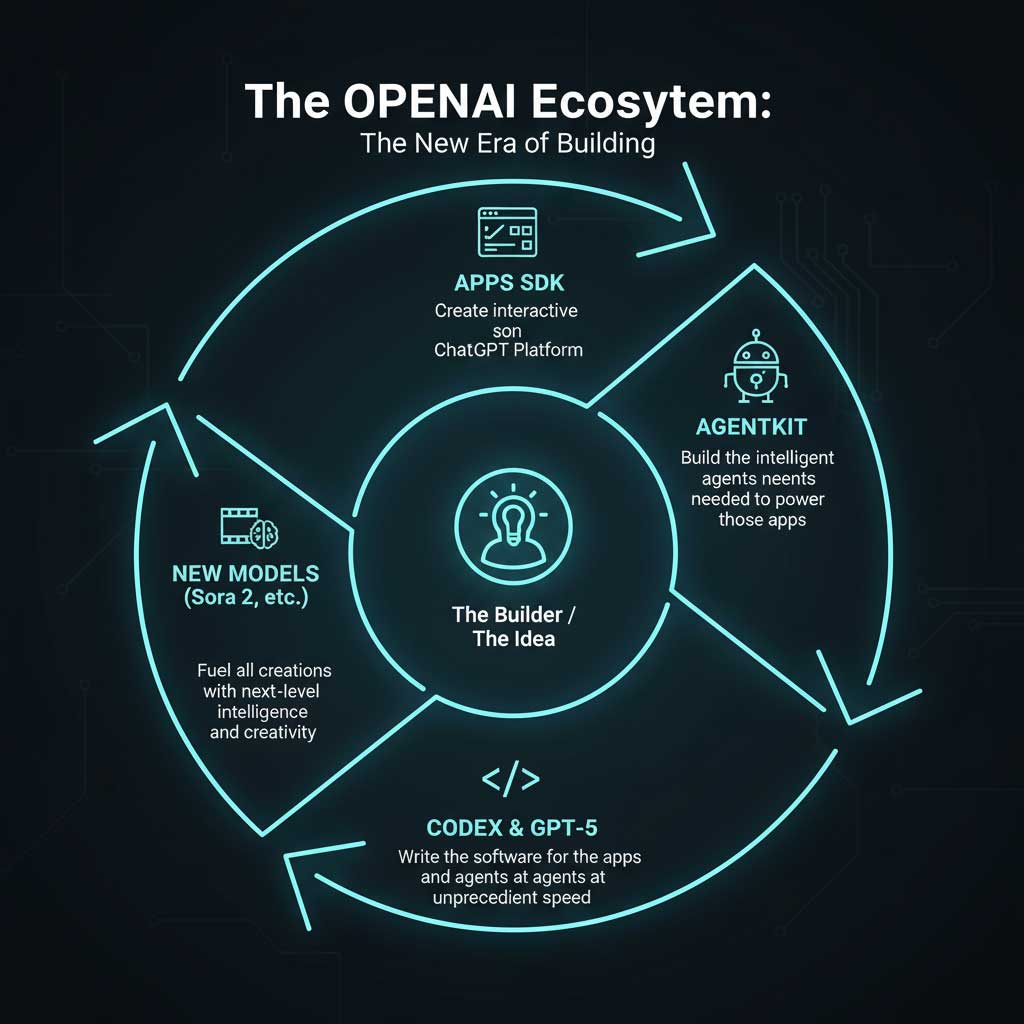

The announcements from OpenAI’s DevDay keynote are more than just a series of product updates; they represent a cohesive, powerful vision for the future of technology. By connecting the dots between these four pillars, a clear picture emerges: OpenAI is building a comprehensive, end-to-end ecosystem designed to empower a new generation of creators and builders.

The Apps SDK transforms ChatGPT into an interactive platform, creating a new surface for user engagement and commerce. AgentKit provides the toolkit to build the intelligent, autonomous systems needed to power these new experiences. The now generally available Codex, supercharged by GPT-5, fundamentally redefines the act of creation itself, making development faster and more accessible than ever. And underpinning it all are the new foundational models—the raw intelligence of GPT-5 Pro, the accessibility of GPT-Realtime-Mini, and the creative potential of Sora 2.

For businesses and marketers, this is a call to action. The tools to build deeply integrated, AI-powered customer experiences and automate complex internal workflows are no longer theoretical; they are available today. The ability to generate a marketing video from a prompt, build an interactive sales tool with natural language, or deploy a customer service agent that understands context is now within reach.

As Sam Altman stated, we have moved from playing with AI to building with it every day. This DevDay was a clear declaration that the era of the AI-powered builder has arrived. The challenge now is not a matter of technical feasibility, but of imagination. The tools are here, the platform is ready, and the future is waiting to be built—not in years or months, but in minutes.

Frequently Asked Questions (FAQ)

1. What is GPT-5 Pro, and how is it different from the GPT-4 models I’m using now?

GPT-5 Pro is OpenAI’s newest and most intelligent model, now available via API. Unlike GPT-4, which is a powerful general-purpose model, GPT-5 Pro is specifically designed for “hard tasks” that require an extremely high degree of accuracy and deep, complex reasoning. Think of it as a specialist for industries like finance (complex market analysis), law (interpreting case law), and healthcare (analyzing medical data), where the cost of being wrong is very high. While GPT-4 is the versatile workhorse, GPT-5 Pro is the expert surgeon you call in for the most critical operations.

2. What exactly is the Apps SDK, and can I build a complete application with a UI inside ChatGPT?

Yes, that’s precisely what it is. The Apps SDK is a full-stack toolkit that allows developers to build complete, interactive applications that run directly within the ChatGPT interface. You are no longer limited to text-based interactions. With the SDK, you can:

- Render a custom User Interface (UI): As seen in the Zillow demo with its interactive map.

- Connect to live data sources: Pull in real-time information from your servers.

- Trigger actions in other systems: For example, update a record in a CRM or complete a purchase.

It effectively turns ChatGPT into a platform or an operating system where your app can live.

3. My team has struggled to get AI agents into production. How does AgentKit solve this problem?

AgentKit is designed to directly address the common pain points that keep AI agents in the prototype phase. It provides a structured, end-to-end solution by offering four key components:

- Agent Builder: A visual, low-code tool to design and test the agent’s logic, reducing complex coding.

- ChatKit: A pre-built, embeddable chat interface, so you don’t have to build your own UI from scratch.

- Evals for Agents: A dedicated suite for measuring performance, tracing the agent’s decisions, and optimizing prompts, which is critical for ensuring reliability.

- Connector Registry: A secure way to connect your agent to internal and third-party tools, solving a major security and integration hurdle.

Essentially, it provides the scaffolding, testing framework, and UI components that teams would otherwise have to build themselves, dramatically speeding up the path to production.

4. Codex is now ‘Generally Available’ (GA). What does that mean, and what’s the significance of the new GPT-5-Codex model?

“General Availability” (GA) means Codex has moved out of its experimental, research-preview phase and is now a fully supported, production-ready product that OpenAI is confident in offering to all customers, particularly enterprises. The significance of the new GPT-5-Codex model is that it’s a version of GPT-5 specifically fine-tuned for “agentic coding.” This means it doesn’t just write code when asked; it can perform multi-step software engineering tasks, understand context, refactor complex codebases, and even dynamically decide how much “thinking time” to use for a difficult problem, making it a far more powerful and autonomous development partner.

5. Can I monetize the apps I build with the new Apps SDK inside ChatGPT?

Not yet, but it is on the roadmap. OpenAI explicitly stated that future plans include robust support for monetization within the ChatGPT app ecosystem. While the initial preview focuses on functionality, the company is building the commercial infrastructure. This includes the “Agentic Commerce Protocol,” which is designed to allow instant, frictionless checkout directly within a conversation, paving the way for developers to sell products, subscriptions, and services through their apps.

6. What are the biggest new features of the Sora 2 API, especially for marketers and content creators?

The Sora 2 API preview introduces two game-changing features for marketers and creators. First is enhanced controllability, which allows you to give much more detailed and nuanced prompts to get a video that precisely matches your stylistic vision. The second, and arguably bigger, feature is integrated sound generation. Sora 2 can now create a complete audio landscape for the video, including ambient sounds, synchronized effects, and background audio that is contextually grounded in the visuals. For marketers, this means you can go from a simple idea (like “a happy family enjoying our new cereal”) to a nearly finished, shareable video concept with visuals and sound in minutes.

7. What is GPT-Realtime-Mini, and why is it important for developing voice applications?

GPT-Realtime-Mini is a smaller, faster, and 70% cheaper version of OpenAI’s most advanced real-time voice model. Its importance is accessibility and scalability. While the top-tier voice model offers incredible quality, its cost and resource requirements can be a barrier for widespread use. GPT-Realtime-Mini retains the same high-quality, expressive, and human-like voice but at a price point and speed that makes it feasible for developers to build voice interactions into their applications at scale, from customer service bots to in-app voice assistants.

8. How will users discover the apps I build inside ChatGPT? Is there an app store?

Discovery will happen in two primary, conversational ways. There isn’t a traditional “app store” to browse, but rather an intelligent discovery engine.

- Direct Invocation: Users can ask for your app by name (e.g., “Use Canva to make a presentation about my Q3 results”).

- Conversational Suggestion: ChatGPT itself will suggest relevant apps based on the user’s conversation. If a user is planning a trip, ChatGPT might suggest a travel app to book flights or accommodations.

This organic, needs-based discovery model aims to put your app in front of the user at the exact moment they need it.

9. The Codex demo seemed to create an app from a sketch. Is it really possible to build software without writing code now?

For certain tasks, yes. The demo showcased that the barrier to creation is becoming dramatically lower. Codex was able to take a visual concept (a sketch) and generate the functional UI code. It also handled complex tasks like implementing communication protocols and integrating hardware (a camera and controller) based on natural language commands. While deep, complex software still requires engineering expertise, Codex acts as an incredibly powerful co-pilot that can automate huge portions of the development process. It empowers people with ideas (the “what”) to build functional software without needing to be an expert in the “how.”

10. What is the single biggest takeaway for businesses and developers from OpenAI’s DevDay?

The biggest takeaway is the shift from providing a single powerful tool (like the API) to building a complete, interconnected ecosystem for creation. OpenAI is no longer just offering intelligence; they are providing the platform (Apps SDK), the automation tools (AgentKit), the development engine (Codex), and the foundational models (GPT-5 Pro, Sora 2) needed to build the next generation of software and media. The core message is that the speed of creation has been compressed from months or years down to minutes, and the businesses that embrace this new paradigm of rapid, AI-powered building will be the ones to lead the future.