While artificial intelligence has masterfully conquered language and images, the world of tabular data—the structured rows and columns that form the backbone of modern enterprise—has remained a frontier governed by slower, more complex models. For decades, the bottleneck of endless training cycles, meticulous hyperparameter tuning, and challenging deployment has stifled innovation. This is the critical challenge that Prior Labs’ TabPFN-2.5 was engineered to solve.

Within the landscape of machine learning for tabular datasets, TabPFN-2.5 represents a landmark breakthrough. It is a next-generation tabular AI foundation model that eliminates the per-dataset training step, delivering unprecedented speed and predictive power. This article provides a deep dive into this revolutionary technology, exploring its unique architecture, its stunning performance against industry benchmarks, and its transformative impact on data-driven marketing analytics, finance, and healthcare. We will also analyze its significance within the broader context of the U.S. AI market in 2025, where efficiency and ROI have become paramount.

What Are Tabular AI Foundation Models? A Foundational Shift

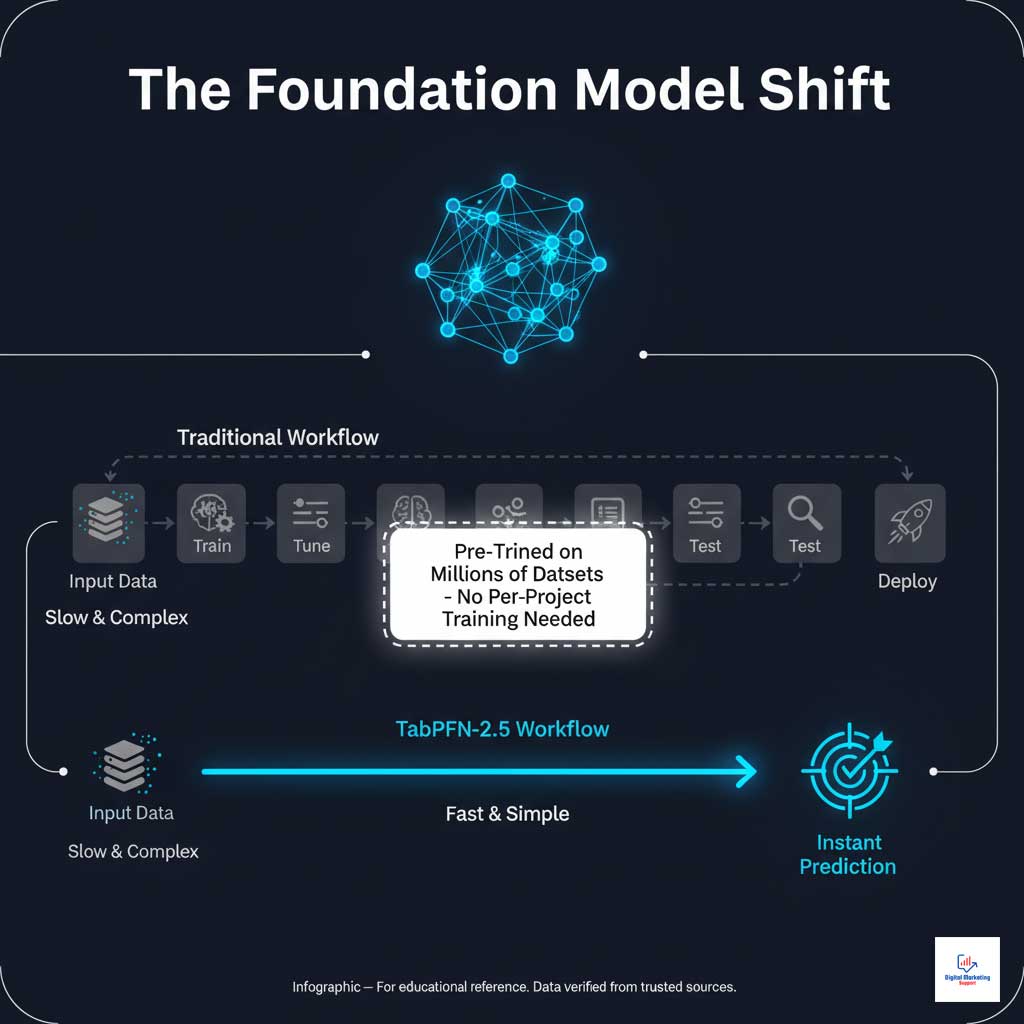

The concept of a foundation model, a large, pre-trained system capable of adapting to various tasks, is well-established in the realm of natural language with models like GPT-4. However, applying this paradigm to structured data is a revolutionary step. Tabular AI foundation models are designed to understand the underlying statistical patterns in table-based data without needing to be retrained for every new dataset.

This marks a significant departure from the traditional machine learning workflow. For years, models like XGBoost, LightGBM, and CatBoost have been the gold standard, but they come with a crucial dependency: they require extensive per-dataset training. This process is not only time-consuming but also demands deep expertise for model selection and hyperparameter tuning. TabPFN-2.5 shatters this old paradigm by offering a powerful no-training machine learning model that works out of the box.

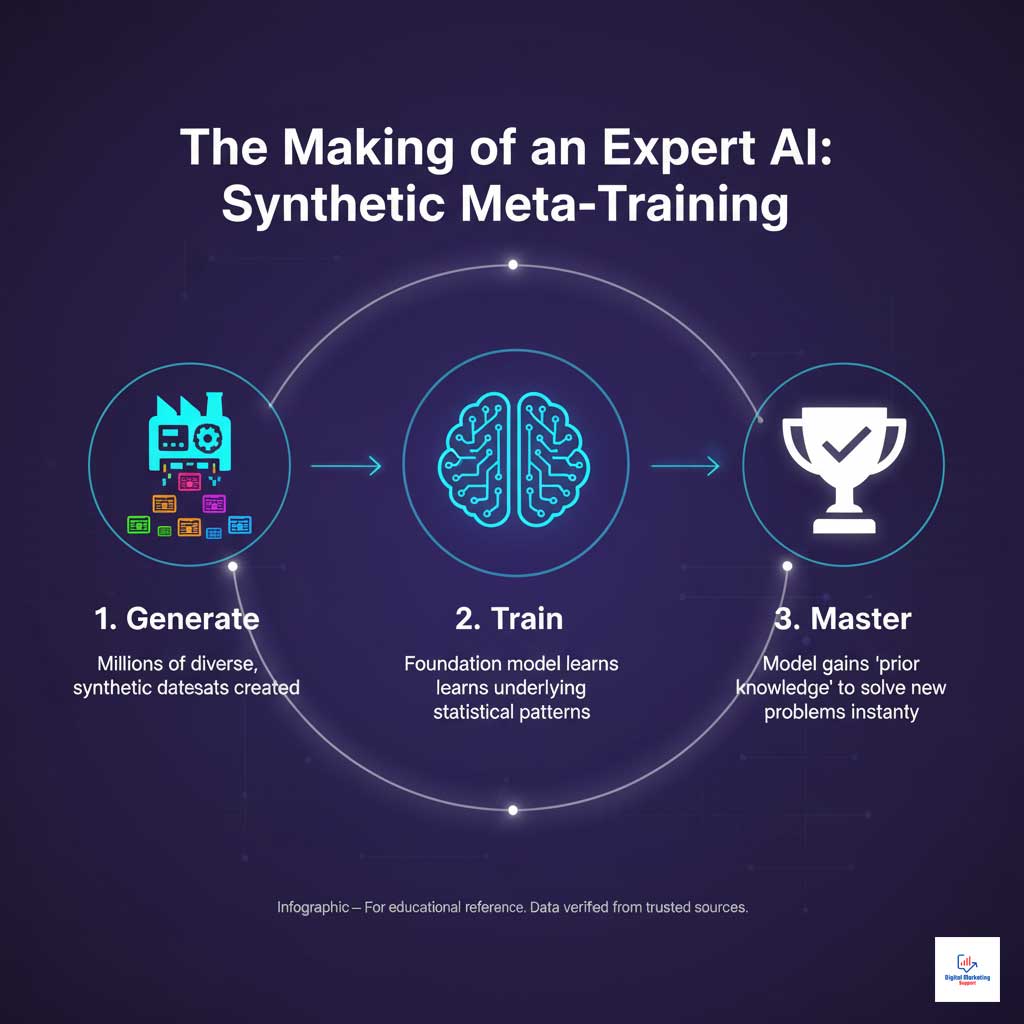

The Power of Synthetic Meta-Training

The magic behind TabPFN-2.5 lies in prior-data fitting (the “PFN” in TabPFN stands for Prior-data Fitted Network), a form of synthetic meta-training. Instead of learning from one dataset at a time, the model is pre-trained on millions of synthetically generated datasets. These datasets cover a vast and diverse range of statistical properties, distributions, and correlations.

By learning from this massive, varied library of data, Prior Labs’ model internalizes deep “prior” knowledge of how tabular data behaves. When it meets a new, unseen real-world dataset, the labeled training rows are passed in as context alongside the rows to be predicted, and the model produces its predictions in a single forward pass without updating any weights. Conventional tabular models cannot do this without a dedicated training run on each dataset.
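To make the idea of a prior concrete, the sketch below samples a stream of small synthetic classification tasks, each split into context rows and query rows. It covers only the data-generation half of prior-fitting; the network that would be trained across these tasks is omitted. The toy prior (random weights pushed through a randomly chosen nonlinearity, plus noise) and the names `sample_synthetic_dataset` and `synthetic_task_stream` are illustrative assumptions, far simpler than the prior Prior Labs actually uses.

```python
import math
import random


def sample_synthetic_dataset(rng, max_rows=200, max_features=8):
    """Draw one binary-classification dataset from a toy prior (illustrative only)."""
    n_rows = rng.randint(20, max_rows)
    n_features = rng.randint(1, max_features)
    # A random hidden mechanism: weights, a nonlinearity, and a noise level.
    weights = [rng.gauss(0.0, 1.0) for _ in range(n_features)]
    nonlinearity = rng.choice([math.tanh, math.sin, lambda z: z])
    noise = rng.uniform(0.0, 0.5)
    X, y = [], []
    for _ in range(n_rows):
        row = [rng.gauss(0.0, 1.0) for _ in range(n_features)]
        score = nonlinearity(sum(w * x for w, x in zip(weights, row)))
        score += rng.gauss(0.0, noise)
        X.append(row)
        y.append(1 if score > 0 else 0)
    return X, y


def synthetic_task_stream(num_tasks, seed=0):
    """Yield (context, query) splits of freshly sampled synthetic datasets."""
    rng = random.Random(seed)
    for _ in range(num_tasks):
        X, y = sample_synthetic_dataset(rng)
        split = len(X) // 2
        # The model would see context rows with labels and predict query labels.
        yield (X[:split], y[:split]), (X[split:], y[split:])


# Usage: every task differs in size, width, and underlying mechanism.
for (ctx_X, ctx_y), (qry_X, qry_y) in synthetic_task_stream(3):
    print(f"{len(ctx_X)} context rows, {len(qry_X)} query rows, {len(ctx_X[0])} features")
```

Each meta-training step would show the network the context rows with their labels and score its predictions on the query rows. Because every task is freshly sampled, the network cannot memorize any single dataset and has to learn general strategies instead.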

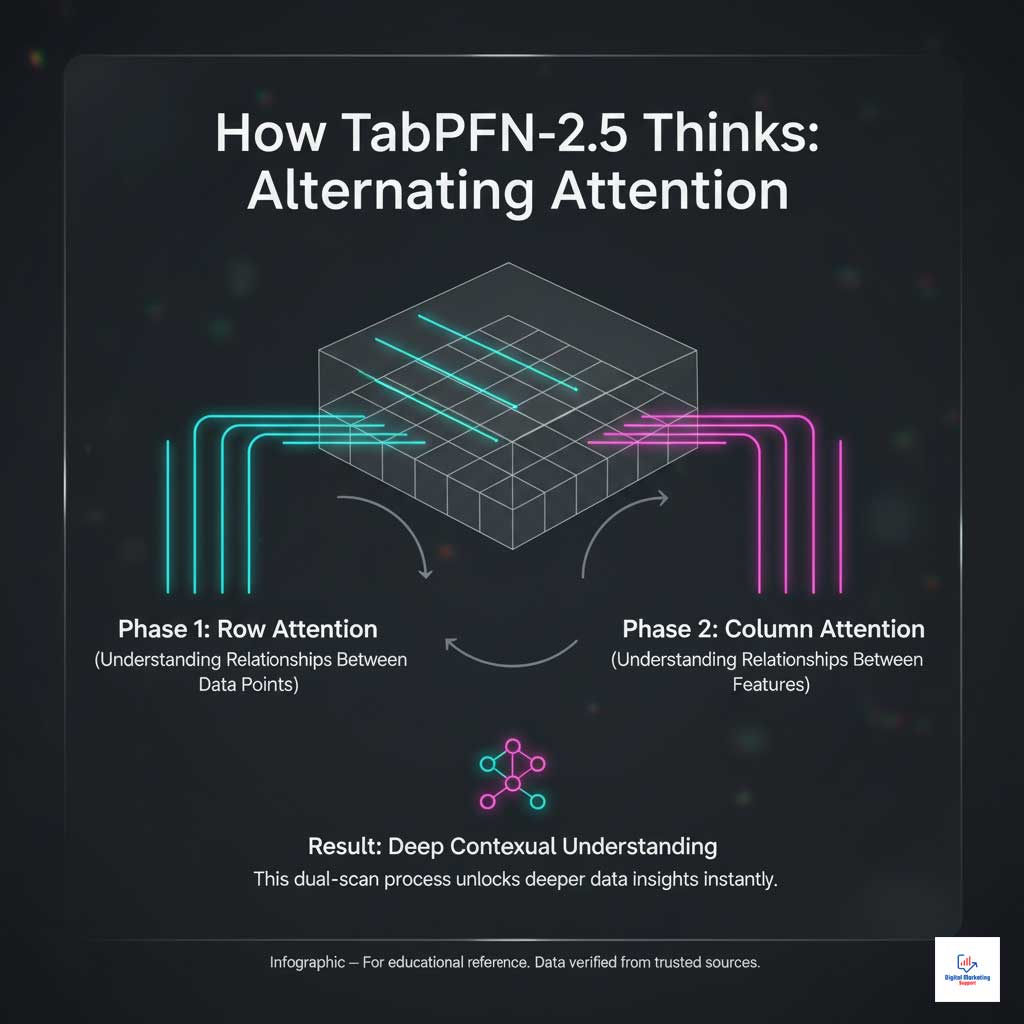

Under the Hood: The Alternating Attention Transformer Architecture

At the core of TabPFN-2.5 is its sophisticated alternating attention transformer architecture. While it shares a name with the transformers used in language models, its function is uniquely adapted for the two-dimensional structure of tables. It processes data by “attending” to both rows (samples) and columns (features) in an alternating sequence.

This dual-focus mechanism allows the model to capture complex, non-linear relationships that often elude other architectures. It models both how features interact with each other and how individual data points relate to the overall distribution. This technical advantage translates directly into a powerful business benefit: high accuracy delivered almost instantaneously, without manual feature engineering or prolonged training cycles. It is a key reason TabPFN-2.5 sits at the forefront of current AI trends in tabular data.
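The alternating pattern can be sketched in plain Python. In the toy below, every cell of the table is an embedding vector; a feature-attention step lets each cell attend across its own row, and an item-attention step lets each cell attend down its column. Learned query/key/value projections, multiple heads, normalization, and MLP layers are all left out (identity projections are an assumption made for brevity), and the rule that rows being predicted attend only to training rows is an assumption based on public descriptions of TabPFN, not Prior Labs' code.

```python
import math
from typing import List

Vector = List[float]
Table = List[List[Vector]]  # table[row][col] is the embedding of one cell


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query (identity projections)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(d)]


def feature_attention(table: Table) -> Table:
    """Each cell attends to every cell in its own row (across features)."""
    return [[add(cell, attend(cell, row, row)) for cell in row] for row in table]


def item_attention(table: Table, n_train: int) -> Table:
    """Each cell attends down its column, reading only the training rows."""
    n_cols = len(table[0])
    columns = [[table[r][c] for r in range(n_train)] for c in range(n_cols)]
    return [
        [add(cell, attend(cell, columns[c], columns[c])) for c, cell in enumerate(row)]
        for row in table
    ]


def alternating_attention(table: Table, n_train: int, layers: int = 2) -> Table:
    """Stack feature- and item-attention steps, alternating as in the text."""
    for _ in range(layers):
        table = feature_attention(table)
        table = item_attention(table, n_train)
    return table


# Usage: 2 training rows + 1 row to predict, 2 features, 2-dim cell embeddings.
table = [
    [[1.0, 0.0], [0.5, 0.5]],
    [[0.0, 1.0], [0.2, -0.3]],
    [[0.3, 0.3], [-1.0, 0.4]],
]
out = alternating_attention(table, n_train=2)
print(len(out), len(out[0]), len(out[0][0]))  # → 3 2 2
```

Because prediction rows only ever read from training rows, changing a prediction row leaves every training-row output unchanged, which is what lets a single forward pass score new rows without leaking them into the context.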

Benchmarking TabPFN-2.5: Performance in the Real World

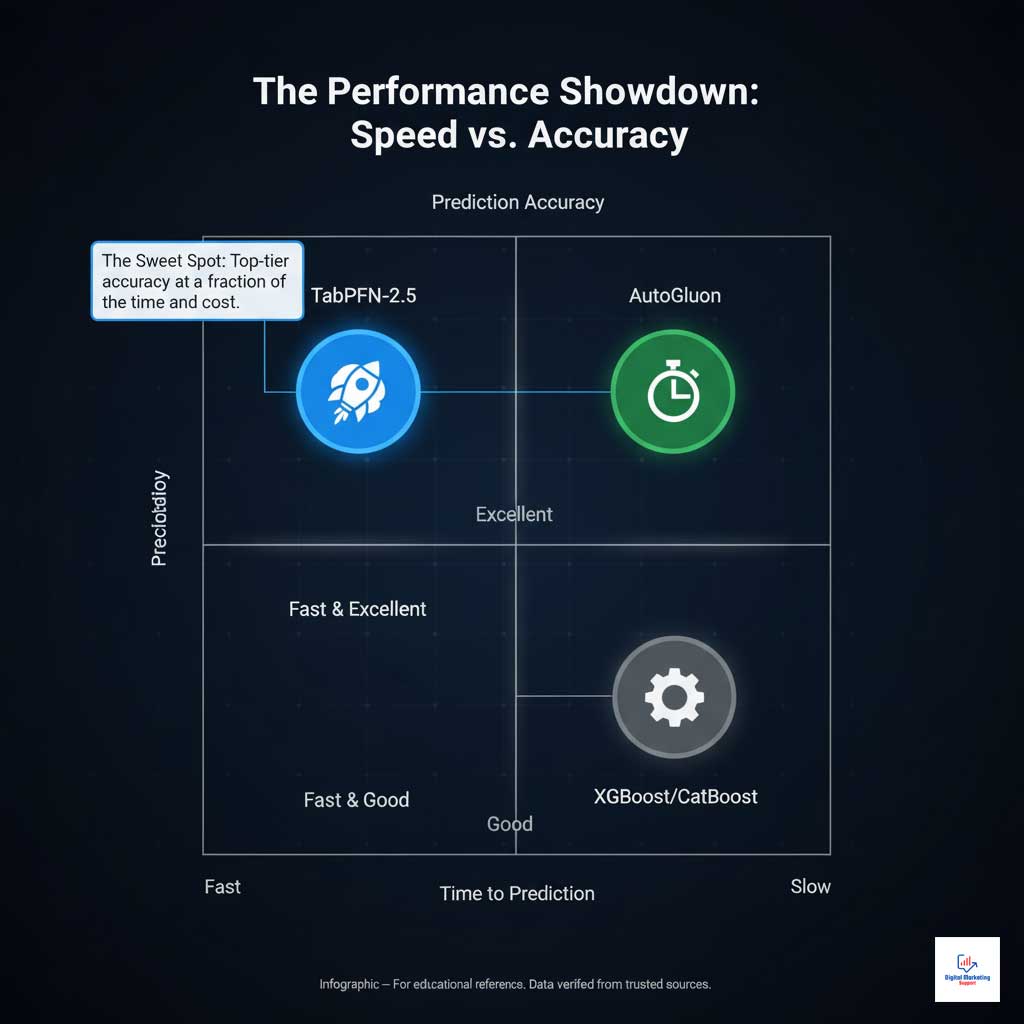

In the competitive field of machine learning, performance is everything. TabPFN-2.5 has firmly established itself as a top-tier performer, particularly for the small-to-medium datasets (up to roughly 50,000 data points) that represent the majority of real-world business problems. Its ability to deliver high accuracy in under a second makes it a disruptive force in production-scale tabular ML.

A frequent question from data science teams is how this new model stacks up against established champions. The answer is that TabPFN-2.5 not only competes but often wins, especially when time-to-insight is a critical factor. The era of waiting hours for a model to train is rapidly coming to an end for many common use cases.

TabPFN-2.5 vs. AutoGluon and Tree-Based Models: A Head-to-Head Comparison

Choosing the right tool for the job is essential for any data science project. The decision often involves a trade-off between speed, accuracy, and complexity. This is where a direct comparison of TabPFN-2.5 vs AutoGluon and tree-based models becomes incredibly insightful, showcasing the unique value proposition of this powerful tabular AI foundation model.

| Feature | TabPFN-2.5 | XGBoost / CatBoost | AutoGluon |
| --- | --- | --- | --- |
| Model Paradigm | No-Training Foundation Model | Gradient-Boosted Trees | Automated Ensemble ML |
| Primary Use Case | Rapid, accurate prediction on datasets up to ~50k rows | High-performance baseline on any size dataset | Exhaustive model search for maximum accuracy |
| Inference Speed | < 1 second (single forward pass) | Seconds to minutes (after training) | Minutes to hours |
| Training Time | None required | Minutes to hours | Potentially hours |
| Ease of Deployment | Very High (pip-installable Python package) | High (mature ecosystem) | Moderate to Complex |
| Performance Niche | Outperforms on small/medium data; strong prior | Often the top performer on large, clean data | Highest possible accuracy, but at high cost |

This comparison highlights that Prior Labs has carved out a crucial niche: best-in-class performance for a vast range of common business problems where speed is as important as accuracy.

Real-World Dataset Analysis and Verified Statistics

The claims of TabPFN-2.5’s performance are not just theoretical. They are backed by real-world dataset analysis across numerous public benchmarks, where the model has repeatedly outperformed strong, tuned baselines.

On benchmark datasets with up to 50,000 data points, the Real-TabPFN-2.5 variant (a version further trained on real-world datasets) has been shown to consistently outperform highly tuned versions of XGBoost and CatBoost. Even more impressively, it often matches the accuracy of AutoGluon, an automated machine learning framework that trains and ensembles dozens of models, but delivers its results in a tiny fraction of the time. This proven performance solidifies its reputation as one of the best AI models for production-scale tabular datasets in 2025.

Practical Strategies: Deploying Production-Scale Tabular ML with TabPFN-2.5

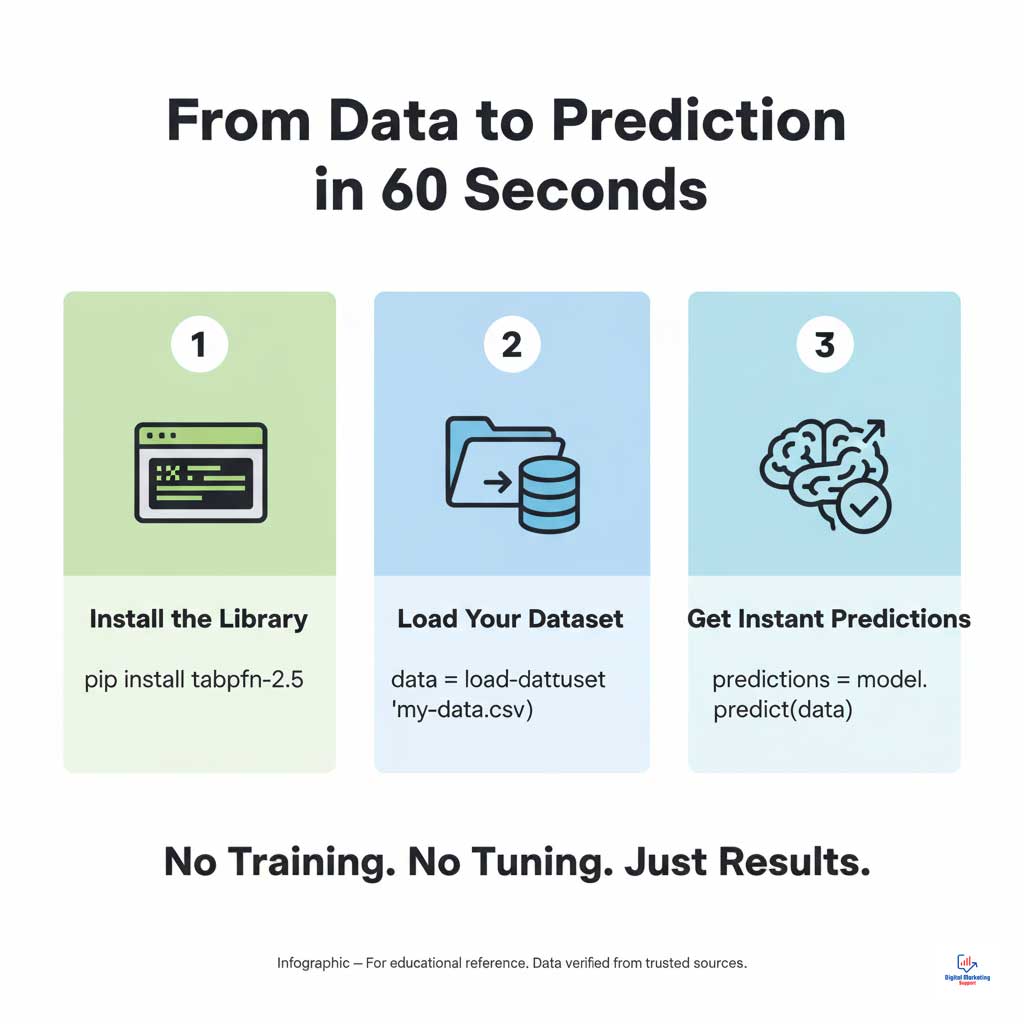

One of the most compelling aspects of TabPFN-2.5 is its simplicity and ease of deployment. It was designed from the ground up to accelerate the path from data to production, enabling teams to build and deploy powerful models for scalable AI modeling without the traditional friction. This practical accessibility is a key driver of its adoption.

The streamlined workflow means that data scientists and ML engineers can focus more on solving business problems and less on the mechanics of model training. This is especially valuable in today’s fast-paced environment, where rapid iteration is crucial for success in data-driven marketing analytics and other competitive fields.

Your First Prediction: Using the Python Package and API

Getting started with TabPFN-2.5 is remarkably straightforward. The model is available as a Python package that can be installed with a single command, and the workflow follows the familiar scikit-learn pattern:

- Install the package: `pip install tabpfn`

- Load your data: Use pandas to load your training and test sets.

- Predict: Create a `TabPFNClassifier`, call `fit` on the training data (which supplies it to the model as context; no model weights are updated), then call `predict` on the test set.

This entire process can be executed in just a few lines of code, without any need for defining a training loop, setting hyperparameters, or managing complex dependencies. This is the essence of a no-training machine learning model.
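The contract behind that workflow is that `fit` runs no training loop: it hands the labeled rows to the model as context, and `predict` does the real work in one pass. The toy class below mimics that interface in pure Python so it runs anywhere; `ToyInContextClassifier` is a hypothetical name, and its similarity-weighted vote is a simple kernel method standing in for TabPFN's transformer. With the real package, the corresponding calls are `TabPFNClassifier().fit(X_train, y_train)` followed by `.predict(X_test)` or `.predict_proba(X_test)`.

```python
import math
from collections import defaultdict


class ToyInContextClassifier:
    """Hypothetical stand-in with a fit/predict interface like a prior-fitted model.

    fit() runs no training loop; it only stores the labeled rows as context.
    predict() is a Gaussian-kernel weighted vote over that context (a simple
    kernel method), not TabPFN's transformer.
    """

    def __init__(self, bandwidth=1.0):
        self.bandwidth = bandwidth

    def fit(self, X, y):
        # No gradient updates: the training set simply becomes the context.
        self.X_ctx, self.y_ctx = list(X), list(y)
        self.classes_ = sorted(set(self.y_ctx))
        return self

    def predict_proba(self, X):
        probs = []
        for row in X:
            votes = defaultdict(float)
            for ctx_row, label in zip(self.X_ctx, self.y_ctx):
                dist2 = sum((a - b) ** 2 for a, b in zip(row, ctx_row))
                votes[label] += math.exp(-dist2 / (2 * self.bandwidth ** 2))
            total = sum(votes.values())
            if total == 0.0:  # every context row is too far away: fall back to uniform
                probs.append({c: 1.0 / len(self.classes_) for c in self.classes_})
            else:
                probs.append({c: votes[c] / total for c in self.classes_})
        return probs

    def predict(self, X):
        # One pass over the context per query row; pick the most probable class.
        return [max(p, key=p.get) for p in self.predict_proba(X)]


X_train = [[0.0, 0.0], [0.1, 0.2], [1.0, 1.0], [0.9, 1.1]]
y_train = [0, 0, 1, 1]
clf = ToyInContextClassifier().fit(X_train, y_train)
print(clf.predict([[0.05, 0.1], [1.0, 0.9]]))  # → [0, 1]
```

The design choice worth noticing is where the cost lands: `fit` is essentially free, and all computation happens at prediction time, which is the trade-off a no-training model makes.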

The Role of the Distillation Engine

For more advanced use cases requiring deployment on edge devices or in extremely low-latency environments, Prior Labs offers a distillation engine. This feature allows users to take the large, powerful TabPFN-2.5 foundation model and “distill” its knowledge into a smaller, faster, more specialized model.

This technique is a cornerstone of production-scale tabular ML, as it provides the flexibility to balance performance with resource constraints. It enables organizations to leverage the intelligence of a large foundation model to create lightweight models tailored perfectly to their specific operational needs.
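The core idea of distillation can be shown in a few lines: query the large model (the teacher) on many sample inputs, then fit a much cheaper model (the student) to reproduce its answers. In this sketch, `teacher_predict` is a hypothetical fixed rule standing in for TabPFN-2.5, and the student is a single-feature threshold rule (a decision stump) chosen for brevity; this is not Prior Labs' distillation engine or its API.

```python
import random


def teacher_predict(row):
    """Hypothetical stand-in for the large model: a fixed nonlinear rule."""
    x0, x1 = row
    return 1 if x0 + 0.3 * x1 * x1 > 0.5 else 0


def fit_stump(X, y):
    """Find the single-feature threshold rule that best reproduces labels y."""
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for flip in (False, True):
                hits = sum(int((row[f] > t) != flip) == label for row, label in zip(X, y))
                if best is None or hits > best[0]:
                    best = (hits, f, t, flip)
    _, f, t, flip = best
    return lambda row: int((row[f] > t) != flip)


def distill(teacher, n_samples=300, n_features=2, seed=0):
    rng = random.Random(seed)
    # 1. Sample unlabeled inputs covering the region the student must serve.
    X = [[rng.uniform(-1, 2) for _ in range(n_features)] for _ in range(n_samples)]
    # 2. Label them with the teacher; these labels carry its knowledge.
    y = [teacher(row) for row in X]
    # 3. Fit a far cheaper student to imitate the teacher.
    return fit_stump(X, y)


student = distill(teacher_predict)
rng = random.Random(42)
fresh = [[rng.uniform(-1, 2), rng.uniform(-1, 2)] for _ in range(1000)]
agreement = sum(student(r) == teacher_predict(r) for r in fresh) / len(fresh)
print(f"student agrees with teacher on {agreement:.0%} of fresh inputs")
```

Serving the student costs one comparison per prediction, while its agreement with the teacher on fresh inputs measures how much accuracy was traded away; a real deployment would pick a more expressive student to keep that gap small.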

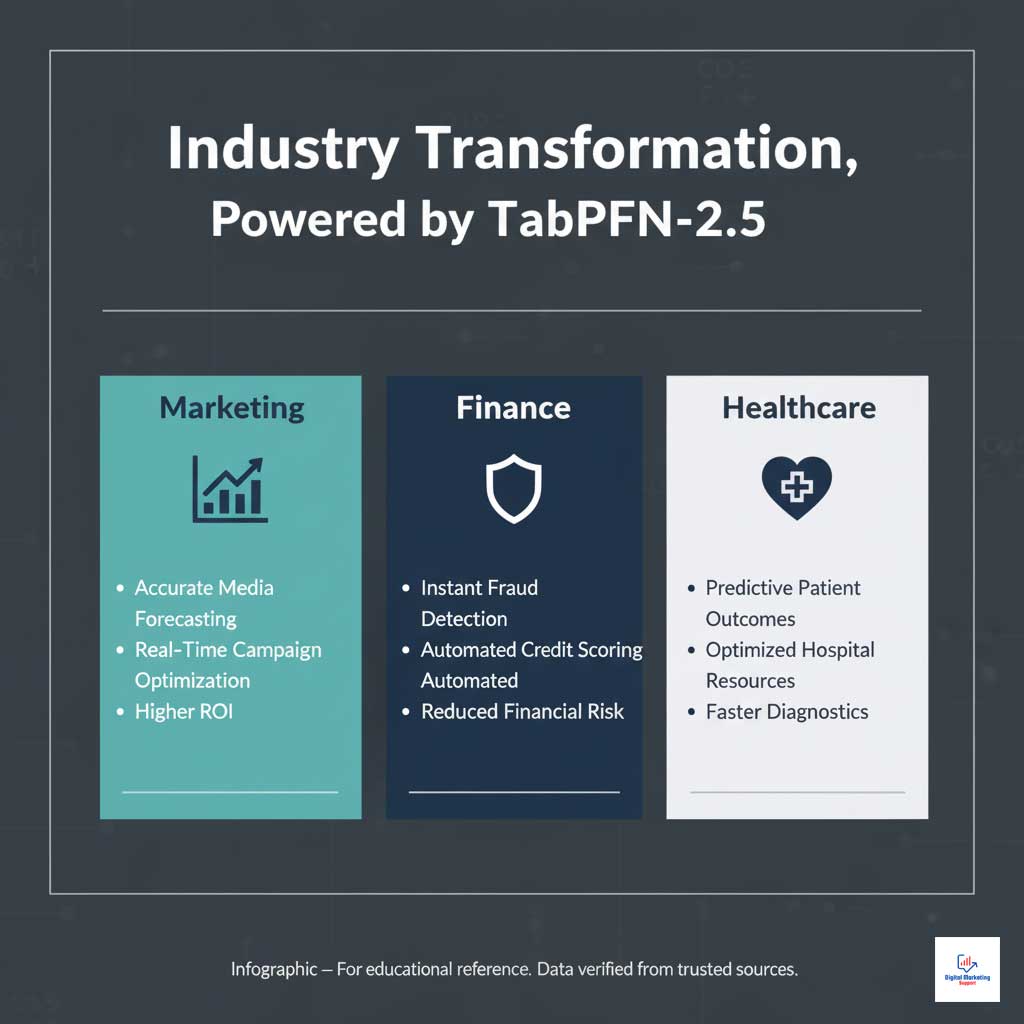

Industry Transformation: Case Studies in High-Impact Sectors

The theoretical advantages of TabPFN-2.5 translate into tangible, real-world value across a multitude of industries. From optimizing multi-million dollar advertising campaigns to predicting patient outcomes, this tabular AI foundation model is already delivering significant ROI and driving innovation in AI in finance and healthcare.

These case studies show the model at work on complex, real-world business challenges. The insights gained are helping companies make smarter, faster, and more data-driven decisions.

Case Study: Exito Transforms Media Spend Forecasting

The marketing analytics firm Exito faced a common but critical challenge: accurately forecasting the performance of media spend across numerous digital channels. Their traditional workflow, which relied on training multiple models, was slow and cumbersome, hindering their ability to react quickly to market changes.

By integrating TabPFN-2.5 into their analytics pipeline, Exito completely transformed its approach to AI-driven media forecasting. The firm reported a 10x reduction in model deployment time. This newfound speed and automation allowed them to provide more agile and accurate data-driven marketing analytics, directly improving campaign ROI for their clients.

AI in Finance and Healthcare: New Frontiers of Accuracy

The impact of TabPFN-2.5 extends far beyond marketing. In high-stakes sectors like finance and healthcare, its combination of speed and accuracy is unlocking new possibilities.

- AI in Finance: Financial institutions are using the model for real-time fraud detection, credit scoring, and algorithmic trading. Its ability to generate a prediction in milliseconds is critical for applications where any delay can result in significant financial loss.

- AI in Healthcare: Hospitals and research organizations are applying it to predict patient readmission rates, diagnose diseases from electronic health records, and optimize the allocation of critical resources like ICU beds.

The versatility of this tabular AI foundation model is showcased in its wide range of applications. The following table highlights some of the key use cases where TabPFN-2.5 is making a measurable impact.

| Industry Vertical | Use Case for TabPFN-2.5 | Key Business Impact |
| --- | --- | --- |
| Digital Marketing | Media Mix Modeling & Spend Forecasting | Improved ROI, faster campaign adjustments |
| Financial Services | Real-Time Fraud Detection & Credit Risk | Reduced financial loss, instant loan decisions |
| Healthcare | Patient Outcome Prediction | Better resource allocation, proactive care |
| E-commerce & Retail | Customer Churn Prediction | Increased customer retention and lifetime value |
| Manufacturing | Predictive Maintenance | Reduced equipment downtime, lower repair costs |

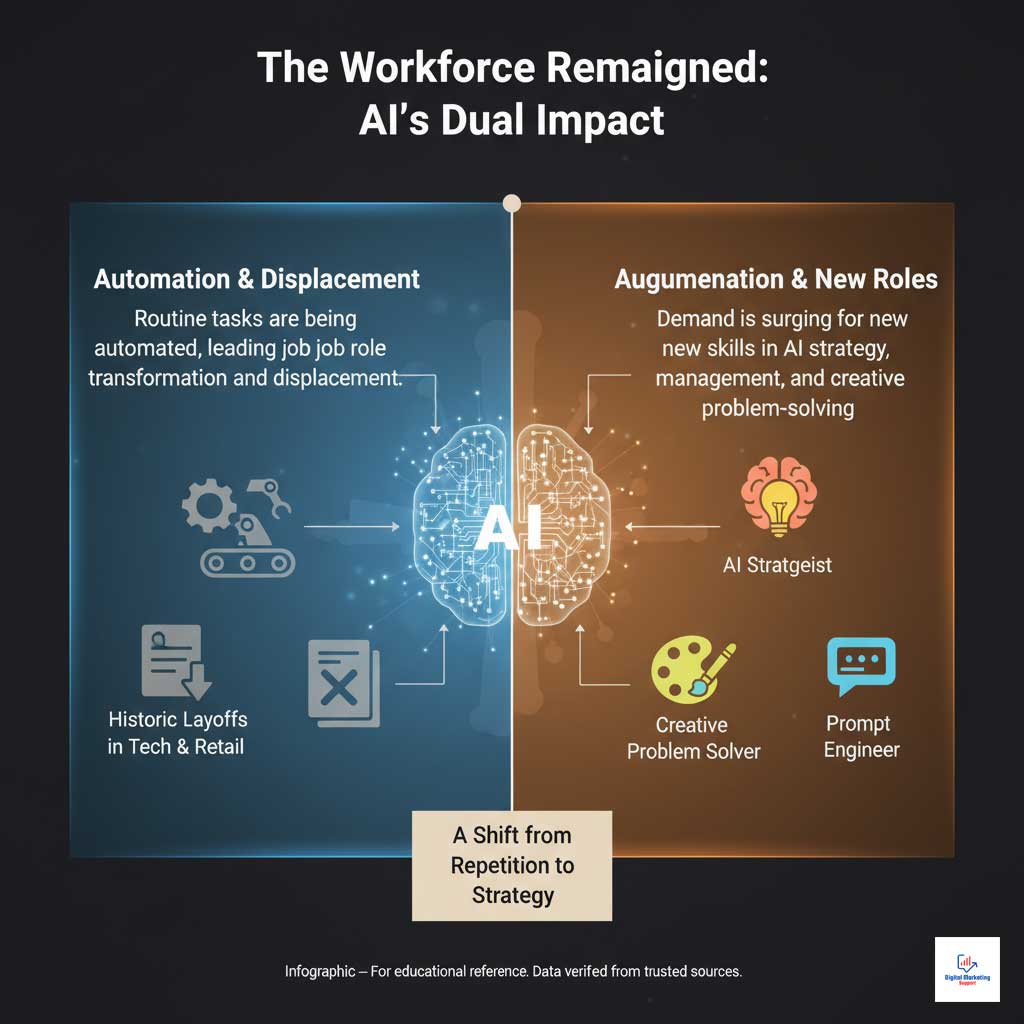

The Macro View: AI Adoption Effects on the U.S. Workforce

The rapid advancement and adoption of powerful AI tools like TabPFN-2.5 are not happening in a vacuum. They are a driving force behind profound shifts in the U.S. AI market in 2025, influencing everything from corporate strategy to the very structure of the labor market. Understanding these AI adoption effects on the workforce is crucial for leaders navigating this new landscape.

While the efficiency gains are undeniable, they also bring complex societal challenges. The conversation has moved beyond just technological implementation to include the economic and human impact of this transformation, a key factor in today’s AI trends in tabular data.

The “Great Re-Balancing”: AI, Layoffs, and the U.S. Labor Market

In October 2025, the U.S. labor market experienced a wave of historic layoffs, particularly concentrated in the technology and retail sectors. A widely cited report from the firm Challenger, Gray & Christmas named AI adoption and corporate cost-cutting among the leading reasons for the announced cuts.

This “Great Re-Balancing” reflects a structural shift where companies are leveraging AI to automate routine analytical and operational tasks. This economic pressure further underscores the demand for hyper-efficient solutions like TabPFN-2.5, which allow businesses to achieve more with fewer computational and human resources.

The Human Side of AI: Insights from LinkedIn and Gallup Research

However, the story is not just about replacement. Leading research from organizations like LinkedIn and Gallup emphasizes that successful enterprise AI adoption is fundamentally a human challenge. Their studies highlight that companies achieving the best results are those that invest heavily in human-centric management.

This involves upskilling and reskilling the existing workforce to collaborate with AI systems, focusing on uniquely human skills like strategic thinking, creativity, and complex problem-solving. The true potential of scalable AI modeling is unlocked when human expertise is augmented, not just automated.

The Future of Enterprise Analytics: Trends for 2026 and Beyond

The incredible pace of innovation in machine learning for tabular datasets shows no signs of slowing. The emergence of TabPFN-2.5 is not an endpoint but rather a glimpse into the future of enterprise analytics. Several key trends are set to define the landscape in 2026 and beyond.

We are moving toward an ecosystem where the line between business intelligence and predictive AI blurs, making advanced analytics more accessible and integrated than ever before. The evolution of tabular AI foundation models will be at the heart of this transformation.

The Convergence of BI and Predictive AI

A major trend is the native integration of powerful predictive engines directly into business intelligence (BI) platforms. Expect tools like TabPFN-2.5 to become seamlessly embedded within platforms like Powerdrill Bloom, Tableau, and Microsoft Power BI.

This convergence will empower business analysts and domain experts to run sophisticated predictive scenarios without writing a single line of code. This democratization of production-scale tabular ML will accelerate data-driven decision-making across entire organizations.

What’s Next for Tabular AI Foundation Models?

The development of tabular AI foundation models is still in its early stages. Future iterations are likely to bring even more impressive capabilities:

- Massive Scale: Models capable of handling datasets with millions or even billions of rows.

- Multi-Modal Inputs: The ability to process and reason over mixed data types, combining tabular data with text, images, or other unstructured information.

- Enhanced Explainability: Advanced features that provide clear, human-readable explanations for every prediction, building greater trust and transparency.

Summary & Key Takeaways: Why TabPFN-2.5 is a Game-Changer

Prior Labs’ TabPFN-2.5 is more than just an incremental improvement; it is a paradigm shift in how we approach machine learning for tabular datasets. Its core advantages—unmatched speed, the elimination of the training requirement, and top-tier performance on a vast range of real-world problems—make it a truly transformative technology.

The central question of how TabPFN-2.5 improves speed and scale is answered by its innovative no-training approach and powerful transformer architecture. For organizations looking for the best AI models for production-scale tabular datasets in 2025, TabPFN-2.5 presents a compelling, production-ready solution that delivers immediate value. It empowers teams to move faster, predict with greater accuracy, and unlock the full potential of their data.

Frequently Asked Questions (FAQ)

What exactly is TabPFN-2.5?

TabPFN-2.5 is a no-training tabular AI foundation model from Prior Labs. It uses a sophisticated alternating attention transformer architecture to make highly accurate predictions on datasets with up to 50,000 rows in less than a second, without requiring any training on your dataset.

How is TabPFN-2.5 different from models like XGBoost?

The primary difference is that TabPFN-2.5 is a pre-trained model that does not require training on your specific dataset. It performs inference instantly, whereas traditional models like XGBoost must be trained and meticulously tuned for every new problem, a process that can take hours.

Which industries benefit most from scalable tabular data AI models?

Industries that rely on fast and accurate predictions from structured data see the most significant benefits. This includes finance (for real-time fraud detection), healthcare (for patient diagnostics), and digital marketing (for media spend forecasting).

Is there a limit to the dataset size TabPFN-2.5 can handle?

Yes. It currently performs best on datasets with up to about 50,000 samples and 2,000 features. For significantly larger datasets, traditional gradient-boosted models or other deep learning architectures may be more appropriate.

Can TabPFN-2.5 be used for both classification and regression?

Absolutely. The model is designed to be highly versatile and can handle both classification (predicting a category) and regression (predicting a numerical value) tasks on tabular data with high accuracy.

What does “alternating attention transformer” mean in simple terms?

It is an AI architecture designed specifically for tables. Its layers alternate between looking across the columns (features) within each row and looking across the rows (individual data points) within each column, which lets it capture interactions between features as well as relationships between data points.

How can marketing leaders leverage AI to reduce costs and improve forecasting?

By using tools like TabPFN-2.5, marketing leaders can automate complex and time-consuming modeling tasks. This leads to faster insights for campaign optimization, more accurate media spend forecasts, and lower computational and operational overhead, directly improving ROI.

Is TabPFN-2.5 an open-source tool?

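Yes, TabPFN is available as an open-source Python package, which makes it highly accessible to the global data science and developer community for research, experimentation, and production deployment. Note that the pretrained TabPFN-2.5 model weights are distributed under their own license, so check its terms before commercial use.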

What is the real-world impact of mass layoffs driven by AI technologies?

The primary impact is a significant re-balancing of the labor market. While AI-driven efficiency can lead to job displacement in roles involving routine data analysis, it also creates strong demand for new skills in AI management, data strategy, and prompt engineering.

How does TabPFN-2.5 fit into the AI-powered data preparation trend?

It acts as a powerful accelerator in the analytics pipeline. Once data is cleaned and prepared (often using other AI-driven tools), TabPFN-2.5 can generate predictions almost instantly, closing the gap between data preparation and actionable insight.

What are the main competitors to TabPFN-2.5 in 2025?

Its main competitors include comprehensive AutoML platforms like AutoGluon, which prioritize maximum accuracy at the cost of time, and highly-tuned traditional models like CatBoost and XGBoost, which remain strong performers on very large datasets.

Can enterprises see immediate ROI from implementing transformer-based tabular ML?

Yes, immediate ROI is highly achievable. It comes from drastically reduced computational costs (no expensive training cycles), faster time-to-market for new predictive models, and improved business decisions driven by more accurate, near-real-time forecasting.